Question/Issue:

The compiler rejects several struct initializers in the Edge Impulse SDK's convolution kernels (conv.cpp and depthwise_conv.cpp) with the error "either all initializer clauses should be designated or none of them should be".

Project ID:

NA

Context/Use case:

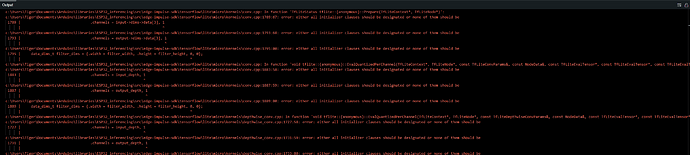

I used Edge Impulse to create an image classification model for my ESP32-S CAM, which is connected to an MB board. The camera works perfectly on its own, but as soon as I include the header file from Edge Impulse, compilation fails with the following errors:

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/conv.cpp: In function ‘TfLiteStatus tflite::{anonymous}::Prepare(TfLiteContext*, TfLiteNode*)’:

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/conv.cpp:1789:67: error: either all initializer clauses should be designated or none of them should be

1789 | .channels = input->dims->data[3], 1

| ^

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/conv.cpp:1793:68: error: either all initializer clauses should be designated or none of them should be

1793 | .channels = output->dims->data[3], 1

| ^

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/conv.cpp:1795:80: error: either all initializer clauses should be designated or none of them should be

1795 | data_dims_t filter_dims = {.width = filter_width, .height = filter_height, 0, 0};

| ^

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/conv.cpp: In function ‘void tflite::{anonymous}::EvalQuantizedPerChannel(TfLiteContext*, TfLiteNode*, const TfLiteConvParams&, const NodeData&, const TfLiteEvalTensor*, const TfLiteEvalTensor*, const TfLiteEvalTensor*, TfLiteEvalTensor*)’:

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/conv.cpp:1883:58: error: either all initializer clauses should be designated or none of them should be

1883 | .channels = input_depth, 1

| ^

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/conv.cpp:1887:59: error: either all initializer clauses should be designated or none of them should be

1887 | .channels = output_depth, 1

| ^

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/conv.cpp:1889:80: error: either all initializer clauses should be designated or none of them should be

1889 | data_dims_t filter_dims = {.width = filter_width, .height = filter_height, 0, 0};

| ^

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/depthwise_conv.cpp: In function ‘void tflite::{anonymous}::EvalQuantizedPerChannel(TfLiteContext*, TfLiteNode*, const TfLiteDepthwiseConvParams&, const NodeData&, const TfLiteEvalTensor*, const TfLiteEvalTensor*, const TfLiteEvalTensor*, TfLiteEvalTensor*)’:

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/depthwise_conv.cpp:1727:58: error: either all initializer clauses should be designated or none of them should be

1727 | .channels = input_depth, 1

| ^

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/depthwise_conv.cpp:1731:59: error: either all initializer clauses should be designated or none of them should be

1731 | .channels = output_depth, 1

| ^

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/depthwise_conv.cpp:1733:80: error: either all initializer clauses should be designated or none of them should be

1733 | data_dims_t filter_dims = {.width = filter_width, .height = filter_height, 0, 0};

| ^

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/depthwise_conv.cpp: In function ‘TfLiteStatus tflite::{anonymous}::Prepare(TfLiteContext*, TfLiteNode*)’:

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/depthwise_conv.cpp:1836:67: error: either all initializer clauses should be designated or none of them should be

1836 | .channels = input->dims->data[3], 1

| ^

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/depthwise_conv.cpp:1840:68: error: either all initializer clauses should be designated or none of them should be

1840 | .channels = output->dims->data[3], 1

| ^

/Users/yam/Documents/Arduino/libraries/Smart-Waste-Segregation_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/depthwise_conv.cpp:1842:80: error: either all initializer clauses should be designated or none of them should be

1842 | data_dims_t filter_dims = {.width = filter_width, .height = filter_height, 0, 0};

| ^

exit status 1

Compilation error: exit status 1
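
Every error above points at the same pattern: a brace initializer that names some fields (`.width = ...`, `.channels = ...`) and then continues with unnamed positional values (`1` or `0, 0`). C++ does not allow mixing the two styles, and the compiler here treats the mix as a hard error. Below is a minimal sketch of the pattern and two ways to rewrite it. `dims_t` is a hypothetical stand-in for the SDK's `data_dims_t`; the field names `width`, `height`, and `channels` come from the error output, while the fourth field `extra` and the helper function names are assumptions made for illustration.

```cpp
#include <cstdint>

// Hypothetical stand-in for data_dims_t. width/height/channels appear in
// the error output; the name of the fourth field ("extra") is assumed.
struct dims_t {
    int32_t width;
    int32_t height;
    int32_t channels;
    int32_t extra;
};

// The rejected pattern, kept as a comment because it does not compile:
//   dims_t d = {.width = w, .height = h, 0, 0};
// Two clauses are designated and two are positional.

// Option 1: designate every clause.
dims_t make_dims_designated(int32_t w, int32_t h) {
    dims_t d = {.width = w, .height = h, .channels = 0, .extra = 0};
    return d;
}

// Option 2: designate none of them and rely on declaration order.
dims_t make_dims_positional(int32_t w, int32_t h) {
    dims_t d = {w, h, 0, 0};
    return d;
}
```

Both helpers produce the same value. The positional form avoids designated initializers entirely, which are only standard C++ from C++20 onward, so it is the more portable choice if the lines in conv.cpp and depthwise_conv.cpp are patched by hand.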
