Bringing ML to micro:bit is just awesome! Well done also for this fantastic UI.

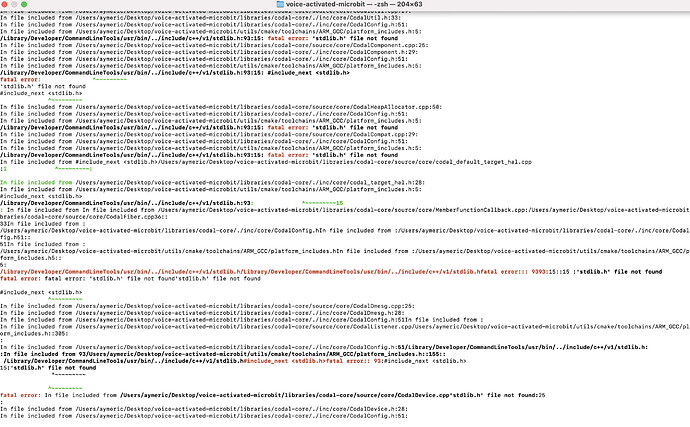

From the Terminal (MacBook Pro M1), running python build.py generated errors.

(I have installed CMake and gcc-arm-none-eabi-9-2020-q2-update and added them to the PATH.)

See attached.

Your help would be very much appreciated, thank you!

Hello @AAAAA5,

I do not have an Apple M1 to try the build.

As a workaround, could you try compiling with Docker to see if it works, please?

Regards,

Louis

@AAAAA5 A bit late (sorry!), but this does not look like it’s using the stdlib in ARM GCC. If you run arm-none-eabi-gcc, does it work?
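A quick way to check whether the build tools are visible on the PATH is sketched below. The tool list is an assumption based on what is mentioned in this thread (CMake, the ARM GCC toolchain, and Ninja, which is reported missing in a later post); the helper name `find_tools` is made up for illustration and is not part of build.py.

```python
import shutil


def find_tools(names):
    """Return a mapping of tool name -> resolved path, or None if not on PATH."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    # Assumed tool list, taken from the tools named in this thread.
    for name, path in find_tools(["cmake", "ninja", "arm-none-eabi-gcc"]).items():
        print(f"{name}: {path or 'NOT FOUND on PATH'}")
```

If a tool is reported as not found, the shell that runs build.py probably cannot see it either, so fixing the PATH is worth trying before digging into the compiler errors.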

Hi,

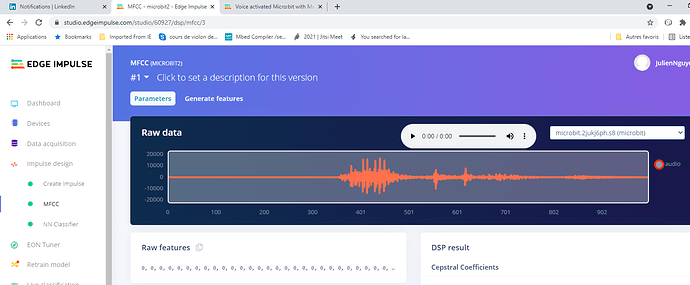

It works great with your example, but when I use my own model with a different keyword, “left” instead of “micro:bit”, I get the build error below. Do you have any idea? Thanks, Julien

C:/Users/juphu/voice-activated-microbit/source/edge-impulse-sdk/CMSIS/NN/Source/PoolingFunctions/arm_max_pool_s8_opt.c:156:22: warning: unused variable ‘in’ [-Wunused-variable]

156 | union arm_nnword in;

| ^~

[2/89] Building CXX object CMakeFiles/MICROBIT.dir/source/edge-impulse-sdk/tensorflow/lite/micro/kernels/cast.cc.obj

[3/89] Building CXX object CMakeFiles/MICROBIT.dir/source/edge-impulse-sdk/tensorflow/lite/micro/kernels/batch_to_space_nd.cc.obj

[4/89] Building CXX object CMakeFiles/MICROBIT.dir/source/edge-impulse-sdk/tensorflow/lite/core/api/op_resolver.cc.obj

[5/89] Building CXX object CMakeFiles/MICROBIT.dir/source/edge-impulse-sdk/tensorflow/lite/micro/kernels/add.cc.obj

[6/89] Building CXX object CMakeFiles/MICROBIT.dir/source/edge-impulse-sdk/tensorflow/lite/core/api/flatbuffer_conversions.cc.obj

ninja: build stopped: subcommand failed.

juphu@DESKTOP-S65DAO8 MINGW64 ~/voice-activated-microbit (master)

Hi @Juph, did you change to your keyword here? https://github.com/edgeimpulse/voice-activated-microbit/blob/master/source/MicrophoneInferenceTest.cpp#L32

And did you replace the edge-impulse-sdk, tflite-model, and model-parameters directories in source with your C++ library export as well?

Hello! Thanks for the awesome tutorial. I am wondering why the window size is 999 ms instead of 1000 ms. It was mentioned that it has something to do with the 11 kHz frequency, but what exactly?

Hello @warp-speed

Indeed, this was not 100% clear to me either; I had to ask @janjongboom too.

When you sample at 11 kHz, you get 11000 samples in one second (1000 ms).

So each sample lasts 1000 / 11000 ≈ 0.090909 ms.

At the time, we used rounded values in the studio, so 0.090909 * 11000 ~= 999.9…, which is just under 1000 ms.

However, now that we use zero padding, it does not matter anymore.
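The arithmetic can be reproduced in a few lines. This is only a sketch: rounding the sample period to six decimal places and truncating the window to whole milliseconds are assumptions chosen to match the numbers in this post, not a description of what the studio actually did internally.

```python
import math

SAMPLE_RATE_HZ = 11_000

# Duration of one sample in milliseconds (0.0909090909...).
period_ms = 1000 / SAMPLE_RATE_HZ

# Assumed rounding step: keep six decimal places, giving 0.090909.
rounded_period_ms = round(period_ms, 6)

# Rebuilding one second of audio from the rounded period falls just short.
window_ms = rounded_period_ms * SAMPLE_RATE_HZ

# Assumed truncation to whole milliseconds.
whole_window_ms = math.floor(window_ms)

print(rounded_period_ms, window_ms, whole_window_ms)
```

With the unrounded period the product is back at 1000 ms (up to floating-point error), so the shortfall comes only from the rounding.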

I hope this is clear.

Regards,

Louis

That’s clear, thanks a bunch louis and jan

Another thing is that you no longer need to downsample your audio files to 11000 Hz yourself. You can now just upload any type of audio and select 11000 Hz in the Create impulse screen. I will put that in the repo.

Hi Jenny and All,

I am trying to build the original project downloaded from GitHub and am facing this error. Could someone help me, please? Thanks.

Utilisateur@PCMICRO-NVVPKDJ MINGW64 ~/voice-activated-microbit (master)

$ python build.py

codal-microbit-v2 is already installed

Set target: codal-microbit-v2

Using target.json (dev version)

Targeting codal-microbit-v2

CMake Error: CMake was unable to find a build program corresponding to “Ninja”. CMAKE_MAKE_PROGRAM is not set. You probably need to select a different build tool.

-- Configuring incomplete, errors occurred!

See also “C:/Users/Utilisateur/voice-activated-microbit/build/CMakeFiles/CMakeOutput.log”.

I installed the missing Ninja; the build now starts but fails a bit later in the process:

c:/progra~2/gnuarm~1/92020-~1/bin/…/lib/gcc/arm-none-eabi/9.3.1/…/…/…/…/arm-none-eabi/bin/ld.exe: cannot find -ladvapi32

collect2.exe: error: ld returned 1 exit status

ninja: build stopped: subcommand failed.

Hi Jenny and All,

I am able to generate the hex file when keeping the same keyword, microbit, for the exercise. Despite the good accuracy (94%), the voice recognition doesn’t work: the micro:bit doesn’t recognize the word “microbit”! Any idea, please? Thanks

Have you cloned the project to your own account and added recordings of your own voice and retrained/deployed to your device? My voice is pretty high pitched compared to many of the recordings in that dataset, and thus doesn’t work for me either without further samples uploaded to the training/testing sets.

Are you able to see the microbit correctly classifying the background noise?

> Have you cloned the project to your own account and added recordings of your own voice and retrained/deployed to your device?

Yes, I did that. I had 1 minute of microbit samples and it didn’t work. Now I have 5 minutes, as suggested by Jan, but it still doesn’t work.

> My voice is pretty high pitched compared to many of the recordings in that dataset, and thus doesn’t work for me either without further samples uploaded to the training/testing sets.
>
> Are you able to see the microbit correctly classifying the background noise?

I have not tested that yet. How do I do it?

Hi @janjongboom, you did a great tutorial, but no one has been able to make it work! I could compile your code and had a working example; however, if I replace your code with the one generated with Edge Impulse Studio, it doesn’t recognize the keyword. It works 100% with the classification test inside Studio. Any idea, @janjongboom? I have 1 minute 14 seconds of microbit keyword audio.

Hi @Juph, Apologies for the delay, I am in the EU timezone.

You can view the serial output of the microbit example and see exactly what the model is classifying from the incoming audio data: open a serial terminal (like PuTTY) on the port your microbit is connected to on your computer, with the baud rate set to 115200.

@janjongboom also notes some troubleshooting steps for improving model performance in the README of this repository, here: https://github.com/edgeimpulse/voice-activated-microbit#poor-performance-due-to-unbalanced-dataset

Hi @jenny,

Indeed, when I say microbit, it classifies it as microbit with 49% confidence. Where in the code can I lower the acceptance threshold to see if it works before adding more keyword data? Thanks for your support. My time zone is Paris.

Predictions (DSP: 107 ms., Classification: 4 ms.):

microbit: 0.49804

noise: 0.00781

unknown: 0.49414
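To experiment with different acceptance thresholds on the host side, without touching the firmware, the serial log above can be parsed with a small script. This is a sketch only: the line format is inferred from the output pasted above, and the function names and threshold values are hypothetical, not the values used in the micro:bit example code.

```python
import re

# Sample copied from the serial output above.
SAMPLE_OUTPUT = """\
Predictions (DSP: 107 ms., Classification: 4 ms.):
microbit: 0.49804
noise: 0.00781
unknown: 0.49414
"""

# Matches lines of the form "<label>: <score>"; the header line does not match.
LINE_RE = re.compile(r"^\s*([\w-]+):\s*([0-9]*\.[0-9]+)\s*$")


def parse_predictions(text):
    """Extract {label: score} from one block of serial output."""
    scores = {}
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match:
            scores[match.group(1)] = float(match.group(2))
    return scores


def detected(scores, keyword, threshold):
    """True if the keyword's score reaches the (hypothetical) threshold."""
    return scores.get(keyword, 0.0) >= threshold


scores = parse_predictions(SAMPLE_OUTPUT)
print(scores, detected(scores, "microbit", 0.5))
```

Here microbit and unknown score almost the same, so lowering the threshold would likely accept many unknown words as well; more varied keyword data is probably the more reliable fix.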

Now I have 5 minutes of microbit data, but it still doesn’t work. I have a warning in the data acquisition section, see below. How can I improve it? Thanks, Julien

Dataset train / test split ratio

Training data is used to train your model, and testing data is used to test your model’s accuracy after training. We recommend an approximate 80/20 train/test split ratio for your data for every class (or label) in your dataset, although especially large datasets may require less testing data.

SUGGESTED TRAIN / TEST SPLIT

80% / 20%

Labels in your dataset

Some classes have a poor train/test split ratio: microbit, noise, unknown. To fix this, add or move samples to the training or testing data.

MICROBIT: 97% / 3% (5m 4s / 10s)

NOISE: 100% / 0% (15m 48s / 0s)

UNKNOWN: 100% / 0% (15m 42s / 0s)

Perform train / test split

Use this option to rebalance your data, automatically splitting items between training and testing datasets. Warning: this action cannot be undone.
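To see how far each class is from the suggested 80/20 split, the durations in the warning can be plugged into a short calculation. This is a sketch: the durations are copied from the warning above and converted to seconds, the helper names are made up for illustration, and it only does the arithmetic, it does not call any Edge Impulse API.

```python
# (train seconds, test seconds) per label, converted from the warning above.
DURATIONS = {
    "microbit": (5 * 60 + 4, 10),
    "noise": (15 * 60 + 48, 0),
    "unknown": (15 * 60 + 42, 0),
}


def train_share(train_s, test_s):
    """Fraction of a class's audio that sits in the training set."""
    return train_s / (train_s + test_s)


def seconds_to_move(train_s, test_s, target_train=0.8):
    """Seconds of audio to move from training to testing to reach the target."""
    total = train_s + test_s
    return max(0.0, train_s - target_train * total)


for label, (train_s, test_s) in DURATIONS.items():
    share = train_share(train_s, test_s)
    move = seconds_to_move(train_s, test_s)
    print(f"{label}: {share:.0%} train, move about {move:.0f} s to testing")
```

Moving roughly that much audio per class into the testing set, by hand or with the split option described above, should clear the warning.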
