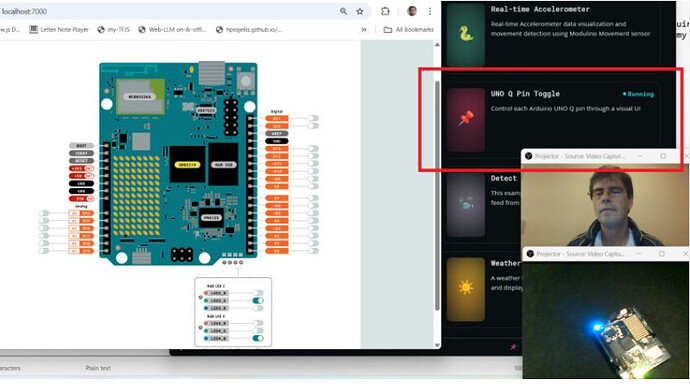

I started with the UNOQ yesterday. I really like the UI pin toggle code that runs out of the box. I will try the machine learning models once I figure out how to record video with two cameras while running a model on the third. For now, can anyone explain the serial monitor? PuTTY can’t see it and App-Lab just ignores it. Do I really need to connect the UART TX and RX pins, like on the Coral-Micro, just to get the serial monitor working?

If it works for you, can you show me your Arduino sketch?

Also, how do I load an Arduino library? It is not obvious in App-Lab.


Hello @Rocksetta glad to see you have multiple cameras running properly!

Could you please be more specific about the error you get and what code you are trying to run?

I was reading the Arduino docs https://docs.arduino.cc/tutorials/uno-q/user-manual/ (the UART parts), but I didn’t see anything specific.

Hi @marcpous My standard blink and serial print code for the PortentaH7 is below. The blink part works fine, but the serial print does not seem to work for me. Does it work for you?

I just noticed I had a fast 115200 baud rate, so I will try again.

```cpp
#include <Arduino.h> // Only needed by https://platformio.org/

void setup() {
  Serial.begin(9600);
  pinMode(LED_BUILTIN, OUTPUT); // try on Portenta LEDB = blue, LEDG or LED_BUILTIN = green, LEDR = red
}

void loop() {
  Serial.println("Serial print works on the Portenta M7 core only. Here is A0 reading: " + String(analogRead(A0)));
  digitalWrite(LED_BUILTIN, LOW); // internal LED LOW = on for onboard LED
  delay(1000); // wait for a second
  digitalWrite(LED_BUILTIN, HIGH);
  delay(3000);
}
```

That is interesting. Let me test that.

A few minutes later …

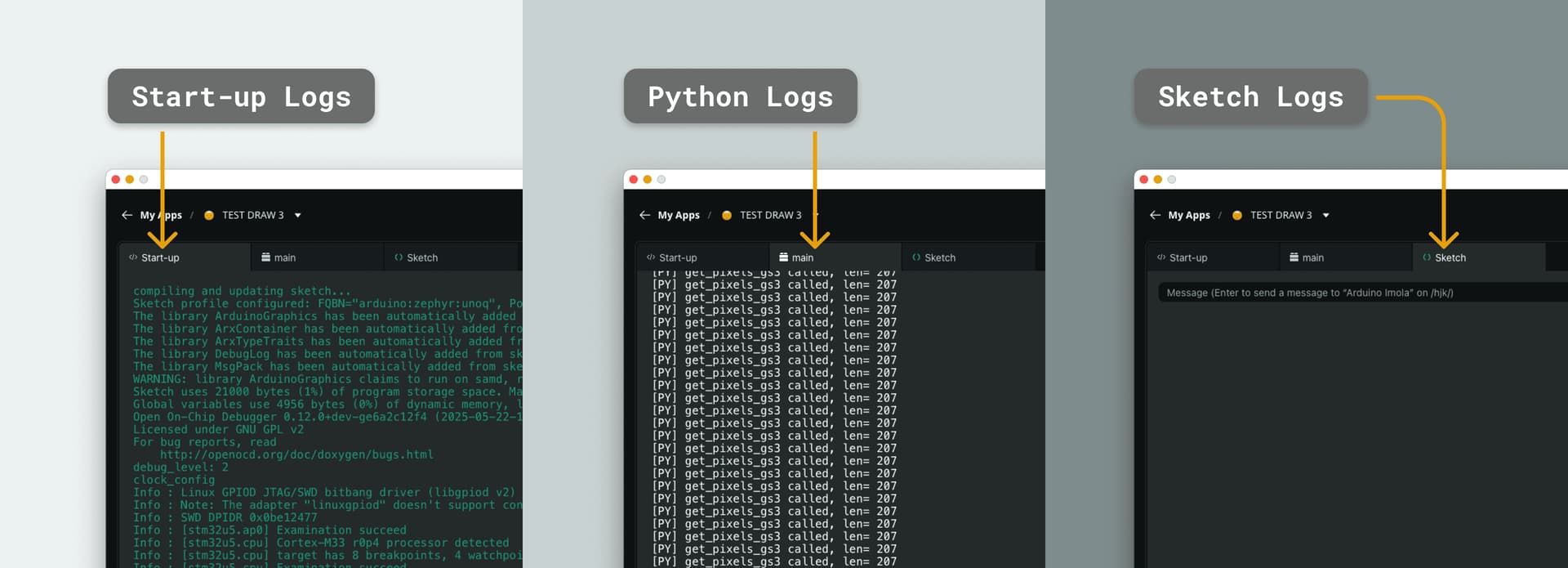

Python logs.

```
Using CPython 3.13.9 interpreter at: /usr/local/bin/python
Creating virtual environment at: .cache/.venv
Activating python virtual environment
Hello World!
Activating python virtual environment
Hello World!
Activating python virtual environment
Hello World!
Activating python virtual environment
Hello World!
Activating python virtual environment
Hello World!
Activating python virtual environment
Hello World!
exited with code 0
```

That was from running the code I posted. The serial monitor is not showing anything. I don’t even know where it got the “Hello World!” from.

Hello @Rocksetta could you please test this?

Check the exited containers with

`docker ps -a`

Note the ID, then check the logs with `docker logs <ID>`

To check the cameras, connect the Arduino UNO Q directly to your laptop, open `adb shell`, and then run `v4l2-ctl --list-devices`

Let’s see if this gives us more information!
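The Docker steps above can also be scripted. This is a minimal sketch, assuming Python 3.9+ and the `docker` CLI are available on the board. The helper names `parse_exited` and `exited_container_logs` are made up for illustration. It asks `docker ps -a` for a tab-separated ID/status listing (using the CLI's `--format` option), keeps the containers whose status starts with `Exited`, and collects the logs of each one.

```python
import subprocess


def parse_exited(ps_output: str) -> list[str]:
    """Return IDs of exited containers from 'ID<TAB>Status' lines."""
    ids = []
    for line in ps_output.splitlines():
        if "\t" not in line:
            continue  # skip blank or malformed lines
        container_id, status = line.split("\t", 1)
        if status.startswith("Exited"):
            ids.append(container_id)
    return ids


def exited_container_logs() -> dict[str, str]:
    """Run the docker CLI and collect logs for every exited container."""
    ps = subprocess.run(
        ["docker", "ps", "-a", "--format", "{{.ID}}\t{{.Status}}"],
        capture_output=True, text=True, check=True,
    )
    logs = {}
    for container_id in parse_exited(ps.stdout):
        result = subprocess.run(
            ["docker", "logs", container_id],
            capture_output=True, text=True,
        )
        # Keep both streams, since a container may log to stdout or stderr
        logs[container_id] = result.stdout + result.stderr
    return logs
```

Calling `exited_container_logs()` on the board returns a dictionary keyed by container ID, which is easier to paste into a forum reply than several separate terminal commands.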

Nothing really interesting with docker ps -a; the docker logs were just the Python logs, and the cameras are running great. I made a video on the UNOQ using a downgraded version of OBS Studio. Surprising how good it is as a Debian machine. There is a bit of flicker, but that is OK. On your end, can you run an Arduino sketch that uses the serial monitor?


AFAIK @Rocksetta there are some bugs that prevent the Serial Monitor in Arduino App Lab from showing the logs. This might be fixed in upcoming versions!

Fingers crossed!

What other interesting things did you discover? Did you try to add your own custom model to the applications?

The Edge Impulse examples are amazing! I am still a bit confused about how to edit them: the bricks, and merging JavaScript, HTML, Python and C++. Code permanence also seems to be random: sometimes I reboot the UNOQ and my Arduino code is still running, other times it boots from scratch.

The capacitive touch sensitivity from the back of the UNOQ pins sent me down a rabbit hole of confusion. Also, by the time I suspected A4 and A5 were reversed, I think Arduino had pushed an update, and I could not replicate what I had found.

I am making lots of really badly done videos in my playlist: https://www.youtube.com/playlist?list=PL57Dnr1H_egsOqQUOiIBio2zXN2rE0QgJ

I am not focused on the video quality or the write-up, just trying to learn as fast as I can.

I am in touch with a few Arduino people who confirmed the Serial Monitor bug. They also explained that the WiFi connectivity requirement allows live updates while things are changing rapidly. (Super irritating: I have to connect to my phone's hotspot just to load App-Lab and then shut down the hotspot.)

I am getting a bit of PTSD from my PortentaH7 experience ~5 years ago. @janjongboom and Daniel S might be the only ones who remember that. Easily thousands of hours of frustration, but worth it, as the course was amazing and easy for high school students (not sure they realized how cutting edge it was), but I don’t even teach with it anymore.

I found my 38 closed and 7 still open Arduino PortentaH7 issues here!

If you solve any issues, tell me and I will probably make a video about it. Nice that the Edge Impulse part is running so well.

Did you try this, @Rocksetta?

Awesome. I will see what I can do.

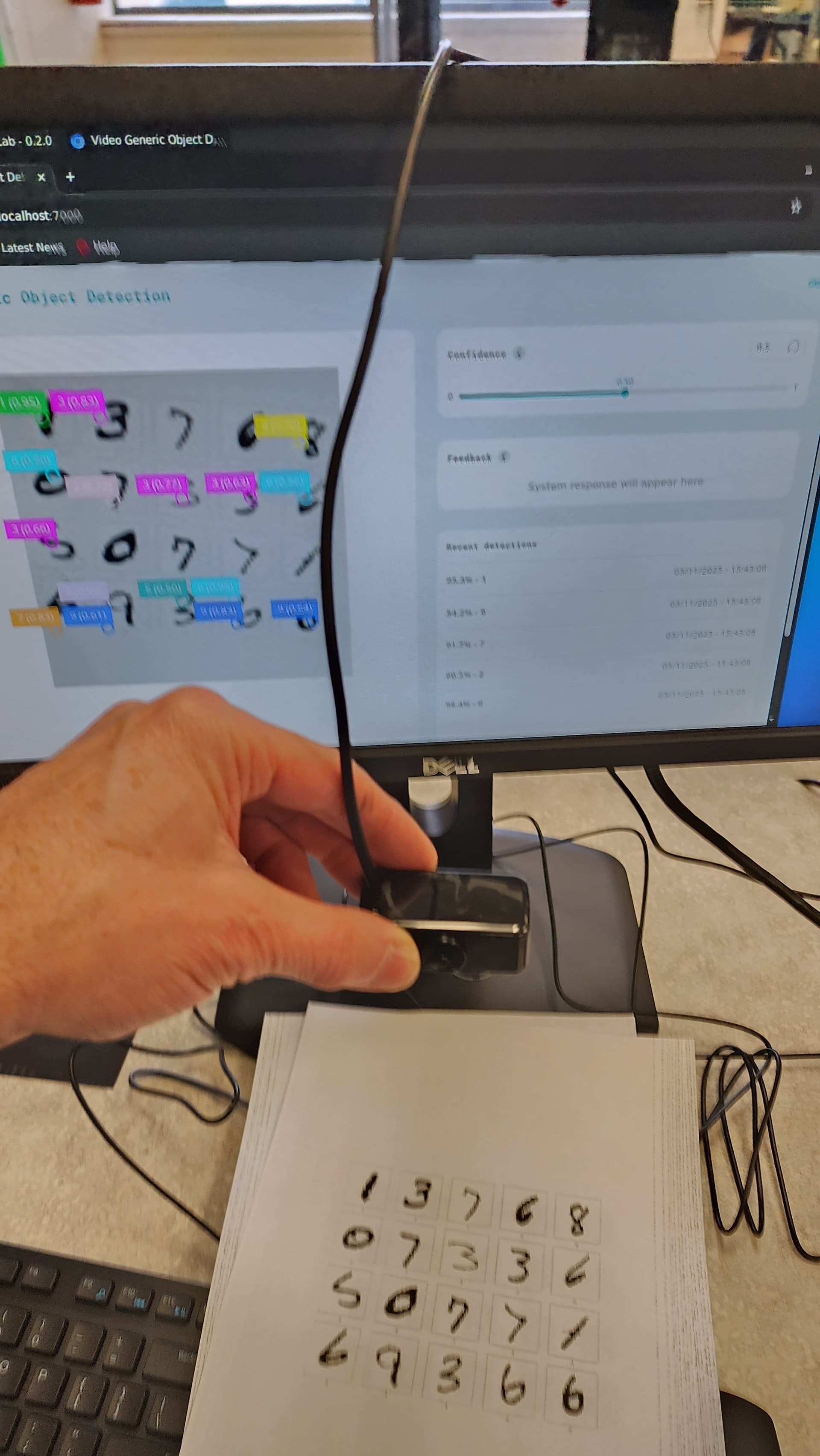

@marcpous Is that example fairly old? Or I guess not old, it just uses SSH while I am using the UNOQ desktop. I do get the general concept, and since I am on the UNOQ desktop, SSH is not an issue. My code seems to run. It did not detect the camera until I rebooted, and now the webpage just does not show up. Mine is a FOMO model, but I think any object detection model should run.

Funny bug, my version of applab (I think 0.2.0 ??) can not edit the app.yaml. Shows it fine just you need to use an editor to edit it. That should be able to be changed soon.

@marcpous Can you make the .eim file you used in the tutorial available for me to try? Your instructions seem clear, everything compiles, and the code runs; the website just does not load. It is probably not the fault of my .eim (it is a very cool MNIST FOMO model), but I would like to check. Any other suggestions for why the web page is not being served? I tried both localhost:7000 and the full IP.

From the webpage all I get is: {"detail":"Not Found"}
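For context, `{"detail":"Not Found"}` is the default 404 body that FastAPI/Starlette-style Python web servers return. A reply like this usually means something is listening on the port but has no route at the requested path. Whether the App Lab web UI is built on such a server is an assumption here, not something confirmed in this thread. The sketch below uses only the standard library. `diagnose` and `probe` are hypothetical helper names, and port 7000 is the one mentioned above.

```python
import json
import urllib.error
import urllib.request


def diagnose(status: int, body: str) -> str:
    """Turn an HTTP status and body into a short troubleshooting hint."""
    try:
        payload = json.loads(body)
    except ValueError:
        payload = None  # body was not JSON (for example, an HTML page)
    if status == 404 and payload == {"detail": "Not Found"}:
        return "server is up, but nothing is served at this path"
    if 200 <= status < 300:
        return "page is being served"
    return f"unexpected response (HTTP {status})"


def probe(url: str, timeout: float = 3.0) -> str:
    """Fetch url and describe what came back; HTTP errors are reported, not raised."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return diagnose(resp.status, resp.read().decode("utf-8", "replace"))
    except urllib.error.HTTPError as err:
        return diagnose(err.code, err.read().decode("utf-8", "replace"))
    except (urllib.error.URLError, OSError) as err:
        return f"no server reachable: {err}"
```

Running `print(probe("http://localhost:7000/"))` on the board separates "nothing is listening" from "the server is running but the page is not mounted at that path", and those two cases point to different fixes.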

Most likely something I messed up.

Run Arduino App Lab - Edge Impulse Documentation

@Rocksetta could you please give more details of what you did?

I tested with various object detection models built for Linux aarch64 (just for the CPU) and it worked well. What did you try?

> Funny bug, my version of applab (I think 0.2.0 ??) can not edit the app.yaml. Shows it fine just you need to use an editor to edit it.

Indeed! At the moment it is not yet possible to edit it with App Lab!

Hi @marcpous I assume I am making a silly mistake, but I more or less understand all the steps, and the darn thing compiles and looks like it is running. It is possible the webcam was not working this morning: the regular person detection program did not run because it didn’t detect the camera. That program gave an error, though, while this code gave none.

Let me try again and I will try to give you better feedback.

Is there an easy way to share your .eim file? Is it on a github that I could download it from?

@marcpous All good, not sure why it wasn’t working, but it works fine now. I should make a video soon. Thanks so much for making the excellent tutorial. I will test a vision classification model, and possibly some other models.

My UnoQ playlist is here, I will add the videos as soon as they are made.

Note: These results are raw; post-processing would give better results. The important point is how fast and small the FOMO vision model is.


@marcpous if I wanted to extrapolate your example to the other models, would this app.yaml be useful or do you see any issues? Would most of the tutorial be similar other than loading different bricks or would there be any major changes? Here is my multi app.yaml

```yaml
name: Multi-Model Detector
description: "Configuration file for various Edge Impulse model types (UNO Q)"
ports:
- arduino:web_ui: {}
icon: 🤖
bricks:
# =========================================================================
# === ACTIVE BRICK: OBJECT DETECTION (using EI_OBJ_DETECTION_MODEL) ===
# =========================================================================
- arduino:video_object_detection:
    variables:
      EI_OBJ_DETECTION_MODEL: /home/arduino/.arduino-bricks/ei-models/<your object detection model name>.eim

# =========================================================================
# === COMMENTED BRICKS (Uncomment one block to switch models) ===
# =========================================================================

# --- 1. Generic Classification (Image/Sensor) ---
# Requires: video_classification brick (often used for visual classification)
#- arduino:video_classification:
#    variables:
#      EI_CLASSIFICATION_MODEL: /home/arduino/.arduino-bricks/ei-models/<your classification model name>.eim

# --- 2. Keyword Spotting (Audio) ---
# Requires: keyword_spotting brick
#- arduino:keyword_spotting:
#    variables:
#      EI_KEYWORD_SPOTTING_MODEL: /home/arduino/.arduino-bricks/ei-models/<your keyword spotting model name>.eim

# --- 3. Audio Classification ---
# Requires: audio_classification brick
#- arduino:audio_classification:
#    variables:
#      EI_AUDIO_CLASSIFICATION_MODEL: /home/arduino/.arduino-bricks/ei-models/<your audio classification model name>.eim

# --- 4. Motion Detection ---
# Requires: motion_detection brick (for IMU/accelerometer models)
#- arduino:motion_detection:
#    variables:
#      EI_MOTION_DETECTION_MODEL: /home/arduino/.arduino-bricks/ei-models/<your motion detection model name>.eim

# --- 5. Video Anomaly Detection ---
# Requires: video_anomaly_detection brick
#- arduino:video_anomaly_detection:
#    variables:
#      EI_V_ANOMALY_DETECTION_MODEL: /home/arduino/.arduino-bricks/ei-models/<your video anomaly model name>.eim
```
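Before filling in the `<your ... model name>` placeholders, it can help to confirm which `.eim` files are actually present in the models folder. This is a minimal sketch using only the Python standard library. The directory is the one used in the app.yaml above, and `list_eim_models` is a hypothetical helper name.

```python
from pathlib import Path

# Directory taken from the model paths in the app.yaml
MODEL_DIR = Path("/home/arduino/.arduino-bricks/ei-models")


def list_eim_models(model_dir: Path = MODEL_DIR) -> list[str]:
    """Return the sorted file names of all .eim models in model_dir."""
    if not model_dir.is_dir():
        return []  # folder missing: nothing has been uploaded yet
    return sorted(path.name for path in model_dir.glob("*.eim"))
```

`print(list_eim_models())` on the board shows the exact file names to paste into the `EI_*_MODEL` variables. An empty list suggests the model was never copied to that folder.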


Also @marcpous the wake word example has an animation using the UNOQ LED matrix. That is awesome, and I feel the vision examples need something similar. For object detection, I am having trouble finding out what the labels are and where in the code I would insert the LED matrix animations. I guess today I will look at the wake word example and see if I can figure it out.

A few moments later…

I understand now. The wake word example uses the RPC bridge to connect Python with the Arduino matrix, but the vision models stay entirely in Python. I would suggest the vision models at least activate LED1_B or LED2_G when a face, person or object is detected. I think Python can do that. Having nothing showing up on the board is irritating. Also, would it be useful to activate the RPC bridge so that Arduino developers get an idea of how Python and Arduino communicate with edge AI models?
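On Linux, LEDs that the kernel exposes appear as directories under `/sys/class/leds`, each with `brightness` and `max_brightness` files. Which entries correspond to LED1_B or LED2_G on the UNO Q is not confirmed here, so the first step is to list what the board offers. This is a minimal sketch using only the standard library, and `list_leds` is a hypothetical helper name.

```python
from pathlib import Path


def list_leds(base: Path = Path("/sys/class/leds")) -> dict[str, int]:
    """Map each LED name under base to its max_brightness value."""
    leds = {}
    if not base.is_dir():
        return leds  # no LED class directory on this system
    for entry in sorted(base.iterdir()):
        max_file = entry / "max_brightness"
        if max_file.is_file():
            leds[entry.name] = int(max_file.read_text().strip())
    return leds
```

Printing `list_leds()` on the board gives the LED names to try. Writing a value between 0 and the reported maximum to the matching `brightness` file then switches that LED, subject to file permissions.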


@marcpous this code works, flashes LED2_G when the object detection code sees a person.

```python
import threading
import time
from datetime import datetime, timezone

from arduino.app_utils import App
from arduino.app_bricks.web_ui import WebUI
from arduino.app_bricks.video_objectdetection import VideoObjectDetection

# Update this path after checking /sys/class/leds on your board
myLedGreenPath = "/sys/class/leds/green:wlan/brightness"


def mySetLedBrightness(path, value):
    """Write a brightness value to the LED's sysfs file."""
    try:
        with open(path, "w") as f:
            f.write(str(value))
    except Exception as e:
        print("Error writing LED brightness:", e)


def blink_loop():
    """Blink the LED once: on for 0.5 s, then off for 0.5 s."""
    mySetLedBrightness(myLedGreenPath, 255)
    time.sleep(0.5)
    mySetLedBrightness(myLedGreenPath, 0)
    time.sleep(0.5)


# Blink once at startup in a separate thread so it does not delay App.run()
threading.Thread(target=blink_loop, daemon=True).start()

# Setup UI & detection
ui = WebUI()
detection_stream = VideoObjectDetection(confidence=0.5, debounce_sec=0.0)

ui.on_message("override_th", lambda sid, threshold: detection_stream.override_threshold(threshold))


def send_detections_to_ui(detections: dict):
    for key, value in detections.items():
        entry = {
            "content": key,
            "confidence": value.get("confidence"),
            "timestamp": datetime.now(timezone.utc).isoformat()
        }
        ui.send_message("detection", message=entry)

        # Blink the green LED whenever a person is detected
        if key == "person":
            blink_loop()


detection_stream.on_detect_all(send_detections_to_ui)

App.run()
```
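One thing to watch in the code above: `blink_loop()` sleeps for about a second and is called from inside the detection callback, so each "person" detection may hold up the callback for that long. That assumes the brick invokes callbacks synchronously, which is not confirmed here. Below is a minimal sketch of a non-blocking variant using only the standard library. `LedBlinker` is a hypothetical helper, not part of App Lab.

```python
import threading
import time


class LedBlinker:
    """Blink an LED via its sysfs brightness file without blocking the caller."""

    def __init__(self, path: str, on_value: int = 255, duration: float = 0.5):
        self.path = path
        self.on_value = on_value
        self.duration = duration
        self._busy = threading.Lock()  # held while a blink is in progress

    def _write(self, value: int) -> None:
        try:
            with open(self.path, "w") as f:
                f.write(str(value))
        except OSError as e:
            print("Error writing LED brightness:", e)

    def _blink_once(self) -> None:
        try:
            self._write(self.on_value)
            time.sleep(self.duration)
            self._write(0)
        finally:
            self._busy.release()

    def trigger(self):
        """Start one blink in the background; return None if one is already running."""
        if not self._busy.acquire(blocking=False):
            return None
        thread = threading.Thread(target=self._blink_once, daemon=True)
        thread.start()
        return thread
```

In the callback, `blinker = LedBlinker(myLedGreenPath)` would be created once, and `blinker.trigger()` would replace the direct `blink_loop()` call. The lock means a burst of detections produces one blink at a time rather than a pile of overlapping threads.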


@Rocksetta I was travelling last week! It’s fantastic what you have managed to do!

What are you testing now?