Question/Issue:

Hello, I am seeing a significant discrepancy on the Nicla Vision board between gyroscope data collected with the Edge Impulse ingestion sketches and data collected through WebUSB. Because of this, the model deployed on the Nicla Vision performs poorly during inference, even though the same model works correctly in live classification through WebUSB.

Project ID:

536170

Context/Use case:

I am using the Nicla Vision to recognize gestures from IMU data. When I collect data via WebUSB and train a model in Edge Impulse, live classification over WebUSB works as expected. However, after deploying the model as an Arduino library on the Nicla Vision, the inference results are incorrect.

Upon investigating, I found that on the Arduino Nano 33 BLE the gyroscope data collected via WebUSB and via the ingestion sketch are consistent. On the Nicla Vision, however, the gyroscope values collected through the Edge Impulse ingestion sketch differ from those collected through WebUSB by a factor of about 100.

Even after accounting for the different IMU models and coordinate systems of the Nano 33 BLE and the Nicla Vision, a discrepancy this large seems abnormal. I verified that on both devices the accelerometer data is read in g (and converted to m/s²) and the gyroscope data is read in degrees/second. The accelerometer data from the two boards agrees reasonably well, but the Nicla Vision gyroscope data shows an unexpectedly large difference.

Summary:

Gyroscope data collected via Edge Impulse ingestion sketches on Nicla Vision differs by a factor of ~100 compared to the data collected through WebUSB. This discrepancy leads to incorrect inference results after deploying the model to Nicla Vision, even though live classification via WebUSB works as expected.

Steps to Reproduce:

- Collect gyroscope data using Edge Impulse ingestion sketches on Nicla Vision.

- Collect gyroscope data using WebUSB on Nicla Vision.

- Train the model using WebUSB data and perform live classification (works as expected).

- Deploy the trained model as an Arduino library on Nicla Vision.

- Run inference on Nicla Vision (incorrect results).

Expected Results:

The gyroscope data collected via ingestion sketches and WebUSB should be consistent, or at least produce comparable results. Inference on Nicla Vision should work similarly to live classification via WebUSB.

Actual Results:

The gyroscope data from ingestion sketches shows a large (~100x) difference compared to data collected via WebUSB on Nicla Vision. This results in incorrect inference when the model is deployed to Nicla Vision.

Reproducibility:

- [x] Always

- [ ] Sometimes

- [ ] Rarely

Environment:

Platform: Nicla Vision, Arduino Nano 33 BLE

Build Environment Details: Arduino IDE 2.3.3, Edge Impulse SDK

OS Version: Windows 10

Edge Impulse Version (Firmware): 1.0.4

Edge Impulse CLI Version: 1.5.0

Project Version: 1.0.5

Custom Blocks / Impulse Configuration: Standard IMU classification block with gyroscope data.

Logs/Attachments:

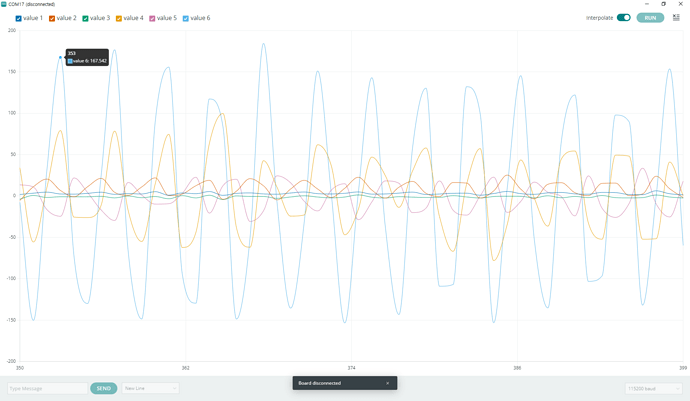

Attached are logs of gyroscope data collected from both ingestion sketches and WebUSB on Nicla Vision, as well as comparative data from the Arduino Nano 33 BLE.

Additional Information:

Despite adjusting for the differences between the IMU models and coordinate systems, the large discrepancy in gyroscope data between the Edge Impulse ingestion sketches and WebUSB remains unexplained. The accelerometer data is correctly converted and consistent, but the gyroscope data differs significantly. I would appreciate any guidance on why the Nicla Vision's ingestion sketch data and WebUSB data differ so drastically.

Attachment: nicla-ingestion sketch