Dear Edge Impulse Experts,

I have run into a problem, which looks like a bug, when I try to detect objects that have the same shape but different colors.

(With grayscale images and objects of different shapes, it works.)

Use Case

- I train the FOMO (Faster Objects, More Objects) MobileNetV2 0.1 model

- Color depth RGB

- Objects: same shape (e.g. simple cylinders) but in different colors

- On the desktop, using Launch in browser in Edge Impulse (or via the QR code), it works perfectly: the neural network detects objects of the same shape in different colors.

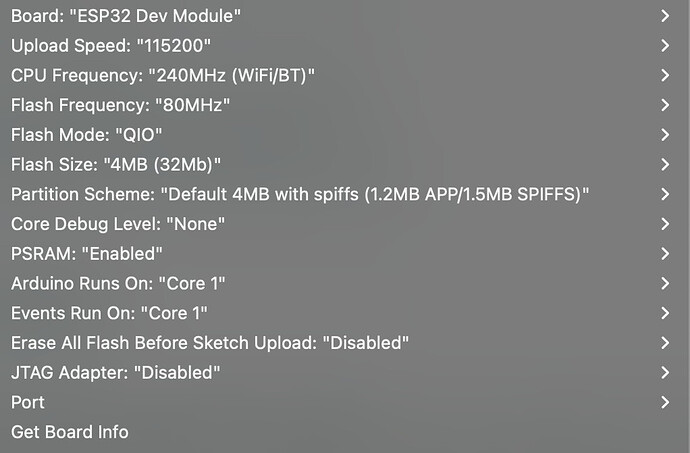

So the neural network itself works perfectly.

- However, the software that Edge Impulse generates and builds does not work on the ESP-EYE microcontroller when RGB is selected. I tried different build settings and got different issues.

With different settings in the Deployment section:

- Enable EON Compiler set or unset, Quantized (int8), RGB → it builds and runs on the ESP-EYE, but object detection does not work at all. The result is always "No object detected". (I think something goes wrong during quantization, e.g. in the float → int8 conversion.)

- Enable EON Compiler set or unset, Unoptimized (float32), RGB → build error in the code generated by Edge Impulse:

…\src\edge-impulse-sdk\tensorflow\lite\micro\micro_graph.cpp" -o "

…\edge-impulse-sdk\tensorflow\lite\micro\micro_graph.cpp.o"

…\src\edge-impulse-sdk\tensorflow\lite\micro\kernels\softmax.cpp: In function ‘void tflite::{anonymous}::SoftmaxQuantized(TfLiteContext*, const TfLiteEvalTensor*, TfLiteEvalTensor*, const tflite::{anonymous}::NodeData*)’:

…\src\edge-impulse-sdk\tensorflow\lite\micro\kernels\softmax.cpp:301:14: error: return-statement with a value, in function returning ‘void’ [-fpermissive]

return kTfLiteError;

- I used only the generated code (the .zip library and the template file in the Arduino IDE) while reproducing these issues.
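To make the quantization suspicion above more concrete, here is a minimal sketch of the usual affine int8 mapping, q = round(x / scale) + zero_point, clamped to [-128, 127]. The `QuantParams` struct, the function names, and the example scale and zero point are my own assumptions for illustration. They are not taken from my model or from the Edge Impulse SDK. The sketch shows only one way color information could be lost: if the inputs are not in the range the parameters expect, most values saturate at 127.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Hypothetical quantization parameters; real values are stored in the model.
struct QuantParams {
    float scale;
    int32_t zero_point;
};

// Affine quantization: q = round(x / scale) + zero_point, clamped to int8.
int8_t quantize(float x, QuantParams p) {
    long q = std::lround(x / p.scale) + p.zero_point;
    q = std::max(-128L, std::min(127L, q));  // saturate to the int8 range
    return static_cast<int8_t>(q);
}

// Inverse mapping: x = (q - zero_point) * scale.
float dequantize(int8_t q, QuantParams p) {
    return static_cast<float>(static_cast<int32_t>(q) - p.zero_point) * p.scale;
}
```

With the assumed parameters `{1.0f / 255.0f, -128}`, inputs normalized to [0, 1] use the whole int8 range, while raw channel values such as 50.0f and 200.0f both saturate to 127, so different colors would become indistinguishable. I do not know whether this is what actually happens in the generated code.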

My questions are as follows:

- Is building RGB TinyML code not yet supported for the ESP-EYE?

- Why do I get the build error above in the generated code? Maybe I forgot to set something.
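Regarding the second question, as far as I understand the compiler message, a function declared as returning `void` contains a `return` with a value (`return kTfLiteError;`). The sketch below reproduces that pattern in isolation and shows two ways it could be made consistent. The `Status` enum and both function names are hypothetical stand-ins. This is not the SDK code, and I am not suggesting it is the right fix for `softmax.cpp`.

```cpp
// Hypothetical stand-in for a status enum such as TfLiteStatus.
enum Status { kOk = 0, kError = 1 };

// Pattern the compiler rejects (kept as a comment so this sketch compiles):
//
//   void SoftmaxLike(int type) {
//       if (type != 0) return kError;  // return-statement with a value
//   }

// Variant A: the declared return type matches what the body returns.
Status SoftmaxReturningStatus(int type) {
    if (type != 0) {
        return kError;  // unsupported type
    }
    return kOk;
}

// Variant B: keep the void signature and report failure via an out-parameter.
void SoftmaxReturningVoid(int type, bool* failed) {
    *failed = (type != 0);
    if (*failed) {
        return;  // plain return, no value
    }
    // ... the actual computation would go here ...
}
```

The `[-fpermissive]` tag in the message suggests that GCC would downgrade this error to a warning if that flag were passed, but I have not tried that, and it would only hide the mismatch.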

Best regards,

Lehel