Hi Jim,

I will also try the following training set:

- images created by the ESP32

- 320x240 pixels or lower resolution

- I upload these images into EI

Best regards,

Lehel

Hi Lehel,

Please check your forum inbox; I have sent over a short recording of my setup showing reliable detection in RGB for the ObjDetnRedBlackCylinder1 project. I will clone the ObjDetnRedBlackCylinder project and train it with RGB to verify. I hope this helps with debugging.

YOLOv5 is available as a Community block under Object Detection, though you may struggle to fit it onto MCU hardware, and it cannot be used in conjunction with FOMO.

Hi Jim,

Do you mean this screenshot? Or what do you mean by the forum inbox?

…

I will check, thank you.

Do you mean that it detects not only that there is an object but also its correct type, for example red-black?

With greyscale training it detects the objects, but the type, which depends only on the colors, is wrong in most cases. This is understandable, because in a greyscale image red and black, for example, look very similar, especially if the lighting conditions are not good.
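To illustrate why red and black merge in greyscale, here is a minimal C++ sketch assuming the common ITU-R BT.601 luma weights (0.299, 0.587, 0.114). The `rgb_to_grey` helper is hypothetical, and Edge Impulse's own greyscale conversion may use different weights. With these weights, a dimly lit red pixel such as (90, 10, 10) maps to a grey level of 34, while a dark grey/black pixel (30, 30, 30) maps to 30 (both pixel values are illustrative), so the two become nearly indistinguishable once the color information is gone.

```cpp
#include <cstdint>

// Hypothetical helper: convert one RGB pixel to an 8-bit grey level using
// ITU-R BT.601 luma weights. This is an assumed conversion, not necessarily
// the one Edge Impulse applies when an impulse is set to greyscale.
uint8_t rgb_to_grey(uint8_t r, uint8_t g, uint8_t b) {
    float y = 0.299f * r + 0.587f * g + 0.114f * b;
    return static_cast<uint8_t>(y + 0.5f);  // round to the nearest grey level
}
```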

I agree that if the color does not matter and the objects can be distinguished by shape, then it works, because greyscale training can be used.

I see the problem only in the color case, when I have to train in RGB mode instead of greyscale mode.

Best regards,

Lehel

Hi Lehel,

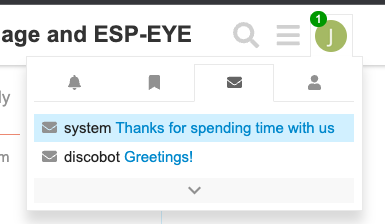

You can find your forum inbox in the top right corner:

There should be a private message from me with a link to a screen recording; I didn’t want to share your project details publicly.

Yes, the deployment was reliably distinguishing between red-red and red-black. I have also tested your ObjDetnRedBlackCylinder model with the full dataset by retraining it as an RGB model, and it also works as expected, reliably distinguishing between all the cylinder types on the ESP-EYE. I’ve sent screen recordings of both via the private message. I hope this is helpful!

Many thanks,

Jim

Hi Jim,

Thank you very much for your tests. I checked your videos.

Yes, everything works correctly if you use Launch in Browser for testing; I know that.

But if you build the Arduino library, add it to the Arduino IDE, run your framework program, and check the serial port while you show the picture to the ESP-EYE, you will see on the serial port that it does not detect any objects in the RGB case.

That is my issue.

It was also suspicious to me that on the Edge Impulse site everything works when launching in the browser. I used it with a webcam.

I think it runs the RGB float model rather than the quantized (int8) version, which is why everything works so perfectly.
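For context, the "quantized/int version" refers to int8 quantization. The minimal C++ sketch below shows the affine scheme TensorFlow Lite uses, real = scale * (q - zero_point); the helper names are hypothetical, and the `scale` and `zero_point` values you pass in would come from the model, so any values used with it here are illustrative assumptions. Each value is rounded to one of 256 levels, so the int8 model's outputs can differ slightly from the float model's.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Affine int8 quantization in the style of TensorFlow Lite:
//   q     = clamp(round(x / scale) + zero_point, -128, 127)
//   x_hat = scale * (q - zero_point)
// scale and zero_point are per-tensor parameters stored in the model.
int8_t quantize(float x, float scale, int32_t zero_point) {
    int32_t q = static_cast<int32_t>(std::lround(x / scale)) + zero_point;
    q = std::max<int32_t>(-128, std::min<int32_t>(127, q));  // saturate to int8 range
    return static_cast<int8_t>(q);
}

// Map an int8 value back to an approximate real value.
float dequantize(int8_t q, float scale, int32_t zero_point) {
    return scale * static_cast<float>(static_cast<int32_t>(q) - zero_point);
}
```

For an input normalized to 0..1, a typical choice is scale = 1/255 and zero_point = -128, which maps 0.0 to -128 and 1.0 to 127; the round-trip error is then at most about scale/2.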

Anyway, how did you connect your ESP-EYE to Launch in Browser?

Best regards,

Lehel

Hi Lehel,

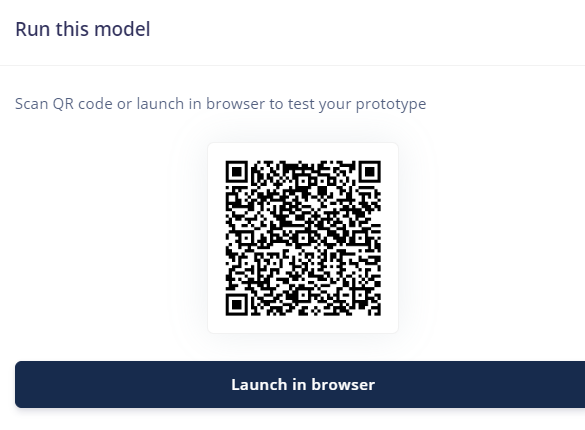

These screen recordings are not running the model in my browser, they are running on my ESP-EYE. Once you have downloaded the ESP-EYE firmware option from the Deployment page of the model:

You can then use our edge-impulse-cli tool to connect to the ESP-EYE and view the model output in your browser. This lets you see how the model performs directly on the ESP-EYE. Once the CLI tool is installed, run `edge-impulse-run-impulse --debug` in your terminal and open the link that is displayed to see the model running. The model runs on the ESP-EYE itself, not in your browser, and the results should be the same as when building via Arduino.

I am going on leave tomorrow until the start of January, but I will be happy to share another screen recording of the model running via Arduino in the new year.

Many thanks,

Jim

Hi Jim,

Happy New Year!

Thank you very much for your videos and your description.

You test it differently from me. I have not used this method; I will try it.

Could you also test the following method, please:

Sometimes there are issues with the Arduino IDE (very slow compiling, going offline, etc.). I do not know why, and it is annoying. If you have such issues, you can also use Visual Studio Code.

Without any changes to your Edge Impulse code/library, it does not detect anything in RGB mode for me.

I look forward to seeing your results.

Best regards,

Lehel

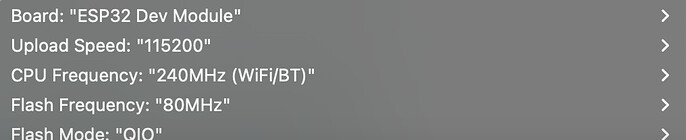

@edghba I have recorded myself testing with the above settings (no changes), and I am seeing detection as expected. You’ll find the video in your private forum messages as before. Please review it and see whether there are any differences.

Many thanks,

Jim

Hi Jim,

Thank you for your video and for retesting. According to your video, you did the same as me.

At the moment I do not know what the difference could be in my case, because I do the same as you.

I will check and investigate it again and let you know.

Best regards & thanks,

Lehel

Hi Jim,

I noticed in your video that your generated sketch includes v1:

```cpp
#include <ObjDetnRedBlackCylinder_v1_inferencing.h>
```

In my case it is without v1:

```cpp
#include <ObjDetnRedBlackCylinder_inferencing.h>
```

Why is there this difference when we created the .zip in the same way? Could it be a problem?

I retested with exactly the same settings that you proposed on December 7, 2023 and that I saw in your video.

Best regards,

Lehel

Hi Jim,

Could you please share the code that you generated with EI and that gave good detection for you?

I would like to test it.

Unbelievably, for me the detection performance is poor with 48x48 images, and with 96x96 images it does not work at all, as I also wrote in my earlier message.

As you can see, I have also created a new training set, but the results are the same.

Furthermore, in my experience the neural networks used for object detection are robust enough.

So object detection should not require exactly the same environment and lighting conditions as during training.

But in my case the robustness is also very poor.

Thank you in advance.

Best regards,

Lehel

Hi Lehel,

I’ve shared the Arduino library and firmware tested above with you via private message. The v1 version comes from training your ObjDetnRedBlackCylinder model with the full dataset at 96x96. You hit the Community tier compute limits for this, so I trained it for you.

I have just tested your 48x48 model with images on my computer screen and it detected the blocks fairly reliably. Let me know how your performance compares when you deploy the exact models from the videos I shared. I really recommend trying the full firmware and running `edge-impulse-run-impulse --debug`; when the CLI runner starts, follow the link it prints to view the output from your device live on your computer screen.

Many thanks,

Jim

Hello,

I am a student in Informatics, and we are currently running a project with TinyML. For this purpose we wanted to build a sorting machine that can detect colors and objects and sort them based on the classification.

I am facing exactly the same issue as Lehel. My model runs perfectly in the web and on mobile devices, but as soon as I deploy it to the MCU (Seeed XIAO ESP32S3), it no longer detects the objects the way it did when tested via Edge Impulse.

At first we had two circles (orange and green) and two rectangles (blue and yellow). After testing every setting, I finally gave up and chose to train the model with objects that differ more from each other, so I am now testing with Star Wars Lego figures. I can get this model working for 2 of the 4 figures, but not more.

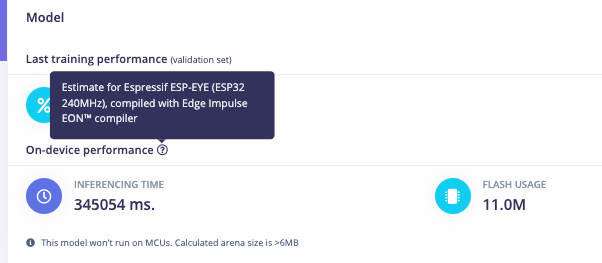

I first tried the FOMO models, but wanted to test MobileNetV2. The problem is that this model is not available as an optimized, quantized (int8) version. When I try to use the unoptimized float model, I get a compilation error:

```
Sorting_inferencing/src/edge-impulse-sdk/tensorflow/lite/micro/kernels/softmax.cpp:301:14: error: return-statement with a value, in function returning ‘void’ [-fpermissive]
```

Can someone help me with this?

Here is my public EI project: Sorting - Dashboard - Edge Impulse

Hi Daniel,

The attached project, which uses MobileNetV2, needs more RAM than is available on an ESP32. We developed the FOMO architecture to let you fit object detection models onto resource-constrained devices such as the ESP32.

I would also point out that you’re training the model with quite a small dataset: there are fewer than 30 instances of each object in your training set, and increasing this will improve your performance.

Hi Jim,

I have tried older Node.js versions, and this as well, but the error is the same. I also tried the Node.js version mentioned in the previous post, but I get the same error. So I cannot yet connect to Edge Impulse as you described. Which Node.js version do you have? Do you have any idea?

Thank you for your support.

Best regards,

Lehel

Updating here to note some troubleshooting I have done offline. For the ESP-EYE there appears to be an issue with the Arduino library: the Espressif camera library passes images to your impulse in Blue-Green-Red (BGR) order when they should be Red-Green-Blue (RGB). We have identified this and raised it with Espressif, and we have patched it ourselves in our prebuilt firmware. That is why your model works well with the Edge Impulse standalone firmware deployment for the ESP-EYE but not so well with Arduino, particularly for your images of red objects.
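One way to confirm the channel order on your own board is to capture a frame of a plainly red object and compare the first and third byte of each pixel. The C++ helper below is a hypothetical debugging aid, not part of the Edge Impulse SDK or the Espressif camera library; it assumes a packed 3-bytes-per-pixel buffer such as the sketch's `snapshot_buf`, and the factor-of-two threshold is an arbitrary choice.

```cpp
#include <cstddef>
#include <cstdint>

enum class ChannelOrder { RGB, BGR, Unclear };

// Hypothetical helper: for a frame showing a mostly red scene, decide whether
// byte 0 (red in RGB order) or byte 2 (red in BGR order) carries the red channel.
ChannelOrder guess_order_for_red_scene(const uint8_t *buf, size_t pixel_count) {
    uint64_t sum0 = 0;  // sum of the first byte of every pixel
    uint64_t sum2 = 0;  // sum of the third byte of every pixel
    for (size_t i = 0; i < pixel_count; i++) {
        sum0 += buf[i * 3];
        sum2 += buf[i * 3 + 2];
    }
    // Require a clear (arbitrary 2x) margin before committing to an answer.
    if (sum0 > sum2 * 2) return ChannelOrder::RGB;
    if (sum2 > sum0 * 2) return ChannelOrder::BGR;
    return ChannelOrder::Unclear;
}
```

On the device you could call it with the pixel count of the captured frame right after the frame is converted and print the result to the serial port.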

This issue lies with the Espressif Arduino libraries. We will raise it with Espressif again and try to get it fixed, but in the meantime I recommend using our ESP-EYE firmware option along with our CLI tool to see the results. I hope you can get the CLI installed. If you cannot, you can still use the Serial Monitor in the Arduino IDE (or PuTTY/Minicom) to view the serial output of our ready-built firmware:

You can use AT commands to start different functions. To list them all, type “AT+HELP” in the input bar at the top and press Enter. To run the impulse continuously, as in the screenshot above, type “AT+RUNIMPULSECONT”. You’ll then see the predictions as expected.

A workaround is to edit the ei_camera_get_data function in your Arduino sketch to swap the blue and red channels:

```cpp
static int ei_camera_get_data(size_t offset, size_t length, float *out_ptr)
{
    // snapshot_buf holds 3 bytes per pixel (B, G, R order here),
    // so recalculate the pixel offset into a byte index
    size_t pixel_ix = offset * 3;
    size_t pixels_left = length;
    size_t out_ptr_ix = 0;

    while (pixels_left != 0) {
        // Swap BGR to RGB here: byte 2 is red, byte 1 is green, byte 0 is blue
        out_ptr[out_ptr_ix] = (snapshot_buf[pixel_ix + 2] << 16) + (snapshot_buf[pixel_ix + 1] << 8) + snapshot_buf[pixel_ix];

        // go to the next pixel
        out_ptr_ix++;
        pixel_ix += 3;
        pixels_left--;
    }

    // and done!
    return 0;
}
```
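To check the swap off-device, here is a host-side C++ copy of the same loop as a minimal sketch. `bgr888_to_packed_rgb` is a hypothetical name, and its `bgr` argument stands in for `snapshot_buf`, assumed to hold pixels in B, G, R byte order. A red pixel stored as the bytes {0, 0, 255} is packed as 0xFF0000 (red), whereas the unswapped loop would pack it as 0x0000FF (blue). A black pixel {0, 0, 0} packs to 0 either way, which is why black objects can be classified correctly even without the fix.

```cpp
#include <cstddef>
#include <cstdint>

// Host-side copy of the swapped ei_camera_get_data loop.
// Each output float carries one pixel packed as 0xRRGGBB.
void bgr888_to_packed_rgb(const uint8_t *bgr, size_t offset, size_t length, float *out) {
    size_t pixel_ix = offset * 3;  // byte index of the first requested pixel
    for (size_t i = 0; i < length; i++) {
        uint32_t r = bgr[pixel_ix + 2];  // red is the third byte in BGR order
        uint32_t g = bgr[pixel_ix + 1];
        uint32_t b = bgr[pixel_ix];      // blue is the first byte in BGR order
        out[i] = static_cast<float>((r << 16) | (g << 8) | b);
        pixel_ix += 3;
    }
}
```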

Hi Jim,

Thank you very much for your support in uncovering/solving the issue.

Yes, as we discussed, the BGR-to-RGB conversion was missing (so red became blue). This is why, for example, the black-black objects worked well while the others did not (or not really): black is the same in both channel orders.

(I once ran into the same blue-image issue in a completely different, non-EI ESP32-EYE project, when I forgot this conversion.)

Some final observations:

Best regards,

Lehel

I have a similar setup. I want to use an ESP32-S2 connected to an Arducam OV5642 to capture images, and I am developing a human detection model. I have tried all the variations, but the accuracy is very poor. This is my project link: lab_human_detection - Dashboard - Edge Impulse. Could you tell me whether my training data is wrong? I can also share my code if you wish; I am using PlatformIO to flash the Arduino files.

@ajadhav I’ve taken a look at your project, and your testing accuracy is significantly lower than your training accuracy, which suggests that your model is overfitting to the training set. Options such as the “data augmentation” checkbox can help.
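As a rough illustration of what image augmentation does, here is a generic C++ sketch of two common transforms, a horizontal flip and a brightness change, on an RGB888 row-major buffer. This is not Edge Impulse's implementation, and the function names are hypothetical. For object detection, any geometric transform such as a flip must also be applied to the bounding-box labels.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Images are RGB888, row-major, width * height * 3 bytes.

// Mirror the image left-to-right.
std::vector<uint8_t> flip_horizontal(const std::vector<uint8_t> &img, size_t width, size_t height) {
    std::vector<uint8_t> out(img.size());
    for (size_t y = 0; y < height; y++) {
        for (size_t x = 0; x < width; x++) {
            size_t src = (y * width + x) * 3;
            size_t dst = (y * width + (width - 1 - x)) * 3;
            for (size_t c = 0; c < 3; c++) out[dst + c] = img[src + c];
        }
    }
    return out;
}

// Scale brightness by `factor`, rounding and clamping each channel to 0..255.
std::vector<uint8_t> scale_brightness(const std::vector<uint8_t> &img, float factor) {
    std::vector<uint8_t> out(img.size());
    for (size_t i = 0; i < img.size(); i++) {
        float v = img[i] * factor + 0.5f;
        out[i] = static_cast<uint8_t>(std::min(255.0f, std::max(0.0f, v)));
    }
    return out;
}
```

Applying such transforms with random parameters each epoch shows the model more variation than the raw dataset contains, which is what helps against overfitting.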

More training data which is representative of the environment you want to test in will also help. Here are some further tips for increasing model performance: