So @janjongboom, could you have a quick look at my code for the Nano33BleSense using the OV7670 camera? The code is at the bottom of this post.

It is close to working, but never gives a positive result. The impulse I am using is here. I have successfully used this same impulse exported for the Himax WE-I, the PortentaH7 with OpenMV, and the PortentaH7 using only C++, but I just can’t get any results on the Nano33BleSense.

I can print out the “signal”, which shows an accurate 48x48 full-color image, but I am not sure it is being passed to the classifier properly by this call (which looks correct):

EI_IMPULSE_ERROR res = run_classifier(&signal, &result, false /* debug */);
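For context, here is a minimal sketch of the kind of callback that could feed camera pixels to the classifier. It assumes the camera delivers RGB565 pixels already in host byte order (some camera libraries deliver swapped bytes, which would need correcting first) and that the impulse expects one float per pixel holding a packed 0xRRGGBB value. The names `frame_rgb565`, `rgb565_to_rgb888`, and `get_camera_data` are hypothetical and are not taken from the code in this post.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical frame buffer: 48x48 RGB565 pixels in host byte order (assumption).
constexpr size_t kWidth = 48;
constexpr size_t kHeight = 48;
static uint16_t frame_rgb565[kWidth * kHeight];

// Expand one RGB565 pixel to a packed 0xRRGGBB value.
// The low bits of each channel stay zero, as in the dump shown in this post.
inline uint32_t rgb565_to_rgb888(uint16_t p) {
    uint32_t r = (p >> 11) & 0x1F;  // 5 bits of red
    uint32_t g = (p >> 5) & 0x3F;   // 6 bits of green
    uint32_t b = p & 0x1F;          // 5 bits of blue
    return ((r << 3) << 16) | ((g << 2) << 8) | (b << 3);
}

// Has the (offset, length, out_ptr) shape of a signal data callback.
// Each output float carries one packed 0xRRGGBB pixel; every 24-bit
// value is exactly representable in a 32-bit float.
int get_camera_data(size_t offset, size_t length, float *out_ptr) {
    if (offset + length > kWidth * kHeight) {
        return -1;  // request runs past the end of the frame
    }
    for (size_t i = 0; i < length; i++) {
        out_ptr[i] = static_cast<float>(rgb565_to_rgb888(frame_rgb565[offset + i]));
    }
    return 0;
}
```

If a callback like this is used, the signal's total length would need to equal the number of pixels (48 x 48 = 2304 here), not the number of bytes in the frame buffer.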

I really wanted to solve the issue on my own, but it is presently not making sense. Everything compiles and runs, but it just is not getting any positive readings. Can you see anything glaringly obvious wrong with the code?

Here is my serial printout, followed by the RGB888 image data.

Edge Impulse standalone inferencing (Arduino)

run_classifier returned: 0

Predictions (DSP: 4 ms., Classification: 706 ms., Anomaly: 0 ms.):

[0.00000, 0.99609]

is-microcontroller: 0.00000

unknown: 0.99609

0xa0a488, 0xa0a488, 0xa0a490, 0xa0a490, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a8a0, 0xa0a8a0, 0xa0aca0, 0xa8a8a8, 0xa8a8a8, 0xa8a8a0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8aca0, 0xa0aca0, 0xa0aca0, 0xa8a8a0, 0xa8a8a0, 0xa0a8a0, 0xa0a898, 0xa0a898, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a488, 0xa0a890, 0xa0a888, 0xa0a488, 0xa0a488, 0xa0a488, 0xa0a488, 0xa0a488, 0xa0a488, 0xa0a488, 0xa0a888, 0xa0a888, 0xa0a488, 0xa0a488, 0xa0a890, 0xa0a890, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a8a0, 0xa8a8a0, 0xa8a8a0, 0xa8a8a0, 0xa8a8a8, 0xa8aca8, 0xa8a8a8, 0xa8a8a8, 0xa8aca8, 0xa8a8a8, 0xa8aca8, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8aca8, 0xa8aca8, 0xa8a8a0, 0xa8a8a0, 0xa8a8a0, 0xa8a8a0, 0xa8a898, 0xa8a898, 0xa8ac98, 0xa0ac98, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a488, 0xa0a488, 0xa0a488, 0xa0a488, 0xa0a488, 0xa0a888, 0xa0a490, 0xa0a490, 0xa0a890, 0xa0a890, 0xa0a490, 0xa0a490, 0xa0a890, 0xa0a890, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa8aca0, 0xa8aca0, 0xa8aca8, 0xa8aca8, 0xa8a8a8, 0xa8a8a8, 0xa8aca8, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8aca8, 0xa8aca8, 0xa8aca0, 0xa8a8a0, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8aca0, 0xa8aca0, 0xa8a8a0, 0xa0a8a0, 0xa0ac98, 0xa8a898, 0xa0a898, 0xa0a898, 0xa0a890, 0xa0a890, 0xa0a488, 0xa0a488, 0xa0a488, 0xa0a488, 0xa0a490, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0ac98, 0xa0a898, 0xa8a8a0, 0xa8a8a0, 0xa8a8a0, 0xa8a8a8, 0xa8a8a8, 0xa8acb0, 0xa8acb0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8a8a8, 0xa8aca8, 0xa8aca8, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8a8a0, 0xa0aca0, 0xa8ac98, 0xa0ac98, 0xa0a898, 0xa8a898, 0xa8a890, 0xa0a890, 0xa0a888, 0xa0a888, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a8a0, 0xa8a898, 0xa0a898, 0xa0a8a0, 0xa8a8a0, 
0xa0a8a0, 0xa8a8a0, 0xa8aca0, 0xa8aca8, 0xa8aca8, 0xa8a8a8, 0xa8acb0, 0xa8acb0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa0ac98, 0xa0a898, 0xa8a898, 0xa8a898, 0xa8a898, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0a890, 0xa0ac90, 0xa0ac98, 0xa0ac98, 0xa8ac98, 0xa0ac98, 0xa0a8a0, 0xa0aca0, 0xa8a898, 0xa8a898, 0xa8a8a0, 0xa8a8a0, 0xa8a8a0, 0xa8aca0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8acb0, 0xa8acb0, 0xa8a8a8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca0, 0xa8aca0, 0xa8aca8, 0xa8a8a8, 0xa8a8a0, 0xa8a8a0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa0ac98, 0xa0a898, 0xa0a890, 0xa0ac90, 0xa0a890, 0xa8a890, 0xa0a890, 0xa0a890, 0xa0a898, 0xa0ac98, 0xa0ac98, 0xa0ac98, 0xa8aca0, 0xa0aca0, 0xa0aca0, 0xa0aca8, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8acb0, 0xa8acb0, 0xa8aca8, 0xa8aca8, 0xa8acb0, 0xa8acb0, 0xa8aca8, 0xa8acb0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8a8a8, 0xa8aca0, 0xa8aca0, 0xa8a8a0, 0xa8a8a0, 0xa8aca0, 0xa8aca0, 0xa8a8a0, 0xa8a8a0, 0xa8ac98, 0xa8ac98, 0xa8a898, 0xa8a898, 0xa8a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa0a898, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8a8a8, 0xa8a8a8, 0xa8aca8, 0xa8aca8, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8a8a8, 0xa8aca8, 0xa8aca8, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8a8a0, 0xa8a898, 0xa8a898, 0xa8a898, 0xa8a898, 0xa0ac98, 0xa0ac98, 0xa8ac98, 0xa8aca0, 0xa8aca0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 
0xa8acb0, 0xa8acb0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8aca0, 0xa8aca0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8acb0, 0xa8a8b0, 0xa8a8a8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8b0b0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca0, 0xa8aca0, 0xa8aca8, 0xa8aca8, 0xa8aca0, 0xa8aca0, 0xa8b0a0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8aca0, 0xa8aca0, 0xa8acb0, 0xa8acb0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8b0b0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8aca8, 0xa8aca8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8aca8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8b0b0, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8aca8, 0xa8aca8, 0xa8b0a8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8b0a8, 0xa8b0a8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8aca0, 0xa8aca0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xb0b0b0, 0xa8b0b0, 0xa8acb0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8acb0, 0xa8acb0, 0xa8b0a8, 0xa8b0b0, 
0xa8b0a8, 0xa8b0a8, 0xa8aca8, 0xa8aca8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8aca8, 0xa8aca8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8b0b0, 0xa8b0b0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b8, 0xa8b0b0, 0xa8acb0, 0xa8b0b0, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0a8, 0xb0b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8aca8, 0xa8aca8, 0xb0b0a8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8b0a0, 0xa8b0a0, 0xa8b0a0, 0xa8b0a0, 0xa8aca0, 0xa8aca0, 0xa8b098, 0xa8b098, 0xa8ac98, 0xa8ac98, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8acb0, 0xb0b0b0, 0xb0b0b0, 0xb0acb0, 0xb0acb0, 0xa8b0b0, 0xb0b0a8, 0xa8b0a8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xb0aca8, 0xa8aca8, 0xa8aca8, 0xa8b0a8, 0xa8b0a8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8aca0, 0xa8b098, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8acb0, 0xb0acb0, 0xb0acb0, 0xa8acb0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b8, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xb0b0b0, 0xb0b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xb0b0b0, 0xb0b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8aca8, 0xa8aca8, 0xb0aca8, 0xb0aca8, 0xa8aca8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8aca0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8acb0, 0xa8b0b8, 0xb0b0b0, 0xb0b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xb0b0b0, 0xb0b0b8, 0xa8b0b8, 0xa8b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xa8b0b0, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 
0xa8b0a8, 0xa8b0a8, 0xa8aca8, 0xa8aca8, 0xa8b0a8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8b0b8, 0xa8b0b8, 0xa8b0b0, 0xa8b0b0, 0xa8b0b8, 0xa8b0b8, 0xa8b0b0, 0xa8b0b0, 0xb0b0b8, 0xb0acb0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xa8b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xa8b0b8, 0xb0b0b0, 0xb0b4b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8acb8, 0xa8acb8, 0xa8b0b8, 0xb0b0b8, 0xb0b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b8, 0xa8b0b8, 0xa8b0b0, 0xa8b0b0, 0xb0b0b8, 0xb0b0b0, 0xa8b0b8, 0xb0b0b0, 0xb0b0b8, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xa8b0b0, 0xa8b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0a8, 0xb0b0a8, 0xb0b0b0, 0xb0b0a8, 0xb0b0a8, 0xa8b0a8, 0xa8b0a0, 0xa8b0a0, 0xa8b0a8, 0xa8b0a8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8a8a0, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xb0b0b8, 0xa8b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b8, 0xa8b0b8, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b8, 0xb0b0b8, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0a8, 0xb0b0a8, 0xb0b0a8, 0xa8b0a8, 0xa8b0a8, 0xa8aca8, 0xa8aca0, 0xa8aca0, 0xa8a898, 0xa0a898, 0xa0a098, 0x98a498, 0xa0a490, 0x98a490, 0x98a898, 0xa0ac98, 0xa8ac98, 0xa8ac98, 0xa8ac98, 0xa8ac90, 0xb0b0b0, 0xa8b0b0, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b8, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xb0b0b0, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa0a4a8, 0xa0a098, 0x98a098, 0x98bc98, 0x909890, 0x909498, 0x909490, 0x889890, 0x909088, 
0x889088, 0x909c90, 0x988498, 0xa0a898, 0xa8ac98, 0xa8ac98, 0xa8ac90, 0xa8ac90, 0xb0b0b0, 0xb0b0b0, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b0, 0xb0b0b0, 0xb0b0b8, 0xb0acb0, 0xa8b0b8, 0xa8acb0, 0xa8a8a8, 0xa0a4a8, 0xa0a0a8, 0x98bca0, 0x9898a0, 0x989ca0, 0x9898a0, 0x909098, 0x889c98, 0x909498, 0x909490, 0x909088, 0x889470, 0x786060, 0x607850, 0x505428, 0x282468, 0x6874a0, 0xa09888, 0x988090, 0xa0a890, 0xa0a890, 0xa8ac90, 0xa0ac90, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b8, 0xb0b0b0, 0xa8b0b0, 0xa8b0b8, 0xb0b0b8, 0xb0b0b8, 0xa8b0b8, 0xa8acb0, 0xa8a8b0, 0xa8a4b0, 0xa0a4b0, 0x98a0a8, 0x98a0a8, 0x98b8a8, 0x989cb0, 0x9880a8, 0x98a0a8, 0x98a0a8, 0x98b8a0, 0x908c98, 0x888088, 0x788870, 0x686c78, 0x686c90, 0x888c90, 0x889c98, 0x909c98, 0x989c78, 0x807c38, 0x403c40, 0x303c40, 0x303068, 0x707890, 0x909480, 0x909c88, 0x988488, 0xa0a890, 0xa0ac90, 0xa8ac90, 0xb0b0b8, 0xb0b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8acb8, 0xa8acb8, 0xa8b0b8, 0xb0acb8, 0xa8a8b0, 0xa8a8b0, 0xa0a4a8, 0xa0a0b0, 0xa0a0a8, 0x98bcb0, 0x9880b0, 0x98a0b0, 0xa0a0b8, 0xa0a4b0, 0xa0a4b0, 0xa0a0a8, 0xa0bca8, 0x989098, 0x888890, 0x809c88, 0x786070, 0x587070, 0x505070, 0x585860, 0x382060, 0x404058, 0x484080, 0x706c90, 0x908c90, 0x888098, 0x98a098, 0x98b870, 0x807830, 0x403830, 0x282038, 0x305c48, 0x584878, 0x888c78, 0x889078, 0x909c88, 0xa08490, 0xa0a888, 0xa0a888, 0xa8b0b0, 0xa8acb0, 0xa8acb0, 0xa8a8b0, 0xa0a4b0, 0xa0a4b0, 0xa0a0a8, 0x98a0a8, 0x98a0b0, 0x98a0b0, 0xa0a4b8, 0xa0a8b8, 0xa8a8b0, 0xa8a8b0, 0xa8acb8, 0xa8a8b0, 0xa0bca8, 0x9090a0, 0x888090, 0x809888, 0x786c80, 0x706480, 0x687068, 0x504860, 0x485c68, 0x403c70, 0x402c60, 0x302c60, 0x302c58, 0x303c68, 0x403c58, 0x482c88, 0x706888, 0x988888, 0x988c80, 0x888878, 0x809060, 0x786030, 0x485428, 0x303428, 0x303038, 0x504468, 0x888870, 0x888c70, 0x909c80, 0x988888, 0xa0a890, 0xa0a888, 
0x98bcb0, 0x9880b0, 0x98a0b0, 0x98a0b0, 0xa0a4b0, 0xa0a8b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8acb0, 0xa8a8b0, 0xa8a4b0, 0xa8b4a0, 0x988090, 0x809888, 0x807480, 0x707060, 0x485468, 0x383488, 0x584c90, 0x707070, 0x584c78, 0x505460, 0x383468, 0x403068, 0x402850, 0x302450, 0x282850, 0x302c50, 0x302c48, 0x383470, 0x604068, 0x686c50, 0x504440, 0x505c38, 0x482040, 0x485838, 0x403c28, 0x382818, 0x283c30, 0x483870, 0x806468, 0x888868, 0x888080, 0xa0a880, 0xa0ac90, 0xa0a888, 0xa8acc0, 0xa8acc0, 0xa8acb8, 0xa8a8b8, 0xa8a4b0, 0xa8a4b0, 0xa8a0a8, 0xa8bca8, 0xa898a8, 0xa094a0, 0xa094a0, 0xa094a0, 0xa090a0, 0x989480, 0x807888, 0x807888, 0x787870, 0x584860, 0x485470, 0x402c88, 0x584490, 0x686878, 0x504070, 0x405068, 0x382080, 0x485c80, 0x483c60, 0x403058, 0x303060, 0x302c60, 0x303050, 0x303058, 0x303848, 0x382450, 0x485058, 0x504870, 0x687088, 0x889468, 0x687c28, 0x402c18, 0x282030, 0x485868, 0x806460, 0x888c68, 0x908488, 0xa0a888, 0xa8a888, 0xa8a888, 0xa098a8, 0xa098a8, 0xa098a8, 0xa094a8, 0xa09ca8, 0xa09ca8, 0xa09ca8, 0xa080a8, 0xa0a0a8, 0xa0a4b0, 0xa8a8b0, 0xa0acb0, 0xa0bca8, 0x988088, 0x788898, 0x888c98, 0x889868, 0x585868, 0x584c78, 0x584878, 0x504878, 0x505c70, 0x403468, 0x383060, 0x302c90, 0x505498, 0x585478, 0x584060, 0x404470, 0x405480, 0x584860, 0x405490, 0x706470, 0x606068, 0x607c88, 0x807c68, 0x585068, 0x707c38, 0x402c18, 0x303420, 0x303448, 0x584470, 0x888468, 0x808c78, 0x908480, 0xa0a480, 0xa8a888, 0xa0a888, 0xa09ca8, 0xa084b0, 0xa8a4b0, 0xa0a8b8, 0xa8acb0, 0xa8acb0, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb0, 0xa8acb0, 0xa8a0a8, 0x98a890, 0x809480, 0x706878, 0x687c78, 0x605878, 0x585880, 0x605480, 0x605078, 0x505078, 0x585c68, 0x403488, 0x585488, 0x584090, 0x606ca8, 0x909470, 0x584078, 0x687870, 0x604058, 0x485060, 0x585040, 0x382c38, 0x302838, 0x282030, 0x203828, 0x201c30, 0x200020, 0x283038, 0x383c40, 0x604468, 0x888868, 0x889070, 0x908478, 0xa0a480, 0xa0a888, 0xa0a888, 0xa0a8b8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb0, 0xa8acb0, 0xa8acb8, 0xa8b0b8, 
0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb0, 0xa8a4b0, 0xa0a488, 0x789c90, 0x786078, 0x606480, 0x606080, 0x606890, 0x686080, 0x607898, 0x707898, 0x706880, 0x687490, 0x707078, 0x504c70, 0x505878, 0x585458, 0x383458, 0x382c50, 0x302450, 0x282450, 0x282040, 0x282448, 0x282440, 0x282c48, 0x303040, 0x383040, 0x383448, 0x585c50, 0x605460, 0x686c78, 0x888c78, 0x909480, 0x908080, 0xa0a888, 0xa0a880, 0xa0a880, 0xa8acb0, 0xa8acb0, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb0, 0xa8acb0, 0xa8acb8, 0xa8b0b8, 0xa8acb8, 0xa8acb0, 0xa8a8b0, 0xa0b880, 0x707078, 0x5054a0, 0x786888, 0x687490, 0x706080, 0x607070, 0x505080, 0x585868, 0x403470, 0x383470, 0x382870, 0x382870, 0x382860, 0x302c68, 0x383060, 0x383060, 0x382068, 0x485860, 0x402c70, 0x505878, 0x605c68, 0x604c78, 0x687470, 0x707878, 0x786878, 0x809080, 0x888878, 0x809480, 0x889080, 0x909480, 0x988080, 0xa0a480, 0xa0a880, 0xa0a880, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8a8b0, 0xa8a4b0, 0xa0bc80, 0x707078, 0x584870, 0x505870, 0x403470, 0x403070, 0x383068, 0x383070, 0x383070, 0x383878, 0x403478, 0x402478, 0x484880, 0x505480, 0x584090, 0x686888, 0x687090, 0x707c90, 0x806498, 0x808c90, 0x889098, 0x909898, 0x909ca0, 0x988498, 0x98a898, 0xa0a8a0, 0xa0a498, 0x98ac80, 0x889488, 0x908c78, 0x889078, 0x908080, 0x98a488, 0xa0a888, 0xa0a880, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8a8b0, 0xa8a8b0, 0xa8a8b0, 0xa8a8b0, 0xa0a8b0, 0xa0a4a8, 0xa0a4b0, 0xa0b8a0, 0x908898, 0x809c70, 0x505c70, 0x402078, 0x484478, 0x485088, 0x585c88, 0x604498, 0x687490, 0x787898, 0x806498, 0x8090a8, 0x8894a0, 0x889ca8, 0x9080a8, 0x98a4b0, 0x98a8a8, 0xa0a8a8, 0xa0a8a8, 0xa0a8a8, 0xa0a8a8, 0xa0a8a8, 0xa0a8a0, 0xa0aca0, 0xa0aca0, 0xa0a498, 0x98ac80, 0x889488, 0x908870, 0x888870, 0x908080, 0x98a888, 0xa0ac88, 0xa0a880, 0xa8a8b0, 0xa8a8b0, 0xa8a8b0, 0xa0a8b0, 0xa8a8b0, 0xa8a8b0, 0xa8acb0, 0xa8acb0, 0xa8acb8, 0xa8acb8, 0xa8acb0, 0xa8acb0, 0xa8acb8, 0xa0a4b0, 0xa0bca8, 0x9090a0, 
0x888898, 0x808898, 0x8090a0, 0x8894a8, 0x9098b0, 0x9084b8, 0x98a8b0, 0xa0a8b8, 0xa0acb8, 0xa0a8b8, 0xa0a8b0, 0xa0a8b0, 0xa0a8b0, 0xa0acb0, 0xa0aca8, 0xa0a8a8, 0xa0aca8, 0xa0aca8, 0xa0aca8, 0xa0aca8, 0xa0a4a0, 0xa0a4a0, 0xa0a098, 0x98a098, 0x98bc90, 0x989490, 0x908470, 0x888c78, 0x908488, 0xa0a890, 0xa8ac90, 0xa8a888, 0xa8a8b0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa0a8b8, 0xa0a8b0, 0xa0acb0, 0xa0acb8, 0xa0acb8, 0xa0a8b8, 0xa0acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa0acb8, 0xa0acb0, 0xa0acb0, 0xa0acb0, 0xa0a8b0, 0xa0a4a8, 0xa0a0a8, 0x98bca0, 0x9898a0, 0x989898, 0x989090, 0x909890, 0x909490, 0x909898, 0x9080a0, 0x98a098, 0xa0b890, 0x988470, 0x889880, 0x988890, 0xa0a890, 0xa8ac90, 0xa8ac90, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb0, 0xa8acb0, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa0acb8, 0xa8acb8, 0xa8acb8, 0xa0a8b8, 0xa0a4b8, 0xa0a0b0, 0x98bcb0, 0x989cb0, 0x9898a8, 0x9094a8, 0x9094a8, 0x9094a0, 0x9094a0, 0x9094a0, 0x8894a0, 0x9094a0, 0x9094a0, 0x909ca0, 0x909ca0, 0x9080a8, 0x98a0a8, 0x98bc98, 0xa09490, 0x988470, 0x889c80, 0xa08890, 0xa0ac90, 0xa8ac90, 0xa0ac90, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8a8b8, 0xa0a8b0, 0xa0a0b0, 0xa0a0b0, 0xa0a0b0, 0x98bca8, 0x909ca8, 0x989ca8, 0x9898a8, 0x9098a8, 0x9094b0, 0x9094a8, 0x9098b0, 0x9098b0, 0x909cb0, 0x9098b0, 0x9080b0, 0x98a8b8, 0xa0a8b0, 0xa0b0b8, 0xa0b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8b0b0, 0xa8aca8, 0xa8a4a0, 0xa0bc98, 0xa09490, 0x988478, 0x889c90, 0xa08890, 0xa0ac90, 0xa8ac90, 0xa0ac90, 0xa8a8b0, 0xa8acb8, 0xa8acb0, 0xa0acb0, 0xa0a8b0, 0xa8acb8, 0xa8a8b0, 0xa0a8b0, 0xa0a8b0, 0xa0a4b0, 0x98a0b0, 0x98bcb0, 0x989cb0, 0x989ca8, 0x9894a8, 0x9098a8, 0x9094a8, 0x9098b0, 0x9098b0, 0x9094b0, 0x909cb0, 0x9080b8, 0x98a4b8, 0x98a8b8, 
0xa0acb8, 0xa8acc0, 0xa8b0c0, 0xa8acc0, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8b0b8, 0xa8acb0, 0xa8acb0, 0xa8a8a8, 0xa8a0a8, 0xa8bca0, 0xa89c98, 0xa884a0, 0xa8a4a0, 0xa8a4a0, 0xa8b890, 0x989088, 0x989890, 0xa08898, 0xa8a898, 0xa8a898, 0xa8a898, 0xa0a8b0, 0xa0a4b0, 0xa0a4b0, 0xa0a0a8, 0x98bca8, 0x9898a8, 0x9894a8, 0x9094a8, 0x9094a8, 0x9094a8, 0x9094a8, 0x9098b0, 0x909cb8, 0x9880b8, 0x98a4b8, 0xa0a8c0, 0xa0acb8, 0xa8acc0, 0xa8acc0, 0xa8acb8, 0xa8acb8, 0xa8b0c0, 0xb0b0c0, 0xa8b0c0, 0xa8acb8, 0xb0a8b8, 0xa8a8b8, 0xb0a4b0, 0xa8a0b0, 0xa8a0b0, 0xa8bca8, 0xa89ca8, 0xa880a8, 0xa8a4a8, 0xa8a4a8, 0xa8a8b0, 0xb0aca8, 0xb0aca8, 0xb0aca8, 0xa8a8a8, 0xa8a8a0, 0xa8bc98, 0x989c98, 0xa09890, 0xa08898, 0xa8a898, 0xa8ac98, 0xa8a898, 0x9094a8, 0x8894a8, 0x9098a8, 0x9098b0, 0x909cb0, 0x9880b8, 0x98a4b8, 0xa0acc0, 0xa0acc0, 0xa8acc0, 0xa8acb8, 0xa8b0c0, 0xb0b0c0, 0xa8b0c0, 0xb0b0c0, 0xb0b0b8, 0xa8acb8, 0xb0a8b8, 0xa8a8b8, 0xb0a4b0, 0xa8a0b0, 0xa8a0b0, 0xa8a0b0, 0xb0a0b0, 0xb0a4b0, 0xb0a4b0, 0xb0a8b8, 0xb0a8b8, 0xb0acb8, 0xb0acb8, 0xb0b0b8, 0xb0acb8, 0xa8b0b8, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8a8b0, 0xa8a8b0, 0xa8aca8, 0xa0a8a8, 0xa0a8a8, 0xa0a0a0, 0x98b898, 0x989898, 0x988498, 0xa0a898, 0xa8ac98, 0xa0a898, 0xa0a4b0, 0xa0acb8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0c0, 0xa8acb8, 0xa8acb8, 0xb0acb0, 0xa8a4b0, 0xa8a4b0, 0xa8a0b0, 0xa8a4b0, 0xb0a0b0, 0xb0a4b0, 0xb0a8b0, 0xb0a8b0, 0xb0a8b8, 0xb0acb8, 0xb0acb8, 0xb0acb8, 0xb0acb8, 0xb0b0b8, 0xb0b0c0, 0xb0acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb0, 0xa8acb8, 0xa8b0b8, 0xa8acb0, 0xa8b0b0, 0xa0acb0, 0xa0a8b0, 0xa8a8b0, 0xa8aca8, 0xa0aca8, 0xa0aca8, 0xa0a8a0, 0xa0b490, 0x909490, 0x988898, 0xa0a8a0, 0xa8ac98, 0xa8a898, 0xa8a4b0, 0xa8a4b0, 0xa8a0b0, 0xa8a0b0, 0xa8bcb0, 0xa89cb0, 0xa880b0, 0xa8a4b0, 0xa8a4b0, 0xa8a8b8, 0xb0a8b8, 0xb0acb8, 0xb0acb8, 0xb0acb8, 0xb0acc0, 0xb0acc0, 0xb0acb8, 0xa8acb8, 0xa8acb8, 0xa8b0b8, 0xa8acb8, 0xa8acb8, 0xa8b0b8, 0xa8b0b8, 0xa8acc0, 0xa8acc0, 0xa8acc0, 0xa8acc0, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8b0b8, 
0xa8b4b8, 0xb0acb8, 0xa8acb0, 0xa0acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8acb0, 0xa8aca8, 0xa0a8a8, 0xa0b490, 0x9084a0, 0xa0aca0, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8a0b0, 0xa8a4b0, 0xb0a8b0, 0xa8acb8, 0xb0acb8, 0xb0acb8, 0xb0acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8b0b8, 0xa8b0b8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acc0, 0xa8acc0, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0c0, 0xa8b0c0, 0xa8b0c0, 0xa8b0c0, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8acb0, 0xa8a8b0, 0xa0acb0, 0xa8aca8, 0xa8a8a8, 0xa0a4a0, 0xa0a0a0, 0xa0bc98, 0x989890, 0x989c90, 0x988ca0, 0xa8aca8, 0xa8aca0, 0xa8aca0, 0xa8aca0, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acc0, 0xa8acc0, 0xa8acc0, 0xa8acc0, 0xa8acc0, 0xa8acc0, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8acc0, 0xa8b0c0, 0xa8b0b8, 0xa8b0b8, 0xa8b0b8, 0xa8b0c0, 0xa8b0c0, 0xa8a8b8, 0xa8a8b0, 0xa8acb8, 0xa8a8b0, 0xa0a8b0, 0xa0a8a8, 0xa0acb0, 0xa8acb0, 0xa8a4a8, 0xa0a0a8, 0xa0a4a8, 0xa0a8a0, 0xa0aca8, 0xa0aca8, 0xa8b4b0, 0xa8b4b0, 0xb0b8b0, 0xb0b4a8, 0xb0b4a8, 0xa8b0a8, 0xa8b0a0, 0xa8aca0, 0xa8aca0, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acc0, 0xa8b0b8, 0xa8b0b8, 0xa8acc0, 0xa8b0c0, 0xa8acc0, 0xa8b0c0, 0xa8b0c0, 0xa8acc0, 0xa8acb8, 0xa8acb8, 0xa8acb0, 0xa8acb0, 0xa0a8b0, 0xa8a8b0, 0xa8a8a8, 0xa8a8a8, 0xa8a8b0, 0xa0a0a8, 0x98a0a8, 0xa0a0a8, 0xa0a8a8, 0xa0a8a8, 0xa0acb0, 0xa8b0b0, 0xa8acb0, 0xa8a8b0, 0xa0b4b8, 0xb0bcc0, 0xb8bcb8, 0xb8bcb8, 0xb8bcb8, 0xb8bcb8, 0xb8bcb0, 0xb0b8b0, 0xb0b4b0, 0xb0b4a8, 0xa8b0a8, 0xa8b0a8, 0xa8aca0, 0xa8aca0, 0xa0a8b8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8acc0, 0xa8acc0, 0xa8acb8, 0xa8acb8, 0xa8acb8, 0xa8a4b8, 0xa0a4b0, 0xa0a0b0, 0xa0a0a8, 0xa0bca8, 0x989ca0, 0x9898a0, 0x9898a0, 0x989ca0, 0xa09ca8, 0xa080a8, 0xa0a4a8, 0xa0acb0, 0xa8b0b8, 0xa8b8c0, 0xb0bcc0, 0xb8bcc0, 0xb8a0c0, 0xb8c4c8, 0xb8c0c0, 0xb8c0c0, 0xb8dcc0, 0xb8bcc0, 0xb8bcc0, 0xb8bcc0, 0xb0bcb8, 0xb8bcb8, 0xb0b8b8, 0xb0b4b0, 
0xb0b4b0, 0xb0b4b0, 0xb0b4b0, 0xb0b0b0, 0xb0b0a8, 0xa8b0a8, 0xa8aca0, 0xa8aca0, 0xa0acb0, 0xa0acb0, 0xa0a8b0, 0xa0a8b0, 0xa0a8b0, 0xa0a4b0, 0xa0a0a8, 0xa0a0a8, 0xa0bca0, 0x9898a0, 0x989498, 0x9898a0, 0x9898a0, 0x989ca8, 0x9880a8, 0x98a4b0, 0xa0acb0, 0xa8b4b8, 0xa8b8c0, 0xb0bcc0, 0xb0bcc0, 0xb8bcc0, 0xb8bcc0, 0xb8a0c8, 0xb8c0c8, 0xb8c0c8, 0xb8c0c8, 0xb8dcc8, 0xb8bcc0, 0xb8a0c0, 0xb8dcc0, 0xb8bcc0, 0xb0bcc0, 0xb8bcc0, 0xb8b8c0, 0xb0b8c0, 0xb0b8b8, 0xb0b8b8, 0xb0b4b0, 0xb0b4b0, 0xb0b4b0, 0xb0b4b0, 0xb0b0a8, 0xa8b0a8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0xa8aca8, 0x989c98, 0x989898, 0x989498, 0x909498, 0x909498, 0x909898, 0x989ca8, 0x9880a8, 0x98a8b0, 0xa0acb8, 0xa8b4b8, 0xa8b8c0, 0xb0b8c0, 0xb0bcc0, 0xb8a0c0, 0xb8c0c0, 0xb8c0c8, 0xb8c0c8, 0xb8c0c8, 0xb8c0c8, 0xb8dcc8, 0xb8bcc8, 0xb8bcc0, 0xb8bcc0, 0xb8b8c0, 0xb8b8c0, 0xb8bcc8, 0xb8b8c8, 0xb8bcc0, 0xb8bcc0, 0xb8bcc0, 0xb0b8c0, 0xb0b8b8, 0xb0b4b8, 0xb0b4b8, 0xb0b4b8, 0xb0b4b8, 0xb0b4b8, 0xb0b4b8, 0xb0b0b8, 0xb0b0b0, 0xb0b0b0, 0xa8b0b0, 0xb0b0b0, 0xb0b0b0, 0xa8acb0, 0xa8aca8, 0xa8aca8

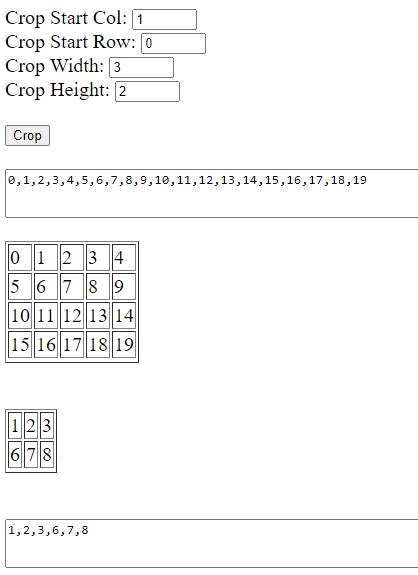

The RGB888 data above, when saved to a text file called frame23.txt and converted using

edge-impulse-framebuffer2jpg --framebuffer-file "frame23.txt" --width 48 --height 48 --output-file frame23-15.jpg

shows an image of the Nano33BleSense. You can even see the correct blue and red lines on the breadboard.
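A dump in the same comma-separated hex layout as the one above could be produced along these lines. This is only a sketch: `format_framebuffer` is a hypothetical helper, and it assumes each float holds one packed 0xRRGGBB pixel.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <string>

// Format packed 0xRRGGBB pixels (stored as floats) as "0xrrggbb, 0xrrggbb, ...".
std::string format_framebuffer(const float *pixels, size_t count) {
    std::string out;
    char buf[16];
    for (size_t i = 0; i < count; i++) {
        // Convert the float back to an integer before printing it as 6 hex digits.
        std::snprintf(buf, sizeof(buf), "0x%06lx",
                      static_cast<unsigned long>(pixels[i]));
        out += buf;
        if (i + 1 < count) {
            out += ", ";
        }
    }
    return out;
}
```

On the board, the returned string (or each formatted pixel in turn) would be written to the serial port; printing exactly what the classifier receives is what makes the converted JPEG a meaningful check.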

Below is a GitHub link to the code I am using.