Hi,

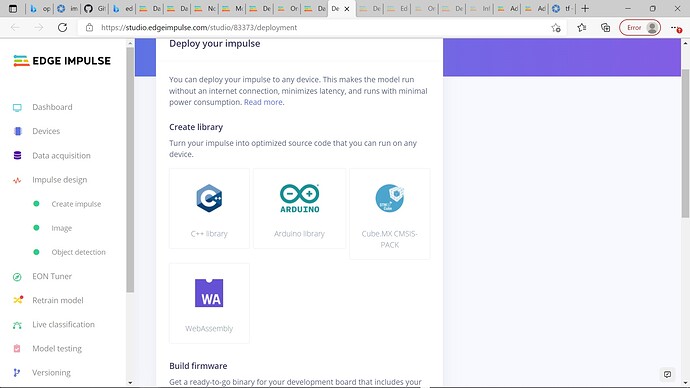

I am trying to follow the deployment directions for the OpenMV H7 camera board, which say to export the .tflite and .txt files. That option is not available in my UI. Any clues?

Thanks,

Stimpy

Hi @Stimpy,

Can you provide a screenshot of what you see in your UI?

You should be able to go to the Deployment page in your project, select “OpenMV” and click Build. In a few moments, a .zip file should be downloaded containing the .tflite and .txt files.
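To sanity-check the downloaded archive before copying files to the camera, you could use a minimal sketch like the one below. It runs in standard Python on a PC, not on the camera. It lists the archive and looks for exactly one `.tflite` model and one `.txt` labels file. The file names inside `make_demo_zip()` are hypothetical placeholders for illustration. The names in a real OpenMV deployment zip may differ.

```python
import io
import zipfile


def find_openmv_files(zip_bytes):
    """Return (model_name, labels_name) from an OpenMV deployment archive.

    Assumes the archive holds exactly one .tflite model and one .txt labels
    file; their exact names and folders are not assumed.
    """
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as zf:
        names = zf.namelist()
    models = [n for n in names if n.lower().endswith(".tflite")]
    labels = [n for n in names if n.lower().endswith(".txt")]
    if len(models) != 1 or len(labels) != 1:
        raise ValueError(f"expected one .tflite and one .txt file, got {names}")
    return models[0], labels[0]


def make_demo_zip():
    """Build a stand-in archive in memory (hypothetical file names)."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        zf.writestr("trained.tflite", b"\x00" * 16)
        zf.writestr("labels.txt", "ball\nnot_ball\n")
        zf.writestr("classify.py", "# inference script\n")
    return buf.getvalue()


if __name__ == "__main__":
    # For a real download (hypothetical name): open("openmv.zip", "rb").read()
    print(find_openmv_files(make_demo_zip()))
```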

Please note that this option is only available on image-type projects. The OpenMV library option will not show up on other types of projects. You can still download the .tflite file manually from the Dashboard and create your own .txt labels file. However, the OpenMV library expects data to be formatted in a very particular manner to be used for inference. You can read more about it here.
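If you build the labels file by hand, a reasonable assumption is one class label per line. The line order must match the order of the model's output scores, and the sketch below cannot check that for you. It runs in standard Python, not on the camera. It writes and reads such a file and pairs the scores with labels to pick the top class. The class names are hypothetical.

```python
import os
import tempfile


def parse_labels(text):
    """One class label per line; blank lines are ignored."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def write_labels(path, labels):
    """Write labels in the same order as the model's output scores."""
    with open(path, "w") as f:
        f.write("\n".join(labels) + "\n")


def read_labels(path):
    with open(path) as f:
        return parse_labels(f.read())


def top_label(labels, scores):
    """Pair each output score with its label and return the highest-scoring one."""
    if len(labels) != len(scores):
        raise ValueError("labels.txt must have one line per model output")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return labels[best], scores[best]


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "labels.txt")
    write_labels(path, ["ball", "robot"])  # hypothetical class names
    print(top_label(read_labels(path), [0.2, 0.8]))
```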

I’ve noticed that I can’t get the model file below about 4 MB, which won’t fit on the OpenMV camera. What do I need to do to get a file small enough to run on the camera?

Thanks,

Stimpy

I looked into the .tflite file size problem. Apparently, I don’t have a 96x96 transfer learning model available either. Can you tell me where I can get one?

Thanks,

Aaron

Can you explain how to make the .tflite files for the OpenMV cam manually? I’m trying to wade through the docs, but I can’t find any mention of the TF model flattening, etc., that seems to be required for the camera to accept the model.

Any clues?

Stimpy

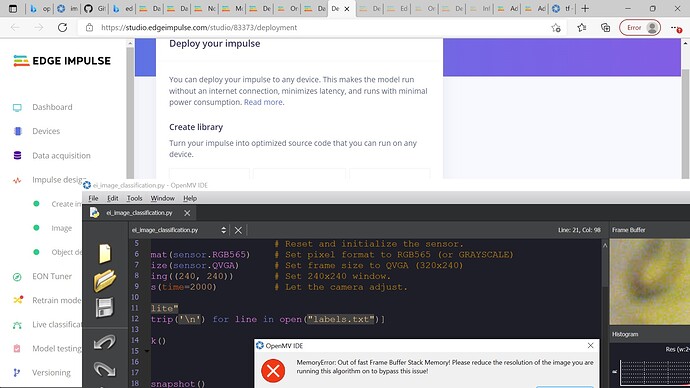

OK, I finally realized that image classification doesn’t need manually drawn boxes and just extracts its own features. But there seem to be a variety of models. “MobileNetV1 96x96 0.25 (no final dense layer, 0.1 dropout)” seems pretty small at 298 kB, but it still fails to run on my OpenMV cam. I tried several of the others but still get the error shown above!

Stack overflow?

Clues?

Aaron

Tried a much smaller .tflite file (85 kB), and it still crashes the camera. No error message this time, just a complete fart out.

Wasted a lot of time on this already. Any clues?

Stimpy,

It looks like you are doing object detection, which is not currently supported on the OpenMV cam. The TFLite interpreter for it is missing some of the ops, so it won’t run at this time. It can only do image classification.

As I noted above, I made that observation and went on to create the 96x96 classification model. The size looked good, so I tried your downloaded .py script with the .tflite model. The error I got doing that is shown above. I thought the file was too big, so I reduced it twice using the Impulse tools and different model choices. In each case the model crashed the camera. The .py script would run up to the tf.classify line and then crash, blinking green then white, which is the pattern described for a freak-out. Why?

Stimpy

I should note that I have the newest H7 R2 model.

More info:

Made a separate .py script that just imports the tf library and then tries to load the model:

`tf.load("trained.tflite")`

Immediate crash.

There is nothing else in the script, not even snapshot().

So this is a total failure I think?

What could cause that?

Stimpy

More info:

Tried the unoptimized float32 model instead of int8: no difference.
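For context, the float32 build would not be expected to help with memory. A float32 model stores each weight in 4 bytes, while an int8 model stores it in 1 byte plus a scale and zero point. The sketch below shows that storage arithmetic and the affine int8 mapping used by TensorFlow Lite integer quantization. The parameter count and scale are made-up example values, not figures from this project.

```python
BYTES_PER_VALUE = {"float32": 4, "int8": 1}


def weight_bytes(num_params, dtype):
    """Approximate weight storage, ignoring per-tensor metadata."""
    return num_params * BYTES_PER_VALUE[dtype]


def quantize(x, scale, zero_point):
    """Affine int8 quantization: q = round(x / scale) + zero_point, clamped."""
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q, scale, zero_point):
    """Inverse mapping used at inference time: x ~= scale * (q - zero_point)."""
    return scale * (q - zero_point)


if __name__ == "__main__":
    params = 250_000  # example parameter count, not taken from this project
    print(weight_bytes(params, "float32"), weight_bytes(params, "int8"))
    q = quantize(1.0, scale=0.0625, zero_point=0)
    print(q, dequantize(q, scale=0.0625, zero_point=0))
```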

Same crash and freak-out.

Stimpy

To me it seems like there is a complete failure somewhere in all this. Too bad. Might have bought a few seats for the company…

I can’t send the model files to you here, where can I send them? They are apparently broken. You’ll need to take a look and see why.

Let me know if this is fixable. I can’t wait too much longer for this to work as you claim it does.

Hi @Stimpy,

From the error, it seems that your OpenMV cam is running out of the memory used to store and process images. This is a big limitation of the H7 and H7 R2 models: both have 512 kB of frame buffer/stack.
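To see how quickly images eat into that 512 kB, the raw size of an uncompressed image is width × height × bytes per pixel. The sketch below counts only raw image sizes. The same memory must also hold the model's working memory and other buffers, so a frame that "fits" on paper can still leave too little room. The format names mirror common OpenMV pixel formats, but this is plain Python, not the camera API.

```python
# Bytes per pixel for common formats (RGB565 packs one colour pixel into 16 bits).
BYTES_PER_PIXEL = {"GRAYSCALE": 1, "RGB565": 2, "RGB888": 3}


def frame_bytes(width, height, pixformat):
    """Raw size of one uncompressed image of the given size and format."""
    return width * height * BYTES_PER_PIXEL[pixformat]


if __name__ == "__main__":
    budget = 512 * 1024  # the 512 kB frame buffer/stack figure quoted above
    for w, h, fmt in [(320, 240, "RGB565"), (96, 96, "RGB565"),
                      (96, 96, "RGB888"), (48, 48, "GRAYSCALE")]:
        size = frame_bytes(w, h, fmt)
        print(f"{w}x{h} {fmt}: {size} bytes ({100 * size / budget:.1f}% of budget)")
```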

One option is to purchase the H7 Plus model, which has 32 MB of frame buffer. However, it sounds like you are limited on time, so that may not be an option.

Another option is to use MobileNetV2 (possibly the 0.1 or 0.05 versions) with the smallest input you can get away with (likely 48x48 pixels). I have not tried this myself, but you should be able to use the mean_pool() function to downscale your captured images on the OpenMV.
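The idea behind mean pooling can be sketched in plain Python. Each output pixel is the average of an x_div by y_div block, so pooling a 96x96 image with 2x2 blocks gives 48x48. This only illustrates the technique on a grayscale image stored as a list of rows. It is not OpenMV's implementation, and on the camera you would call the image method mentioned above instead.

```python
def mean_pool(pixels, x_div, y_div):
    """Downscale a 2D grayscale image (list of rows) by averaging blocks.

    Each output pixel is the integer mean of an x_div-by-y_div block.
    Trailing rows/columns that do not fill a whole block are dropped.
    """
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    out = []
    for y0 in range(0, height - y_div + 1, y_div):
        row = []
        for x0 in range(0, width - x_div + 1, x_div):
            block = [pixels[y][x]
                     for y in range(y0, y0 + y_div)
                     for x in range(x0, x0 + x_div)]
            row.append(sum(block) // len(block))
        out.append(row)
    return out


if __name__ == "__main__":
    image = [[0, 2, 4, 6],
             [2, 4, 6, 8],
             [10, 10, 20, 20],
             [10, 10, 20, 20]]
    print(mean_pool(image, 2, 2))
```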

To save even more space, it would be best to convert your images to grayscale. Since this is going on an FRC robot, that may not be possible (colors may be important).
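To show where grayscale saves space, the sketch below compares the size of a 48x48 int8 input tensor with three color channels versus one. It also shows a common RGB-to-luma formula (ITU-R BT.601 weights). The savings shown apply to the input tensor and the captured frame, not necessarily to the rest of the model's working memory. The camera's own grayscale conversion may use a different formula.

```python
def rgb_to_gray(r, g, b):
    """ITU-R BT.601 luma approximation for 8-bit channels, rounded to an int."""
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def input_tensor_bytes(width, height, channels, bytes_per_value=1):
    """Size of an int8/uint8 input tensor of shape (height, width, channels)."""
    return width * height * channels * bytes_per_value


if __name__ == "__main__":
    print(input_tensor_bytes(48, 48, 3), input_tensor_bytes(48, 48, 1))
    print(rgb_to_gray(255, 0, 0), rgb_to_gray(0, 255, 0), rgb_to_gray(0, 0, 255))
```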

I recommend reading through this tutorial to see how the author manages to get a MobileNetV2 model to run on the OpenMV H7 (R1) cam. Please note that some of the commenters ran into the same problem you are having: they run out of memory quickly. The fix is either to use a smaller model with smaller input images or to get the H7 Plus model.

Finally, if you have the space and power budget on your robot, you may want to consider moving to a Raspberry Pi 3B+ or 4B. They should be able to perform image classification and even object detection (albeit at a slower frame rate) without much of a problem.

I guess I came to the same conclusion after stumbling across that same article a while ago. I have an Arduino Due handy; will that work? What I would need is some example code to get the H7 feeding video to the co-processor. The FRC people have exhausted the available supply of the Plus units. Too bad you can’t supply code to virtualize the SD card. It might slow things down a bit, but it could work.

BTW, I didn’t see any 48x48 models in the list for training. Anyway, I did make a pretty small model that claims to need only 104 kB of peak RAM and 85 kB on disk. It still won’t work.

Hi @Stimpy,

The Arduino Due is an Arm Cortex-M3 that runs at a much slower clock speed and has a fraction of the RAM of the OpenMV, so I highly doubt it will work with MobileNet.

Virtualizing the SD card is a low-level operation of the OpenMV. We don’t have control over that aspect of the board, so I recommend asking on the OpenMV forum.

The way MobileNetV2 operates, it will work with any input resolution down to 32x32. The models you find on Edge Impulse were originally trained with 96x96 images or with 160x160 images. They will be most accurate if you use images with the same resolution during transfer learning (hence the note e.g. “works best with 96x96 input size”). However, they will happily take images down to 32x32 (you’ll just lose some accuracy).
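One way to see why 32x32 is the floor is that MobileNetV2 halves the spatial size at five stride-2 stages, for an overall downsampling factor of 32. At a 32x32 input, the final feature map is already 1x1. The sketch below assumes "same"-style padding, so each stride-2 layer maps n to ceil(n / 2). It is a back-of-the-envelope calculation, not the Edge Impulse model definition.

```python
import math


def final_feature_map_size(input_size, num_stride2_layers=5):
    """Spatial size after MobileNetV2's stride-2 stages, assuming 'same' padding.

    Each stride-2 layer maps n -> ceil(n / 2); with five of them the overall
    downsampling factor is 32.
    """
    n = input_size
    for _ in range(num_stride2_layers):
        n = math.ceil(n / 2)
    return n


if __name__ == "__main__":
    for size in (160, 96, 48, 32):
        side = final_feature_map_size(size)
        print(f"{size}x{size} input -> {side}x{side} final feature map")
```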

If it helps, here is another example of performing inference with MobileNetV2 0.05 (trained on 96x96 images but with 48x48 images used for transfer learning) on the OpenMV. Note that I am using grayscale.

We need color, as previously noted.

Fortunately, we have obtained a Plus unit at market price.

I expect to be able to run at least the 96x96 filter I cooked up, since it only needs about 160K for the image, and it will now have all that frame buffer space, so it shouldn’t crash.

That will be when the new camera arrives the day after tomorrow.

Now I am trying to bring up the servo unit to be able to track the target and follow along with the drive.

No luck so far with these examples. As I look into the various pieces, I notice that when I call for P7 in the DAC instantiation (P7 is the servo output I want to use), I immediately get a compiler error telling me D12 is not a servo control pin. But the camera card says just the opposite. Which is correct? Since neither works, maybe it’s not important. There seem to be a lot of problems with this part. Can you please unravel this latest conundrum?