Question/Issue:

Why does my model return 0 for every prediction on every inference?

Project ID:

730902

Context/Use case:

Applying int8 quantization to a BYOM (Bring Your Own Model) model

Summary:

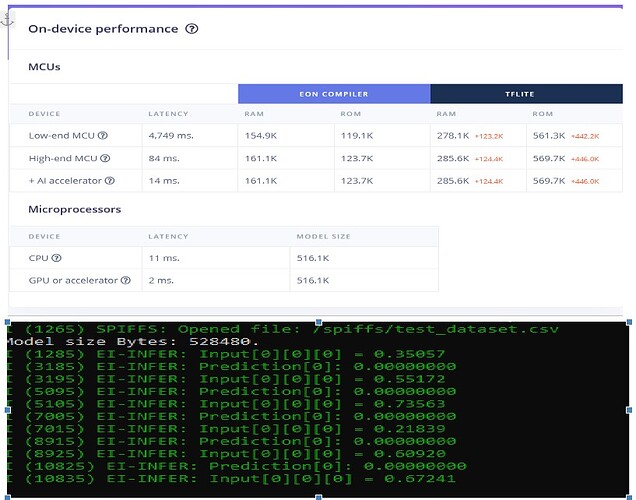

I’ve successfully loaded my model (BYOM) in saved_model.zip format and provided a representative dataset. It appears to load correctly, and Edge Impulse calculates the MCU performance.

I’ve also selected the Quantization (int8) option.

Everything builds successfully on Edge Impulse. I then generated the C++ library, added it to my project, and the project builds successfully with my model. But when I flash and monitor, I get a prediction of 0 for every inference.

The model I uploaded is an LSTM, for which I had to downgrade to an older version of TensorFlow (2.13).

Am I missing something, or is the quantization not working properly?
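To make the question concrete, here is a minimal sketch of one way int8 quantization could produce all-zero outputs. It assumes the standard affine mapping `real = (q - zero_point) * scale`. The prediction values, both scales, and the zero point are made up for illustration and are not taken from my model or from what Edge Impulse generates.

```python
def quantize(values, scale, zero_point):
    """Map float values to int8 using real = (q - zero_point) * scale."""
    quantized = []
    for v in values:
        q = round(v / scale) + zero_point
        quantized.append(max(-128, min(127, q)))  # clamp to the int8 range
    return quantized


def dequantize(qvalues, scale, zero_point):
    """Map int8 values back to floats."""
    return [(q - zero_point) * scale for q in qvalues]


# Hypothetical small-magnitude float predictions (illustrative only).
predictions = [0.002, 0.004, 0.009]
zero_point = 0  # assumed for simplicity

# Assumed scales: a coarse one (calibrated on a much wider range than the
# real outputs) and a fine one that matches the real output range.
coarse_scale = 0.05
fine_scale = 0.0001

coarse_q = quantize(predictions, coarse_scale, zero_point)
coarse = dequantize(coarse_q, coarse_scale, zero_point)

fine_q = quantize(predictions, fine_scale, zero_point)
fine = dequantize(fine_q, fine_scale, zero_point)

print("coarse:", coarse_q, coarse)
print("fine:  ", fine_q, fine)
```

With the coarse scale, every value rounds to the zero point and dequantizes to exactly 0, while the fine scale preserves the values. If something similar is happening here, the output scale derived from my representative dataset may not match the range the model actually produces, but I have not confirmed this.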

Steps to Reproduce:

- Load the BYOM model in SavedModel format

- Enable the quantization (int8) optimization option

- Generate the C++ library, integrate it into the project, then flash and monitor

Expected Results:

I expected to get actual values in my prediction results, as I do with my non-optimized TFLite models, which work when deployed on the MCU (ESP32-S3-N8R8).

Actual Results:

The prediction results are 0 for every inference.

Reproducibility:

- [x] Always

- [ ] Sometimes

- [ ] Rarely

Environment:

- Platform: ESP32-S3-N8R8

- Build Environment Details: ESP-IDF

- OS Version: Windows 11

- Edge Impulse Version (Firmware): 1.72.4

- Edge Impulse CLI Version: Not used

- Project Version: 1.0.0

- Custom Blocks / Impulse Configuration: None