enko

January 2, 2024, 4:30pm

#1

Hi,

I’ve used this example

and this works fine:

Hi @enko

Can you please share the Project ID, and the IDE version that you are using / compiler, OS and any other details you have. So we can reproduce your environment. Thanks!

Best

Eoin

My project on EI: https://studio.edgeimpulse.com/studio/319646

but now I have no clue how to integrate my camera into it.

I can’t find a way to convert grayscale to the out_ptr format; all the examples are for RGB. Should I use RGB?

How can I put my grayscale data into

signal_t signal;

signal.total_length = sizeof(features) / sizeof(features[0]);

signal.get_data = &get_feature_data;

from

#define FRAME_WIDTH (80)

#define FRAME_HEIGHT (60)

static volatile uint8_t camera_buff[FRAME_WIDTH*FRAME_HEIGHT];

My current flow: 80x60 grayscale buffer -> EI inference

How can I extract features from the image?

Thanks,


enko

January 3, 2024, 1:16pm

#2

It looks like I’ve now found a way to feed the ML network:

int get_camera_data(size_t offset, size_t length, float *out_ptr)

{

size_t bytes_left = length;

size_t out_ptr_ix = 0;

uint8_t r, g, b;

// read byte for byte

while (bytes_left != 0)

{

// grab the value and convert to r/g/b

uint8_t pixel = camera_buff[offset + out_ptr_ix];

mono_to_rgb(pixel, &r, &g, &b);

// then convert to out_ptr format

float pixel_f = (r << 16) + (g << 8) + b;

out_ptr[out_ptr_ix] = pixel_f;

// and go to the next pixel

out_ptr_ix++;

bytes_left--;

}

return 0;

}

signal.total_length = EI_CLASSIFIER_INPUT_WIDTH * EI_CLASSIFIER_INPUT_HEIGHT;

signal.get_data = &get_camera_data;
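For reference, here is a minimal standalone sketch of the grayscale packing used in the callback above. It assumes the callback must write one float per pixel holding a 0xRRGGBB value, and that `offset` counts pixels from the start of the frame. `pack_gray` and `get_gray_data` are hypothetical names, and `frame` is a stand-in for `camera_buff`; none of this is taken from the SDK itself. Note that the read index has to advance with each pixel (`offset + i`), otherwise every output value comes from the same byte.

```c
#include <stddef.h>
#include <stdint.h>

#define FRAME_WIDTH  (80)
#define FRAME_HEIGHT (60)

/* Stand-in for camera_buff: one 8-bit grayscale value per pixel. */
static uint8_t frame[FRAME_WIDTH * FRAME_HEIGHT];

/* Pack one gray value into the 0xRRGGBB layout, with R = G = B. */
static float pack_gray(uint8_t c)
{
    uint32_t rgb = ((uint32_t)c << 16) | ((uint32_t)c << 8) | (uint32_t)c;
    return (float)rgb; /* values up to 0xFFFFFF fit exactly in a float */
}

/* Fill out_ptr with `length` packed pixels, starting at pixel `offset`. */
static int get_gray_data(size_t offset, size_t length, float *out_ptr)
{
    if (offset + length > sizeof(frame)) {
        return -1; /* request runs past the end of the frame */
    }
    for (size_t i = 0; i < length; i++) {
        out_ptr[i] = pack_gray(frame[offset + i]);
    }
    return 0;
}
```

The bounds check is an addition for this sketch: it makes a mismatch between `signal.total_length` and the buffer size fail loudly instead of reading past the frame.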

But my prediction is incorrect: even though I have no data, I see an accuracy of about 0.98 for class 2.

I used a 28x28 dataset for model training, and it works fine if I feed in the raw data.

But when I feed in 80x60 images, I see an incorrect result.

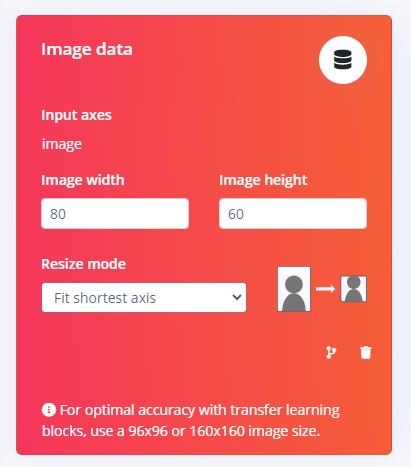

my Image data setting:

What am I doing wrong?
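One possible cause, sketched here under assumptions: if the model input is 28x28 (so `EI_CLASSIFIER_INPUT_WIDTH * EI_CLASSIFIER_INPUT_HEIGHT` is 784), a callback reading straight from the 80x60 buffer only ever sees the first 784 bytes, which is fewer than ten rows of the frame. The frame would need to be scaled down to the model's input size first. The nearest-neighbour resize below is a hypothetical helper written for illustration, not an SDK function.

```c
#include <stddef.h>
#include <stdint.h>

/* Nearest-neighbour resize of an 8-bit grayscale image.
 * src is src_w x src_h, dst is dst_w x dst_h, both stored row by row. */
static void resize_gray_nn(const uint8_t *src, size_t src_w, size_t src_h,
                           uint8_t *dst, size_t dst_w, size_t dst_h)
{
    for (size_t y = 0; y < dst_h; y++) {
        /* Map the destination row back to a source row. */
        size_t sy = (y * src_h) / dst_h;
        for (size_t x = 0; x < dst_w; x++) {
            /* Map the destination column back to a source column. */
            size_t sx = (x * src_w) / dst_w;
            dst[y * dst_w + x] = src[sy * src_w + sx];
        }
    }
}
```

Calling `resize_gray_nn(camera_buff_copy, 80, 60, small, 28, 28)` (both buffer names are placeholders) would give a 784-byte image for the callback to read from. Scaling 80x60 directly to 28x28 stretches the picture, since the aspect ratios differ.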

louis

January 3, 2024, 3:32pm

#3

Hello @enko ,

I’d suggest you use a square image ratio to train your model, especially if you used a pre-trained transfer learning model.

Best,

Louis
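On the device side, one way to get a square image out of a non-square camera frame is to crop the centre before resizing. The sketch below assumes an 8-bit grayscale frame stored row by row; `crop_center_square` is a hypothetical helper, not part of the SDK.

```c
#include <stddef.h>
#include <stdint.h>

/* Copy the largest centred square of src (src_w x src_h) into dst.
 * dst must hold side * side bytes; the side length is returned. */
static size_t crop_center_square(const uint8_t *src, size_t src_w,
                                 size_t src_h, uint8_t *dst)
{
    size_t side = (src_w < src_h) ? src_w : src_h;
    size_t x0 = (src_w - side) / 2; /* columns skipped on the left */
    size_t y0 = (src_h - side) / 2; /* rows skipped at the top */

    for (size_t y = 0; y < side; y++) {
        for (size_t x = 0; x < side; x++) {
            dst[y * side + x] = src[(y0 + y) * src_w + (x0 + x)];
        }
    }
    return side;
}
```

For an 80x60 frame this keeps the middle 60x60 region and drops 10 columns on each side, so a later resize to a square model input does not distort the image.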

enko

January 3, 2024, 5:34pm

#4

Hello @louis ,

Thanks for the suggestion. I’ll try to retrain my model.

Sorry, but I don’t understand why I need to resize my image, since I set the input width and height to match the picture.

Also, I’m trying to use the code example from

/**

* Read from Arducam camera (Himax HM01B0) in grayscale, resize image to 96x96,

* run inference with resized image as input, and print results over serial.

*

* Copyright (c) 2023 EdgeImpulse, Inc.

*

* Licensed under the Apache License, Version 2.0 (the License); you may

* not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an AS IS BASIS, WITHOUT

* WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

// RP2040 libraries


// Callback: fill a section of the out_ptr buffer when requested

static int get_signal_data(size_t offset, size_t length, float *out_ptr)

{

uint8_t c;

float pixel_f;

// Loop through requested pixels, copy grayscale to RGB channels

for (size_t i = 0; i < length; i++)

{

c = (image_buf + offset)[i];

pixel_f = (c << 16) + (c << 8) + c;

out_ptr[i] = pixel_f;

}

return EIDSP_OK;

}