Hi Jan,

I begin the creation of the model with my dataset but I I encountered 2 errors :

My training set consist of several hundreds of sound samples of 2 seconds each.

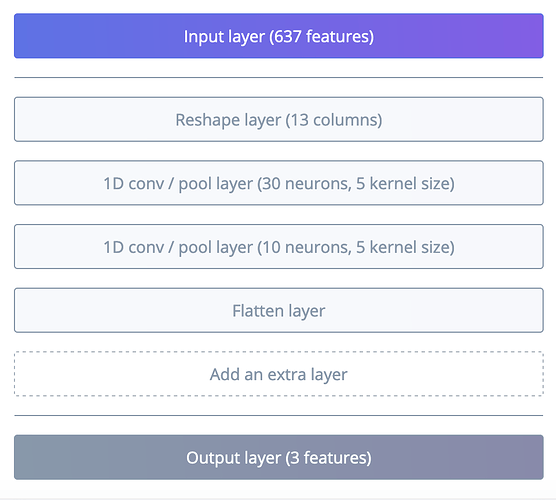

I set :

- Window size = 200 ms

- Window step = 20 ms

- When I launch the generation of features, the script is raising the following error during the execution :

ERR: DeadlineExceeded - Job was active longer than specified deadline

ERR: DeadlineExceeded - Job was active longer than specified deadline

****Job failed (see above)

When I set the window step to 100 ms, the script run without error.

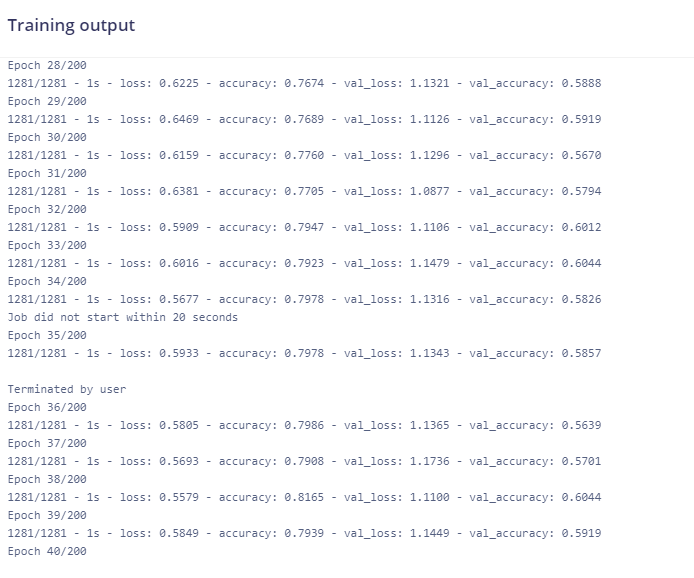

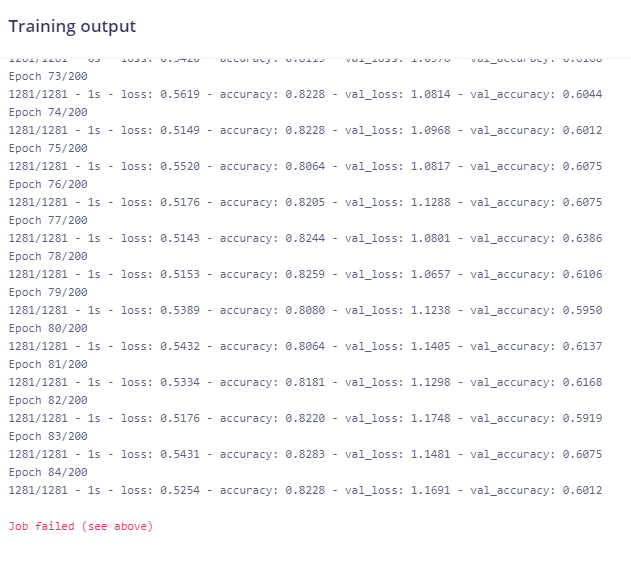

- After generating the features , when I launch the training of the neural network, the script is raising immediately the following error :

EDIT : I solved this second error by setting kernel size to 1 (instead 5 by default).

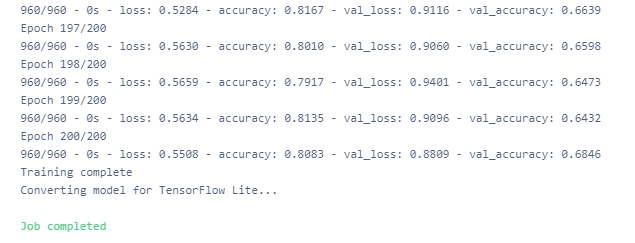

By doing this, the training run successfully.

However I obtain disappointing results (accuracy = 30 %). How can I improve the performance of the neural network ?

Creating job… OK (ID: 160025) Copying features from processing blocks… Copying features from processing blocks OK Training model Job started Traceback (most recent call last): File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/framework/ops.py”, line 1619, in _create_c_op c_op = c_api.TF_FinishOperation(op_desc) tensorflow.python.framework.errors_impl.InvalidArgumentError: Negative dimension size caused by subtracting 5 from 1 for ‘conv1d_1/conv1d’ (op: ‘Conv2D’) with input shapes: [?,1,1,30], [1,5,30,10]. During handling of the above exception, another exception occurred: Traceback (most recent call last): File “/home/train.py”, line 113, in model = train_model(X_train, Y_train, X_test, Y_test) File “/home/train.py”, line 31, in train_model model.add(Conv1D(10, kernel_size=5, activation=‘relu’)) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/training/tracking/base.py”, line 457, in _method_wrapper result = method(self, *args, **kwargs) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/sequential.py”, line 203, in add output_tensor = layer(self.outputs[0]) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/base_layer.py”, line 773, in call outputs = call_fn(cast_inputs, *args, **kwargs) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/keras/layers/convolutional.py”, line 209, in call outputs = self._convolution_op(inputs, self.kernel) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/ops/nn_ops.py”, line 1135, in call return self.conv_op(inp, filter) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/ops/nn_ops.py”, line 640, in call return self.call(inp, filter) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/ops/nn_ops.py”, line 239, in call name=self.name) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/ops/nn_ops.py”, line 228, in _conv1d name=name) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/util/deprecation.py”, line 574, in new_func return func(*args, **kwargs) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/util/deprecation.py”, line 574, in new_func return func(*args, **kwargs) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/ops/nn_ops.py”, line 1682, in conv1d name=name) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/ops/gen_nn_ops.py”, line 969, in conv2d data_format=data_format, dilations=dilations, name=name) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/framework/op_def_library.py”, line 742, in _apply_op_helper attrs=attr_protos, op_def=op_def) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/framework/func_graph.py”, line 595, in _create_op_internal compute_device) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/framework/ops.py”, line 3322, in _create_op_internal op_def=op_def) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/framework/ops.py”, line 1786, in init control_input_ops) File “/usr/local/lib/python3.7/site-packages/tensorflow_core/python/framework/ops.py”, line 1622, in _create_c_op raise ValueError(str(e))

ValueError: Negative dimension size caused by subtracting 5 from 1 for ‘conv1d_1/conv1d’ (op: ‘Conv2D’) with input shapes: [?,1,1,30], [1,5,30,10]. Application exited with code 1 Job failed (see above)

What is going on ? Can you help me to fix these 2 issues ?

Thank you,

Regards,

Lionel