Hey @krukiou, not for the whole dataset in one go at the moment, but you can download individual samples as WAV through the API (getsampleasaudio):

`https://studio.edgeimpulse.com/v1/api/YOUR_PROJECT_ID/raw-data/YOUR_SAMPLE_ID/wav?axisIx=0`

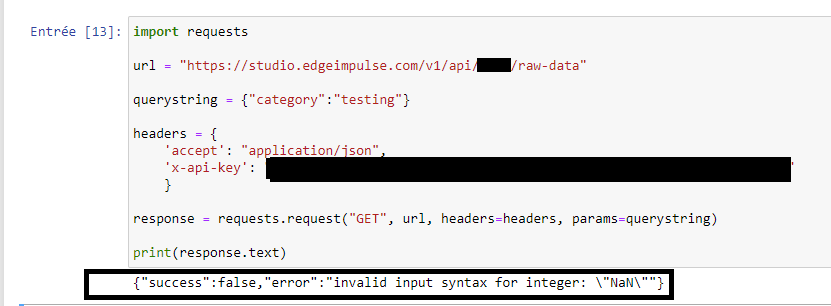
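A minimal sketch of calling that endpoint from TypeScript. The helper names are hypothetical, and it assumes the API key is passed in an `x-api-key` header; check the API reference for your project before relying on it.

```typescript
// Hypothetical helpers for the getsampleasaudio endpoint.
// Assumption: authentication uses an API key in the `x-api-key` header.
const API_BASE = 'https://studio.edgeimpulse.com/v1/api';

// Build the WAV download URL; axisIx selects the axis (0 for mono audio).
function sampleWavUrl(projectId: number, sampleId: number, axisIx = 0): string {
    return `${API_BASE}/${projectId}/raw-data/${sampleId}/wav?axisIx=${axisIx}`;
}

// Fetch the WAV bytes for a single sample.
async function downloadSampleAsWav(apiKey: string, projectId: number, sampleId: number): Promise<Uint8Array> {
    const res = await fetch(sampleWavUrl(projectId, sampleId), {
        headers: { 'x-api-key': apiKey },
    });
    if (!res.ok) {
        throw new Error(`Download of sample ${sampleId} failed: HTTP ${res.status}`);
    }
    return new Uint8Array(await res.arrayBuffer());
}
```

For example, `await downloadSampleAsWav(apiKey, projectId, sampleId)` returns the raw file bytes, ready to be written to disk as a `.wav` file.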

You can quickly get all the sample IDs through the listsamples API call. Hope this helps! If you run into anything, I’m happy to hack something up to download everything in one go.
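A rough sketch of collecting every sample ID with listsamples, one page at a time. The query parameters (`category`, `limit`, `offset`), the response shape (`{ success, error?, samples: [{ id }] }`) and the `x-api-key` header are assumptions here, not confirmed by this post; the function names are hypothetical.

```typescript
// Assumed response shape of the listsamples call (only the fields used here).
interface ListSamplesResponse {
    success: boolean;
    error?: string;
    samples: { id: number }[];
}

// Hypothetical URL builder; assumes category/limit/offset query parameters.
function listSamplesUrl(projectId: number, category: string, limit: number, offset: number): string {
    const params = new URLSearchParams({
        category,
        limit: String(limit),
        offset: String(offset),
    });
    return `https://studio.edgeimpulse.com/v1/api/${projectId}/raw-data?${params.toString()}`;
}

// Page through a category (e.g. 'training' or 'testing') and collect all sample IDs.
async function listAllSampleIds(apiKey: string, projectId: number, category = 'training', pageSize = 1000): Promise<number[]> {
    const ids: number[] = [];
    for (let offset = 0; ; offset += pageSize) {
        const res = await fetch(listSamplesUrl(projectId, category, pageSize, offset), {
            headers: { 'x-api-key': apiKey },
        });
        const body = (await res.json()) as ListSamplesResponse;
        if (!res.ok || !body.success) {
            throw new Error(`listsamples failed: ${body.error ?? `HTTP ${res.status}`}`);
        }
        ids.push(...body.samples.map(s => s.id));
        // A page shorter than pageSize means there is nothing left to fetch.
        if (body.samples.length < pageSize) {
            return ids;
        }
    }
}
```

Stopping on a short page avoids depending on a total-count field, which keeps the sketch to the fields it actually assumes.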

Also, maybe useful for someone in the future: this is how we go from the decoded CBOR sample data to a WAV file (in TypeScript):

```typescript
// Build a 16-bit, mono, PCM WAV file from raw sample values.
// intervalMs: time between samples in ms (e.g. 0.0625 for 16 kHz); data: int16 sample values.
function buildWavFileBuffer(intervalMs: number, data: number[]): Uint8Array {
    // let's build a WAV file!
    const wavFreq = Math.round(1 / intervalMs * 1000); // sample rate in Hz
    const dataSize = data.length * 2;                  // 2 bytes per 16-bit sample
    const riffSize = 36 + dataSize;                    // RIFF chunk size = total file size minus 8 bytes
    const srBpsC8 = (wavFreq * 16 * 1) / 8;            // byte rate = sampleRate * bitsPerSample * channels / 8

    /* tslint:disable:no-bitwise */
    const h = [
        0x52, 0x49, 0x46, 0x46, // RIFF
        riffSize & 0xff, (riffSize >> 8) & 0xff, (riffSize >> 16) & 0xff, (riffSize >> 24) & 0xff,
        0x57, 0x41, 0x56, 0x45, // WAVE
        0x66, 0x6d, 0x74, 0x20, // "fmt "
        0x10, 0x00, 0x00, 0x00, // length of format data (16)
        0x01, 0x00,             // type of format (1 = PCM)
        0x01, 0x00,             // number of channels (1)
        wavFreq & 0xff, (wavFreq >> 8) & 0xff, (wavFreq >> 16) & 0xff, (wavFreq >> 24) & 0xff, // sample rate
        srBpsC8 & 0xff, (srBpsC8 >> 8) & 0xff, (srBpsC8 >> 16) & 0xff, (srBpsC8 >> 24) & 0xff, // byte rate
        0x02, 0x00,             // block align (channels * 2 bytes)
        0x10, 0x00,             // bits per sample (16)
        0x64, 0x61, 0x74, 0x61, // data
        dataSize & 0xff, (dataSize >> 8) & 0xff, (dataSize >> 16) & 0xff, (dataSize >> 24) & 0xff,
    ];
    /* tslint:enable:no-bitwise */
    const headerArr = new Uint8Array(h); // 44-byte header

    // Int16Array uses the platform byte order; WAV expects little-endian,
    // which matches x86 and ARM in practice. Values outside the int16 range wrap around.
    const bodyArr = Int16Array.from(data);

    const tmp = new Uint8Array(headerArr.byteLength + bodyArr.byteLength);
    tmp.set(headerArr, 0);
    tmp.set(new Uint8Array(bodyArr.buffer), headerArr.byteLength);
    return tmp;
}
```

Save the returned buffer (`tmp`) as a `.wav` file and you’ll have a playable WAV file.
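The function takes the sample interval and the raw values, which come out of the CBOR file once it has been decoded with a CBOR library. A small sketch of that step, assuming the Edge Impulse data acquisition format (`payload.interval_ms` and `payload.values`, where `values` is either a flat array for a single axis or one array per reading); `extractAxis` and `DecodedSample` are hypothetical names.

```typescript
// Assumed shape of a decoded sample (only the fields used here).
interface DecodedSample {
    payload: {
        interval_ms: number;
        values: (number | number[])[];
    };
}

// Pull the interval and one axis of values out of a decoded sample.
// axisIx only matters when each reading is an array (multi-axis data).
function extractAxis(sample: DecodedSample, axisIx = 0): { intervalMs: number; data: number[] } {
    const { interval_ms, values } = sample.payload;
    const data = values.map(v => (Array.isArray(v) ? v[axisIx] : v));
    return { intervalMs: interval_ms, data };
}
```

For example, `const { intervalMs, data } = extractAxis(decoded);` followed by `buildWavFileBuffer(intervalMs, data)` gives the WAV bytes for the first axis.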