Question/Issue: Cannot detect the correct gesture using the USB camera on Raspberry Pi 4

Project ID: 400673

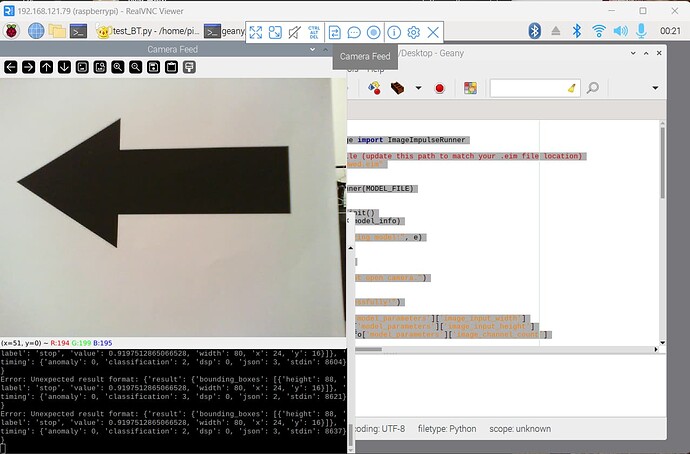

**Context/Use case: I am new to coding, so most of the code I am using right now is from ChatGPT. I am working on an object detection project with FOMO and a Raspberry Pi 4. I have trained the model and successfully connected my Raspberry Pi to Edge Impulse using "edge-impulse-linux". I use a Linux (AARCH64) deployment file. I tried both the Quantized (int8) model and the Unoptimized (float32) model, but neither works for me. I also tried changing "resized_frame = resized_frame.astype(np.float32)" from float32 to int8, but I am not sure whether this has any effect on the .eim file. The script prints the error "Unexpected result format", and the output keeps showing only the label "stop", even when I show a "Front" gesture to the camera.
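
On the float32 versus int8 question, here is a minimal sketch of the input format as I understand it. It assumes, based on my reading of the image helper in the Edge Impulse Linux Python SDK, that the runner expects one packed 0xRRGGBB integer per pixel rather than normalized floats. If that holds, the dtype cast in my script would not be the right knob to turn. The function name `pack_rgb_features` and the sample pixels are hypothetical and only illustrate the packing.

```python
def pack_rgb_features(pixels):
    """Pack (r, g, b) tuples with 0-255 values into one integer per pixel.

    Assumption: this mirrors the 0xRRGGBB layout used by the SDK's image
    helper; it is an illustration, not the SDK's own code.
    """
    return [(r << 16) + (g << 8) + b for r, g, b in pixels]


# Hypothetical sample pixels: pure red, pure green, pure blue, and a mixed color.
sample_pixels = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (18, 52, 86)]
features = pack_rgb_features(sample_pixels)
print([hex(value) for value in features])
```

Note that OpenCV delivers frames in BGR order, so a frame would need `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)` before any packing like this.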

Here is the code:

    import cv2
    import numpy as np
    from edge_impulse_linux.image import ImageImpulseRunner

    # Path to your .eim model file (update this path to match your .eim file location)
    MODEL_FILE = "/home/pi/arrowwed.eim"

    def main():
        runner = ImageImpulseRunner(MODEL_FILE)
        try:
            model_info = runner.init()
            print("Model info:", model_info)
        except Exception as e:
            print("Error initializing model:", e)
            return

        cap = cv2.VideoCapture(0)
        if not cap.isOpened():
            print("Error: Could not open camera.")
            return
        print("Camera opened successfully!")

        input_width = model_info['model_parameters']['image_input_width']
        input_height = model_info['model_parameters']['image_input_height']
        input_channels = model_info['model_parameters']['image_channel_count']

        try:
            while True:
                ret, frame = cap.read()
                if not ret:
                    print("Error: Could not read frame.")
                    break

                # Display the camera feed
                cv2.imshow("Camera Feed", frame)

                # Resize the image to match the model input dimensions
                resized_frame = cv2.resize(frame, (input_width, input_height))

                # Convert image to the format required by the model (if needed)
                if input_channels == 1:
                    resized_frame = cv2.cvtColor(resized_frame, cv2.COLOR_BGR2GRAY)
                    resized_frame = resized_frame.reshape(input_height, input_width, input_channels)
                else:
                    resized_frame = resized_frame.reshape(input_height, input_width, input_channels)

                # Normalize the image
                resized_frame = resized_frame.astype(np.float32) / 255.0

                # Flatten the image
                features = resized_frame.flatten()

                # Run inference
                try:
                    res = runner.classify(features.tolist())
                    if "classification" in res["result"]:
                        predicted_direction = max(res['result']['classification'], key=res['result']['classification'].get)
                        print("Detected gesture:", predicted_direction)
                    else:
                        print("Error: Unexpected result format:", res)
                except Exception as e:
                    print("Error during classification:", e)
                    break

                # Press 'q' to exit
                if cv2.waitKey(1) & 0xFF == ord('q'):
                    break
        finally:
            cap.release()
            cv2.destroyAllWindows()
            runner.stop()

    if __name__ == "__main__":
        main()
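
Regarding the "Unexpected result format" message, here is a minimal sketch of how the result could be checked for both shapes. It assumes, based on the examples in the Edge Impulse Linux Python SDK, that object detection models such as FOMO return a `bounding_boxes` list (each entry with `label` and `value`) instead of a `classification` dict. The helper name `describe_result` and the sample dicts with their scores are hypothetical.

```python
def describe_result(res):
    """Return a list of (label, score) pairs from a runner result dict.

    Assumption: classification models fill result["classification"] and
    object detection models (FOMO) fill result["bounding_boxes"].
    """
    result = res.get("result", {})
    if "classification" in result:
        scores = result["classification"]
        best = max(scores, key=scores.get)
        return [(best, scores[best])]
    if "bounding_boxes" in result:
        return [(bb["label"], bb["value"]) for bb in result["bounding_boxes"]]
    return []


# Hypothetical results, shaped like the two formats described above.
classification_sample = {"result": {"classification": {"front": 0.9, "stop": 0.1}}}
fomo_sample = {
    "result": {
        "bounding_boxes": [
            {"label": "front", "value": 0.82, "x": 24, "y": 40, "width": 8, "height": 8}
        ]
    }
}

print(describe_result(classification_sample))
print(describe_result(fomo_sample))
```

If this assumption is right, my script would always fall into the `else` branch with a FOMO model, because it only looks for the `classification` key.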

The picture when I run the code:

I am placing the camera on my left side, looking down from the top, so the arrow in the picture looks like it points in another direction.

**