What could cause FOMO to miss an object?

Background Statement

I previously ran a similar experiment. The only difference may be the screw location, and even that was very close to its position in the earlier run. In that experiment, a second, erroneous Bounding Box (BB) was detected. In post 8 of the thread on that experiment, user matkelcey gave advice on how to fix the erroneous BB issue.

These experiments run repeated inferences on the same screw, so one would expect that:

- All of the FOMO prediction scores would be similar, and

- A FOMO detection would occur at each and every inference (within the bounds of the model's accuracy)

Problem Statement

In this experiment:

- Good news: Every inference that produced a detection found only a single Bounding Box (BB); no extraneous BBs appeared.

- Bad news: 40% of the time FOMO made no detection, even though an object it was trained on was present. Based on the accuracy reported in EI Studio, I would expect misses about 10% of the time. (In fact, I would expect fewer than 10% misses, since neither the object nor the lighting changed.)
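As a rough sanity check on the gap between 40% and 10%, one can ask how likely 40 or more misses out of 100 inferences would be if each inference independently missed with 10% probability. This is a minimal sketch under several assumptions: the 100-inference run length is hypothetical (the post does not state it), treating the Studio accuracy as a per-inference miss rate is itself an approximation, and the binomial model assumes misses are independent, which the correlated scores described below would violate.

```python
from math import comb


def binomial_tail(n: int, k: int, p: float) -> float:
    """Return P(X >= k) for X ~ Binomial(n, p)."""
    # Sum the probability mass for k, k+1, ..., n misses.
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical run: 100 inferences with 40 misses (the 40% rate above),
# assuming a 10% per-inference miss probability and independent misses.
N_INFERENCES = 100
OBSERVED_MISSES = 40
ASSUMED_MISS_RATE = 0.10

p_value = binomial_tail(N_INFERENCES, OBSERVED_MISSES, ASSUMED_MISS_RATE)
print(f"P(>= {OBSERVED_MISSES} misses) = {p_value:.2e}")
```

A vanishingly small tail probability suggests the misses are not just the expected error rate showing up by chance, although with correlated scores the independence assumption behind this test is also suspect.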

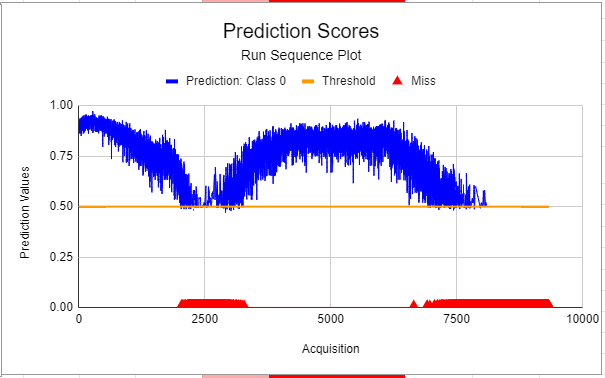

The run sequence chart (RSC) in Figure 1 shows how the prediction scores varied over time. Until someone proves me wrong, I will assume that the prediction scores follow a Gaussian distribution centered on the F1 score shown on the Object Detection page of the Edge Impulse (EI) Studio Impulse Design. The RSC starts near that F1 score, then drops until it reaches EI_CLASSIFIER_OBJECT_DETECTION_THRESHOLD, at which point FOMO reports that no objects are present. Then, oddly enough, the model recovers, only to decline back into a "no object found" state.
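Taking the Gaussian assumption at face value, the expected miss fraction is simply the probability mass that falls below the detection threshold. A minimal sketch of that calculation follows; the mean, spread, and the 0.5 threshold are hypothetical placeholders, to be replaced by the model's actual EI_CLASSIFIER_OBJECT_DETECTION_THRESHOLD and the observed score spread.

```python
from math import erf, sqrt


def expected_miss_rate(mean_score: float, std_score: float, threshold: float) -> float:
    """Fraction of inferences whose score falls below `threshold`,
    assuming scores ~ Normal(mean_score, std_score) and independent draws."""
    z = (threshold - mean_score) / (std_score * sqrt(2))
    # Normal CDF evaluated at the threshold, written via the error function.
    return 0.5 * (1 + erf(z))


# Hypothetical values: scores centered on 0.90 with spread 0.05,
# and an assumed detection threshold of 0.5.
print(expected_miss_rate(0.90, 0.05, 0.5))
```

Under this model, a 40% miss rate would require the mean score to sit only about a quarter of a standard deviation above the threshold. Independent Gaussian draws would also scatter misses randomly rather than producing a slow decline and recovery.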

It seems odd that the prediction scores are correlated from one inference to the next.
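One way to quantify that correlation is the lag-1 sample autocorrelation of the score sequence. Independent inferences should give a value near 0 (roughly within ±2/√n for n scores), while a slow drift pushes it toward 1. A minimal sketch in plain Python; the example score list is made up and is not the data behind the figures.

```python
def autocorrelation(scores: list[float], lag: int = 1) -> float:
    """Sample autocorrelation of a score series at the given lag."""
    n = len(scores)
    if not 0 < lag < n:
        raise ValueError("lag must be between 1 and len(scores) - 1")
    mean = sum(scores) / n
    dev = [s - mean for s in scores]
    denom = sum(d * d for d in dev)
    if denom == 0:
        raise ValueError("scores are constant; autocorrelation is undefined")
    # Covariance between each score and the score `lag` inferences later.
    num = sum(dev[i] * dev[i + lag] for i in range(n - lag))
    return num / denom


# Hypothetical drifting scores: decline, then partial recovery.
drifting = [0.90, 0.85, 0.80, 0.70, 0.60, 0.70, 0.80, 0.85]
print(autocorrelation(drifting))
```

Plotting this value for several lags (a correlogram) would show whether the decline-and-recovery pattern has a characteristic period.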

Figure 1. Run sequence chart showing correlated prediction scores, as well as many images in which FOMO failed to detect an object.

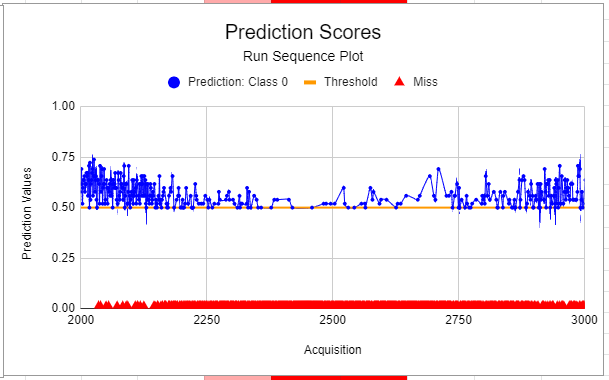

Figure 2. A zoomed-in view showing how FOMO “misses” become more frequent, then less frequent, over time.

Questions

Q1: What could cause FOMO to miss an object?

Q2: What could cause the correlation in the prediction scores?