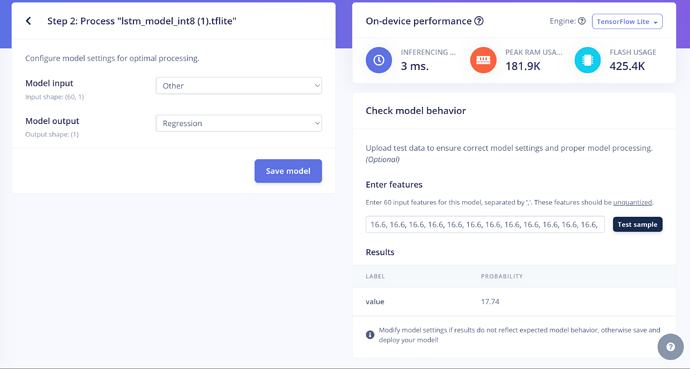

I am deploying a BYOM quantized (int8) LSTM model for prediction on a Raspberry Pi 5. Attached is the "Check model behavior" result from Edge Impulse at deployment time. When I build and run the C++ library on the Raspberry Pi 5, the prediction is 21.64 instead of the expected 17.74. What could be the issue? The input buffer is initialized with the same values shown in the screenshot.

My float32 model is too big to deploy, so I have to use the int8 model. I followed the same structure as the "Edge Impulse Example: stand-alone inferencing (C++)", but the results still don't match the expected result in the attached screenshot. Following a reply I received, I did not quantize the input myself (I kept it as float32), even though the input datatype in the metadata file is set to int8.

Any help is appreciated.