I trained my model for pattern recognition on the website. After training, I downloaded the C++ library of my model to my computer and got a zip file with all the files. I also cloned another repo, the example-edge-inference library from the C++ standalone_c++_example. I merged the contents of the downloaded zip file with the standalone example and followed the tutorial on the website step by step. It works perfectly well on my laptop.

Then I transferred the folder to my Raspberry Pi 4 (RPi 4) using scp.

I could build my model on the RPi using this command:

$ APP_CAMERA=1 make -j

However, when I use this command to build on the RPi, I get an error:

$ APP_CAMERA=1 TARGET_LINUX_X86=1 USE_FULL_TFLITE=1 make -j

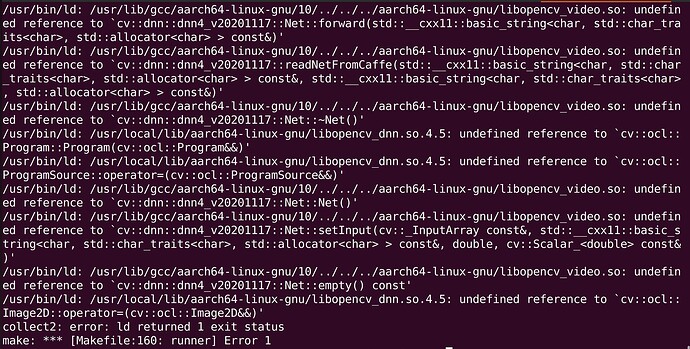

The error is shown in the screenshot above.

Hi @prarthana

This command issued on RPi4:

$ APP_CAMERA=1 TARGET_LINUX_X86=1 USE_FULL_TFLITE=1 make -j

This means you are trying to cross-compile the app for an x86 CPU (for example, your laptop). The correct command is:

APP_CAMERA=1 TARGET_LINUX_AARCH64=1 USE_FULL_TFLITE=1 make -j

Also, make sure you have the OpenCV library installed. You can install it with the system package manager or with the script from our repository.
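The target flag has to match the CPU the app will run on: TARGET_LINUX_X86=1 produces an x86 build, while an RPi 4 running a 64-bit OS needs TARGET_LINUX_AARCH64=1. As a small sketch, assuming GCC or Clang (both predefine `__aarch64__` and `__x86_64__`), the choice between just these two flags can be made at compile time. The function name `makefile_target_flag` is hypothetical and not part of the Edge Impulse example:

```cpp
#include <string_view>

// Hypothetical helper: returns the Makefile target flag matching the CPU this
// file is compiled for, based on architecture macros predefined by GCC/Clang.
// Only the two targets discussed in this thread are covered; any other
// architecture (for example 32-bit Raspberry Pi OS, where __aarch64__ is not
// defined) returns an empty string_view.
constexpr std::string_view makefile_target_flag() {
#if defined(__aarch64__)
    return "TARGET_LINUX_AARCH64=1";  // e.g. 64-bit Raspberry Pi OS on an RPi 4
#elif defined(__x86_64__)
    return "TARGET_LINUX_X86=1";      // e.g. a typical x86-64 Linux laptop
#else
    return {};                        // not covered here: check the Makefile
#endif
}
```

Compiled and printed on the Pi itself, this should yield TARGET_LINUX_AARCH64=1 on a 64-bit OS; an empty result means the build is for some other architecture and the right flag has to be looked up in the Makefile.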

Best regards,

Mateusz


Hello,

That was a typo in my question, sorry about that. I actually used the correct flag for my RPi architecture, i.e. APP_CAMERA=1 TARGET_LINUX_AARCH64=1 USE_FULL_TFLITE=1 make -j.

Thanks, Prarthana Sigedar

Hi @prarthana

Did that solve your problem, or does the last command you posted still cause an issue?

Kind regards,

Mateusz

This is still an issue, @Mateusz.

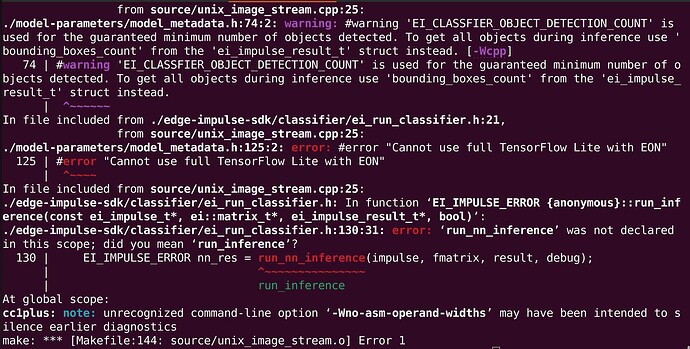

I also get this error when I try to use hardware acceleration.

Hi @prarthana

The error says:

Cannot use full TensorFlow Lite with EON

It looks like you exported an EON-compiled C++ model, so you cannot build the app with USE_FULL_TFLITE=1.

Best regards,

Mateusz

Is there a way to export the model without the EON Compiler so that I can build with full TensorFlow Lite (USE_FULL_TFLITE=1)? If so, how?

I am trying everything I can to improve the FPS on the RPi 4. Currently, when I integrate FOMO classification into my own code, I get around 15 FPS on the RPi. However, when I run only the compiled classification code, without my integration, I get around 30 FPS.

I cannot explain this discrepancy, so I was trying to use hardware acceleration on the RPi 4.
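The FPS figures themselves narrow things down: FPS = 1000 / frame time in ms, so 30 FPS is about 33.3 ms per frame and 15 FPS is about 66.7 ms, meaning the integration adds roughly 33 ms per frame outside the standalone classifier. Below is a minimal C++17 sketch, assuming each stage of your own loop can be wrapped in a lambda, that shows where that extra time goes. `StageTimer`, `fps_from_frame_ms` and the stage names are hypothetical; nothing here is Edge Impulse API:

```cpp
#include <cassert>
#include <chrono>
#include <cstdio>
#include <map>
#include <string>

// Converts a per-frame time in milliseconds to frames per second.
double fps_from_frame_ms(double frame_ms) {
    assert(frame_ms > 0.0);
    return 1000.0 / frame_ms;
}

// Accumulates wall-clock time per named stage of a processing loop.
class StageTimer {
public:
    using Clock = std::chrono::steady_clock;

    // Runs fn() and adds its elapsed time to the given stage.
    template <typename Fn>
    void time(const std::string& stage, Fn&& fn) {
        auto start = Clock::now();
        fn();
        auto end = Clock::now();
        totals_ms_[stage] +=
            std::chrono::duration<double, std::milli>(end - start).count();
    }

    // Call once per processed frame.
    void end_frame() { ++frames_; }

    // Average milliseconds per frame spent in one stage (0 if never timed).
    double avg_ms(const std::string& stage) const {
        auto it = totals_ms_.find(stage);
        if (it == totals_ms_.end() || frames_ == 0) return 0.0;
        return it->second / frames_;
    }

    // Prints the per-stage averages and the FPS implied by the timed stages.
    void report() const {
        double sum_ms = 0.0;
        for (const auto& [stage, total] : totals_ms_) {
            double avg = frames_ ? total / frames_ : 0.0;
            sum_ms += avg;
            std::printf("%-12s %8.2f ms/frame\n", stage.c_str(), avg);
        }
        if (sum_ms > 0.0) {
            std::printf("implied FPS: %.1f\n", fps_from_frame_ms(sum_ms));
        }
    }

private:
    std::map<std::string, double> totals_ms_;
    long frames_ = 0;
};
```

In the main loop, each step would be wrapped, e.g. `timer.time("inference", [&] { /* call the classifier */ });`, with `timer.end_frame()` called once per frame. The implied FPS only covers the stages you timed, so any large gap from the measured FPS points at untimed code.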

Thank you

Hi @prarthana, I’m attempting the same thing and have reproduced this issue. Have you figured out how to get hardware acceleration working yet?

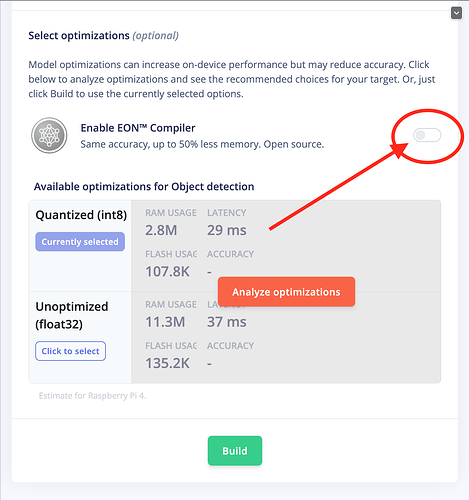

@prarthana I figured it out: there is a switch on the build page that enables or disables the EON Compiler. Turn it off and you’ll be able to compile.


Thanks a lot. I will look into it and get back.

Hello @inteladata

I turned off the EON Compiler and first tried it on my laptop, building with the hardware-acceleration command:

$ APP_CAMERA=1 TARGET_LINUX_X86=1 USE_FULL_TFLITE=1 make -j

This fixed the error I was getting before. However, inference on the laptop became much slower: the inference time went from about 30 ms to approximately 300 ms. I do not understand the reason for such a large difference.
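When comparing two builds like this, it helps to make sure both are measured the same way, since a single timed run can include one-off setup costs. Below is a minimal C++17 sketch, assuming the inference call can be wrapped in a callable (`benchmark`, `LatencyStats` and `run_once` are hypothetical names standing in for whatever call your app makes). It discards a few warm-up runs, then reports mean, minimum and maximum latency:

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <vector>

struct LatencyStats {
    double mean_ms;
    double min_ms;
    double max_ms;
};

// Calls run_once() `warmup` times without timing, then times `runs` calls.
template <typename Fn>
LatencyStats benchmark(Fn&& run_once, int warmup, int runs) {
    assert(warmup >= 0 && runs > 0);
    for (int i = 0; i < warmup; ++i) run_once();  // untimed warm-up

    std::vector<double> samples;
    samples.reserve(static_cast<std::size_t>(runs));
    for (int i = 0; i < runs; ++i) {
        auto start = std::chrono::steady_clock::now();
        run_once();
        auto end = std::chrono::steady_clock::now();
        samples.push_back(
            std::chrono::duration<double, std::milli>(end - start).count());
    }

    double sum = 0.0;
    for (double s : samples) sum += s;
    auto [mn, mx] = std::minmax_element(samples.begin(), samples.end());
    return {sum / runs, *mn, *mx};
}
```

Running this with the same `warmup` and `runs` values in both builds (EON and non-EON) on the same machine gives a like-for-like comparison. It will not explain the difference by itself, but it rules out one-off initialization as the cause.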

Thanks,

Prarthana Sigedar