I created an image recognition model in Edge Impulse with a dataset of 1000+ photos, which I took and augmented myself, of 6 different pills. The idea is for a person to use their phone to identify a pill… because you always forget which pill you are looking at if it’s not in a pill bottle.

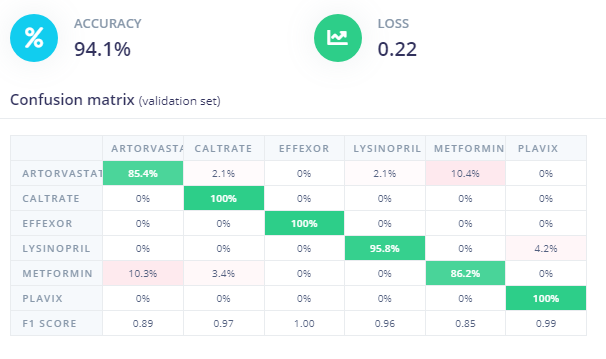

My model has an accuracy of 94.1% with a 0.22 loss, and the test has an accuracy of 93.58%. And my confusion matrix looks pretty good.

But whenever I go to use my phone (and I can see it’s connected correctly) and switch to classification mode, almost every single “photo” I take of a pill is completely wrong in how it’s classified. Even the ones that are 100% accurate in the confusion matrix!

Yes, these are the same pills, same background, same camera, same lighting I used to create my dataset. I feel like I’m missing something big because of the horrible results I am getting when classifying the pill images with my phone. Help!

I’ve made my Pill Identifyer project public. # 64036 Thank you in advance for any and all help.

I’ve tried to classify the pills on my phone again today. And I’m still getting horrible results. So I’m attaching my confusion matrix and three screenshots of my phone’s results for you to see. Each of these pills got 100% accuracy readings on the confusion matrix but are not classified correctly at all with my phone.

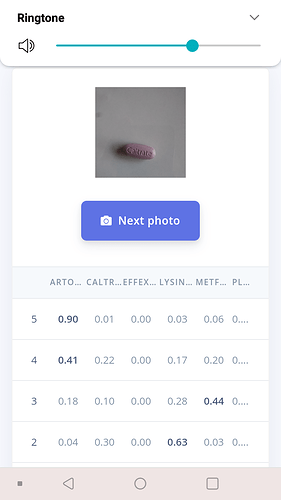

My phone incorrectly classifying the Caltrate pill

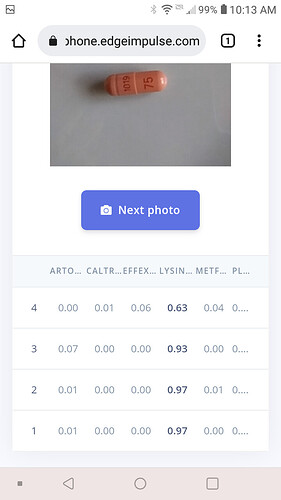

My phone incorrectly classifying the Effexor pill

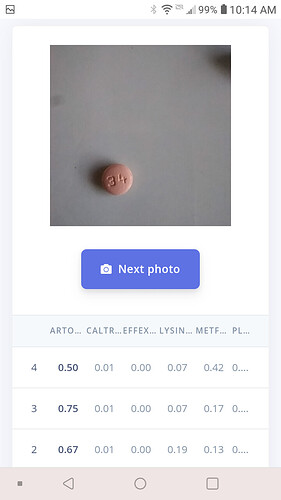

My phone incorrectly classifying the Plavix pill

I forgot to add the URL to get to the public project

Hi @sarakubik, looking through your dataset, you seem to have centered (almost) all the pills nicely and taken the photos at the same zoom level (then, I guess, ran an augmentation script for rotation and brightness). My guess is that the model is heavily overfit on those exact dimensions of the pills. Because the same framing appears in both the training and test sets, you get great accuracy on both, but not in real-world testing. Some things that would help me understand this better:

- Add some unknown data (so no pill in there). This helps the model generalize a lot.

- Add some photos of pills taken with your phone to the test set, captured the same way you do the classification on the phone right now, so we can more easily see whether the model works properly. This should take only a few minutes if you already have classification running on the phone.
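If the model really is overfit on one zoom level and a centered pill, one cheap experiment is to vary the framing during augmentation. Below is a minimal sketch in plain Python that only computes random crop boxes. The function name `random_crop_box`, its parameters, and the 0.5 to 1.0 scale range are made up for illustration and are not part of Edge Impulse or the original augmentation script.

```python
import random


def random_crop_box(width, height, min_scale=0.5, max_scale=1.0, rng=None):
    """Return (left, top, right, bottom) for a randomly sized and placed crop.

    The crop keeps the image's aspect ratio, spans between min_scale and
    max_scale of each side, and always stays inside the image.
    All names and defaults here are illustrative assumptions.
    """
    if not 0 < min_scale <= max_scale <= 1:
        raise ValueError("need 0 < min_scale <= max_scale <= 1")
    rng = rng or random.Random()

    # Random zoom: a smaller scale means a tighter crop (pill appears larger).
    scale = rng.uniform(min_scale, max_scale)
    crop_w = max(1, round(width * scale))
    crop_h = max(1, round(height * scale))

    # Random position: the pill is no longer guaranteed to be centered.
    left = rng.randint(0, width - crop_w)
    top = rng.randint(0, height - crop_h)
    return left, top, left + crop_w, top + crop_h


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the boxes are reproducible
    for _ in range(3):
        print(random_crop_box(320, 320, rng=rng))
```

Each box is in (left, upper, right, lower) order, which is the order Pillow's `Image.crop` expects, so the crops could then be resized to the model's input size and added to the training set. With tight crops the pill may be partly cut off, so it is worth checking a few results by eye.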

I also think picking a model with less capacity could help (you have a pretty big custom NN right now). My gut feeling is that using grayscale input and a transfer learning model (e.g. MobileNetV2 0.35) would yield a better real-world result. Adding some data to the test set would help us iterate on that.
