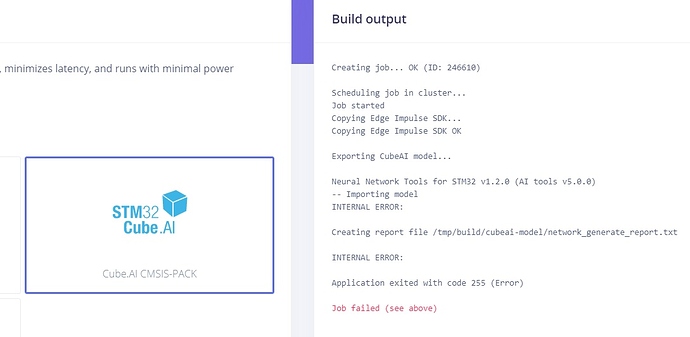

Hi @xky1024, I’m not sure either. I’ve reported this to ST; hopefully they can provide a fix. Alternatively, you can deploy as a C++ library. It will work in CubeMX as well, but you need to set the include paths yourself (example Makefile: https://github.com/edgeimpulse/example-standalone-inferencing/blob/master/Makefile.tflite).

Got a response from ST that they will fix the issue in Cube.AI 5.2, but this is not out yet. Will add a note to the deployment option.

Edit: I’m not entirely sure why or how, but retraining the model and exporting it to Cube.AI works. I’ve done that on your project, so you can retry the deployment now.

Yes, retraining the model and exporting it to Cube.AI works.

Thank you.

Hi Jan, I’m running into the same problem: I can successfully build an OpenMV library, but not a CMSIS-PACK library. I’ve tried retraining and building again and got the same error. You mention “exporting” above. How would I export the model? I’m using MobileNetV2 0.05, trained on my own images.

I’ve successfully deployed a model on the OpenMV H7 Plus, thanks to your user-friendly platform. Thank you very much. I would now like to create a smaller model to deploy on my STM32F429ZI using STM32Cube.AI.

@GranvillePaulse, unfortunately this is a bug in Cube.AI that is not fixed in any public release yet (ST says it is fixed in STM32Cube.AI 5.2, which is not publicly available yet). You can export as a C++ library, which will work in CubeIDE as well, although it requires some manual work; see here.

Thank you very much. I will give this a try.