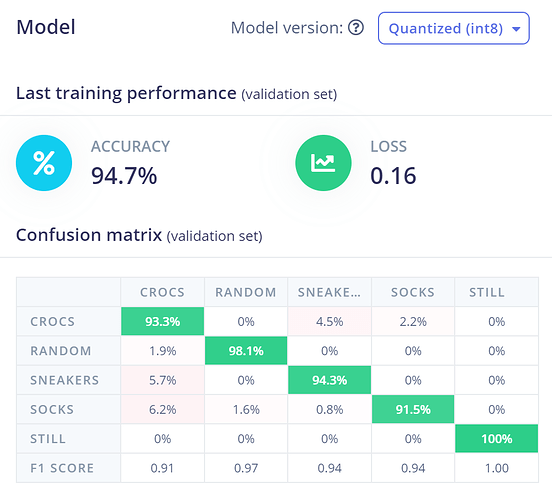

As part of creating a hands-on course in machine learning, I wanted to see whether a smartphone accelerometer can distinguish between different types of footwear. Without planning anything further, I put my phone in my right sock and walked around for 4 x 10 seconds in each of three conditions: socks only, Crocs sandals, and sneakers, while gathering the data with Edge Impulse (EI). With this fairly limited data and the default settings, I got an excellent accuracy of 94%!

Live classification correctly identified what I was wearing every time, so there was no need to look down at my feet.

Note also that the phone was not taped to or held firmly against my ankle, so it was deliberately not in exactly the same position and orientation every time.

The whole experiment took only about 15 minutes longer than writing this post. I'm very impressed with how easy it is to get started!

Next, I will try another project to distinguish between two different pairs of running shoes, and this time I'll make sure the phone is in the same place every time. I'm almost expecting this experiment to fail (i.e., to give low accuracy), as the shoes might be too similar, but sometimes you learn more from failure than from success! Let's see…

As another element of the same course, I'm going to try to classify different music genres (pop, hard rock, country, classical…), where the new EON Tuner should be a help.