Hey guys,

I'm trying to replicate the "Faucet Tutorial" from Edge Impulse, but in STM32CubeIDE. I want to get this one working first so I can move on to a bigger project later. At the moment I'm having trouble getting my Edge Impulse model to work with the input from the microphone. Here's what my code looks like so far:

```cpp
ei_printf("Init Audio\n");

AudioInit.BitsPerSample = 16;
AudioInit.ChannelsNbr = AUDIO_CHANNELS;
AudioInit.Device = AUDIO_IN_DIGITAL_MIC1;
AudioInit.SampleRate = AUDIO_FREQUENCY_16K; // alternatives: AUDIO_FREQUENCY_11K, AUDIO_SAMPLING_FREQUENCY
AudioInit.Volume = 100;                     // alternative: 32

int32_t AudioInitRet = BSP_AUDIO_IN_Init(AUDIO_INSTANCE, &AudioInit);
if (AudioInitRet != BSP_ERROR_NONE) {
    ei_printf("Error Audio Init (%ld)\r\n", AudioInitRet);
}
else {
    ei_printf("OK Audio Init\t(Audio Freq=%lu)\r\n", (unsigned long) AudioInit.SampleRate);
}
/* USER CODE END 2 */

/* Infinite loop */
/* USER CODE BEGIN WHILE */
while (1)
{
    /* USER CODE END WHILE */
    int32_t ret2 = BSP_AUDIO_IN_Record(AUDIO_INSTANCE, (uint8_t *) ram_buffer, 2048U); // alternative: 64U, max 4095U
    if (ret2 != BSP_ERROR_NONE) {
        ei_printf("Error Audio Record (%ld)\n", ret2);
    }
    else {
        // Dump the recorded samples. The bound is the element count (not the byte count),
        // assuming ram_buffer is declared as an array rather than a pointer.
        for (uint32_t ui = 0; ui < sizeof(ram_buffer) / sizeof(ram_buffer[0]); ui++)
        {
            ei_printf("%d\t", ram_buffer[ui]);
        }
        ei_printf("OK Audio Record\n");

        // Describe the audio data to the classifier
        signal_t signal;
        signal.total_length = TOTAL_AUDIO_SIZE; // 16000
        signal.get_data = &ei_microphone_audio_signal_get_data;
        // signal.get_data is a callback, not an integer, so it cannot be printed with %d

        ei_impulse_result_t result = { 0 };

        ei_printf("run_classifier_continuous\n");
        EI_IMPULSE_ERROR r = run_classifier_continuous(&signal, &result, true);
        if (r != EI_IMPULSE_OK) {
            ei_printf("ERR: Failed to run classifier (%d)\n", r);
        }
        else {
            // Print the predictions
            ei_printf("Predictions (DSP: %d ms., Classification: %d ms., Anomaly: %d ms.):\n",
                      result.timing.dsp, result.timing.classification, result.timing.anomaly);
            for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++) {
                ei_printf("%s: ", result.classification[ix].label);
                ei_printf_float(result.classification[ix].value);
                ei_printf("\n\n\n");
            }
        }
    }
}
```
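The `ei_microphone_audio_signal_get_data` callback assigned to `signal.get_data` is not shown here. As a point of comparison, here is a minimal sketch of what a callback of that shape (offset, length, output pointer) might look like. It assumes the recorded audio sits in a buffer of 16-bit PCM samples; the names `audio_buffer`, `AUDIO_BUFFER_LEN` and `audio_signal_get_data` are made up for illustration, and the samples are cast to float without any scaling, which may or may not be what the model expects.

```cpp
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

// Hypothetical buffer holding one window of 16-bit PCM samples from the microphone.
// Both the name and the length (16000 samples) are assumptions for this sketch.
#define AUDIO_BUFFER_LEN 16000
static int16_t audio_buffer[AUDIO_BUFFER_LEN];

// Copies `length` samples starting at `offset` into `out_ptr`, converted to float.
// Returns 0 on success, or -1 if the requested range falls outside the buffer.
int audio_signal_get_data(size_t offset, size_t length, float *out_ptr) {
    assert(out_ptr != NULL);

    // Reject ranges that would read past the end of the buffer.
    if (offset > AUDIO_BUFFER_LEN || length > AUDIO_BUFFER_LEN - offset) {
        return -1;
    }

    // Plain cast, no scaling (assumption: the model expects raw 16-bit values).
    for (size_t i = 0; i < length; i++) {
        out_ptr[i] = (float) audio_buffer[offset + i];
    }
    return 0;
}
```

With a callback like this, `signal.get_data` would point at `audio_signal_get_data` and `signal.total_length` would be the number of samples actually available in the buffer.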

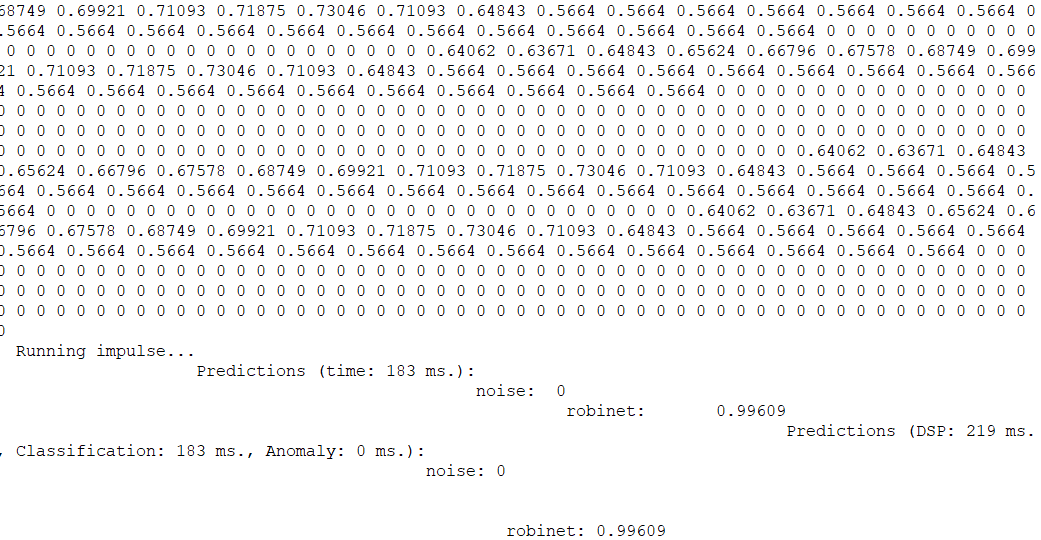

I don’t really know what is missing and my model output looks like that:

In case you're wondering, I'm French, so "robinet" means faucet.

Rene