Hi, I am trying to train a model locally that I got by exporting the NN Classifier as an IPython notebook. The first line contained “Download the data - after extracting features through a processing block - so we can train a machine learning model.” I was wondering how to integrate the processing block with my Python script so I can create a pipeline locally. I saw that there is a GitHub repo for processing blocks, but I couldn’t understand how to use it. I would appreciate it if someone could help me out or point me to a resource that explains how to integrate a processing block locally with the classifier in Python.

Hi @umaid,

When a processing block is run in the Edge Impulse studio, it’s done using a Docker image. Within that image, the generate_features() function is called from within dsp.py. I recommend starting there for seeing how to port a processing block to a local Python script.

For example, here is generate_features() for the spectral analysis DSP block: processing-blocks/dsp.py at 76a5d144086154d7132fc4060cbcce292e768c0b · edgeimpulse/processing-blocks · GitHub.

Hope that helps!

Thanks @shawn_edgeimpulse

I ran dsp-server.py after installing the requirements-block. I think it’s possible to run generate_features remotely using this; correct me if I am wrong. However, when I run dsp-server, I do not understand how to set up the POST request that starts the DSP process so that the features are generated. Can you explain how that is supposed to be done?

Also, there is a parameter “axes”. How is that supposed to be used? What is meant by “Names of the axis (pass as comma separated values)”, and what would it be in a specific case, e.g. audio activity detection? Is there any way I can see an example of the parameters passed, so that I can mimic them for my use case?

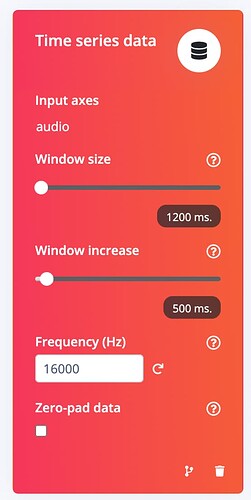

I have been able to use generate_features.py from dsp locally. However, I do not get the same result as in the Edge Impulse Studio. Here are the steps I followed and the parameters I chose in the Studio:

- I used win_size as 1201. I tried 1200, but there was an error stating that win_size needs to be odd. I set the frequency to 16000, as I collected the data using an Arduino Nano 33 BLE Sense.

-

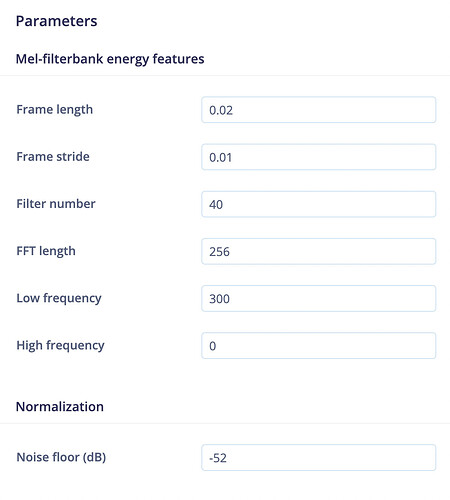

I copied the parameters from this image and plugged them as parameters to generate_features.py

-

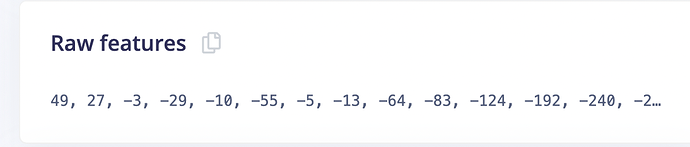

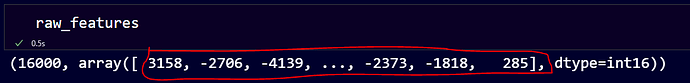

I used the raw features here by copying them as input to generate_features to the parameter “features”. Also I am assuming that this is wav to csv conversion for the frame.

- I ran the following code, where data was a comma-separated string of the raw features:

raw_features = np.array([float(item.strip()) for item in data.split(',')])

generate_features(2, True, raw_features, "axis", 16000, 0.02, 0.01, 40, 256, 300, 0, 101, -52)

- This was the result of the local code:

{'features': [0.11678837984800339, 0.17817948758602142, 0.17817948758602142,
0.1697307676076889, 0.1697307676076889, 0.18250030279159546,
0.21939215064048767, 0.21939215064048767, 0.13011771440505981,
0.13124321401119232, 0.13124321401119232, 0.14022785425186157,
0.14000669121742249, 0.16483275592327118, 0.18450801074504852,
0.1361309438943863, 0.10644878447055817, 0.11290952563285828…

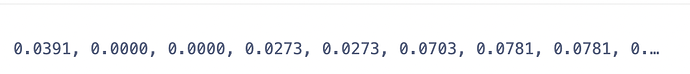

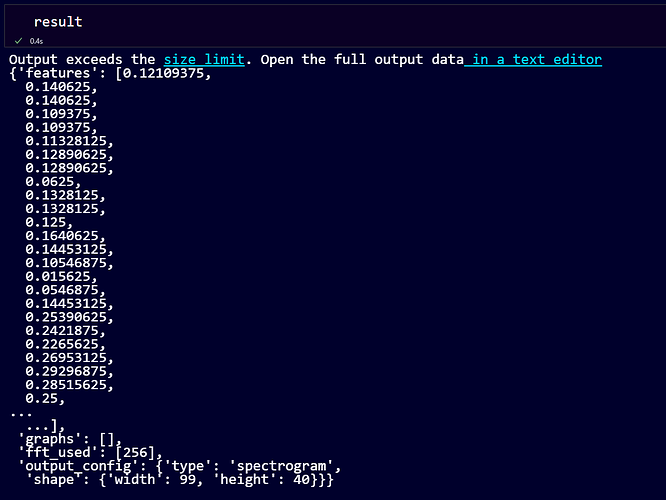

Whereas this is the result from the Edge Impulse Studio:

Can you explain to me what is happening here?

Hi @umaid,

Which dsp.py code are you using? I linked to “spectral-analysis,” which may not be what you want. It looks like you’re using Mel-filterbank energy features, which should be the mfe processing block that comes from here: processing-blocks/mfe at 76a5d144086154d7132fc4060cbcce292e768c0b · edgeimpulse/processing-blocks · GitHub. I recommend trying the generate_features() function in the dsp.py found there to see if its output matches what you are getting in the Studio.
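For anyone following along, here is a minimal sketch of one way to load a block's dsp.py from a local clone without copying it into your own project. The clone location (`processing-blocks/mfe/dsp.py`) and the helper name `load_dsp_module` are assumptions for illustration, and the real dsp.py will still need the block's requirements installed before it imports cleanly.

```python
import importlib.util
import sys
from pathlib import Path


def load_dsp_module(dsp_path):
    """Load a processing block's dsp.py from an explicit file path."""
    dsp_path = Path(dsp_path).resolve()
    # Name the module after its folder so two blocks' dsp.py files do not collide.
    module_name = f"{dsp_path.parent.name}_dsp"
    spec = importlib.util.spec_from_file_location(module_name, dsp_path)
    module = importlib.util.module_from_spec(spec)
    sys.modules[module_name] = module
    spec.loader.exec_module(module)
    return module


# Hypothetical location of a local clone; adjust to your own checkout.
MFE_DSP_PATH = Path("processing-blocks") / "mfe" / "dsp.py"

if MFE_DSP_PATH.exists():
    mfe = load_dsp_module(MFE_DSP_PATH)
    print(callable(mfe.generate_features))
else:
    print(f"{MFE_DSP_PATH} not found; clone the repository first")
```

Loading by path keeps the spectral-analysis and mfe versions of dsp.py apart, which avoids accidentally calling the wrong generate_features().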

Hi @umaid,

To answer your other questions:

- You can use ngrok to make your Docker image available over the Internet. Studio will connect to your local Docker image to run your DSP block. See this tutorial for how to get that working: https://docs.edgeimpulse.com/docs/edge-impulse-studio/processing-blocks/custom-blocks

- Axes is mostly used for things like accelerometers, where you usually have 3 axes in a time-series data sample. However, the generate_features() function is a common interface, so it’s still there as a parameter. If you have multiple channels (e.g. stereo), you could probably set axes=2. However, note that features from each channel are appended into a flat list. This line performs that concatenation: processing-blocks/dsp.py at 76a5d144086154d7132fc4060cbcce292e768c0b · edgeimpulse/processing-blocks · GitHub
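To illustrate the "flat list" point, here is a toy sketch rather than the block's actual code. It assumes the samples are interleaved across axes and uses two placeholder features per axis (mean and RMS); the function name `toy_generate_features` and the axis names are made up for the example.

```python
import numpy as np


def toy_generate_features(raw_data, axes):
    """Toy stand-in for a DSP block: per-axis features appended to one flat list."""
    n_axes = len(axes)
    # Assumption: samples are interleaved, e.g. [x0, y0, z0, x1, y1, z1, ...].
    per_axis = np.asarray(raw_data, dtype=float).reshape(-1, n_axes)
    features = []
    for ax in range(n_axes):
        signal = per_axis[:, ax]
        # Two placeholder features per axis: mean and RMS.
        features.append(float(np.mean(signal)))
        features.append(float(np.sqrt(np.mean(signal ** 2))))
    return {"features": features}


# Axis names passed as comma-separated values, then split into a list.
axes = [name.strip() for name in "accX, accY, accZ".split(",")]
raw = [1.0, 10.0, 100.0, 3.0, 30.0, 300.0]  # two samples per axis
result = toy_generate_features(raw, axes)
print(len(result["features"]))  # 3 axes x 2 features = 6
```

For single-channel audio there is only one axis, so the flat list is simply that channel's features.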

Yes, I am using the MFE processing block. The result above was generated using the generate_features() method in dsp.py from the MFE block on GitHub. I looked through the GitHub files to find it. I also noticed that the number of processed-feature dimensions in the Edge Impulse Studio is 4760, whereas the number of dimensions when reproducing the result locally is 4640.
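As a quick sanity check on those two sizes, the arithmetic below assumes the output holds one row of num_filters values per frame, with num_filters=40 as passed in the local call. Under that assumption the two outputs differ by three frames. The helper name `frame_count` is only for illustration.

```python
def frame_count(n_features, num_filters):
    """Number of frames if the flat output holds num_filters values per frame."""
    frames, remainder = divmod(n_features, num_filters)
    if remainder:
        raise ValueError("feature count is not a multiple of num_filters")
    return frames


num_filters = 40  # value passed to generate_features() in the local call

studio_frames = frame_count(4760, num_filters)
local_frames = frame_count(4640, num_filters)
print(studio_frames, local_frames, studio_frames - local_frames)  # 119 116 3
```

A difference in whole frames points at the framing parameters or the implementation version rather than at the raw data itself.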

My project ID is 88907. I hope the following link to notebook pdf helps you understand my problem. My main concern is replicating the results from edge impulse studio processing block on my local computer.

Link to PDF (Easier to read):

Link to Notebook:

Hi @umaid,

Try implementation_version=3 and win_size=101 to see if it matches the Studio output. I recreated your code in Colab, and using one of the raw features seems to produce the same output of the DSP block in your Studio project: Google Colab.

Thanks @shawn_edgeimpulse,

This worked. In dsp.py the default for implementation_version was 2 so I was under the impression that it stays the same. Really appreciate your help here.

Hi @shawn_edgeimpulse, thanks for the code in Google Colab; this is what I was looking for.

By the way, is it possible to extract the raw features directly from the audio file, so we don’t have to copy and paste them from the Edge Impulse Studio?

Hi @desnug1,

I think we are both working on similar projects. You can do this using scipy.io.wavfile.read().
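A minimal sketch of that approach, run on a synthetic WAV file so it works without any recordings. The helper name `wav_to_raw_features` is made up here, and whether these sample values match the Studio's raw features exactly (scaling, window cropping) is an assumption worth checking against one known sample.

```python
import os
import tempfile

import numpy as np
from scipy.io import wavfile


def wav_to_raw_features(path):
    """Return (sampling_freq, samples) with samples as a flat float array."""
    sampling_freq, samples = wavfile.read(path)
    # Multi-channel files come back as (n_samples, n_channels); flattening
    # in row-major order interleaves the channels sample by sample.
    return sampling_freq, samples.astype(float).flatten()


# Demo on a synthetic one-second, 16 kHz, 16-bit mono tone.
demo_path = os.path.join(tempfile.mkdtemp(), "demo.wav")
t = np.arange(16000) / 16000
tone = (0.5 * 32767 * np.sin(2 * np.pi * 440 * t)).astype(np.int16)
wavfile.write(demo_path, 16000, tone)

fs, raw_features = wav_to_raw_features(demo_path)
print(fs, raw_features.shape)
```

The returned array can then be passed as the raw data argument of generate_features(), with fs as the sampling frequency.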

hi @umaid

Do you have a code example for extracting the raw features? I am not so familiar with scipy; I usually use librosa…

hi @umaid

This is the result that I got; it is exactly the same as in the Studio…

By the way, do you know how to retrieve only the array data?

Cool… here is my result.

It is exactly the same as in the Studio. As a next step, I just need to figure out how to save the features to a 99x40 array.

thanks @umaid

@desnug1, I think what you are pointing to is the shape of the spectrogram. The features are just in the dictionary under the key “features”.
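In case it helps, here is a small sketch of pulling the array out of that dictionary and reshaping it to 99x40. The dictionary below is a stand-in filled with dummy values, and the frame-by-frame ordering of the flat list is an assumption you may want to verify against the Studio's spectrogram.

```python
import numpy as np

num_filters = 40

# Stand-in for the dictionary returned by generate_features(); real values
# would come from the DSP block, not from np.arange.
result = {"features": np.arange(99 * num_filters, dtype=float).tolist()}

features = np.array(result["features"])
# Assumption: the flat list is ordered frame by frame, so each row is one frame.
spectrogram = features.reshape(-1, num_filters)
print(spectrogram.shape)  # (99, 40)
```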

hi @umaid.

What is the purpose of your project?

For me, I have to run the feature extraction locally and then save X and y to .npy files, so they are ready to feed to an ML algorithm. But I got stuck.

Yeah, I have similar goals: to reproduce it locally.
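For the "save X and y to npy" step, a rough sketch under stated assumptions: `extract_features` is a placeholder where the block's generate_features() call would go, and the label names and synthetic samples are hypothetical.

```python
import os
import tempfile

import numpy as np


def extract_features(raw_features):
    """Placeholder for the DSP step; swap in the block's generate_features()."""
    # Toy feature vector so the sketch runs without the processing-blocks repo.
    return [float(np.mean(raw_features)), float(np.std(raw_features))]


def build_dataset(samples, label_names):
    """samples: list of (raw_features, label_name) pairs."""
    X = np.array([extract_features(raw) for raw, _ in samples], dtype=np.float32)
    y = np.array([label_names.index(label) for _, label in samples], dtype=np.int64)
    return X, y


# Hypothetical labels and synthetic stand-ins for the raw audio.
label_names = ["noise", "speech"]
rng = np.random.default_rng(0)
samples = [(rng.normal(size=16000), "noise"), (rng.normal(size=16000), "speech")]

X, y = build_dataset(samples, label_names)
out_dir = tempfile.mkdtemp()
np.save(os.path.join(out_dir, "X.npy"), X)
np.save(os.path.join(out_dir, "y.npy"), y)
print(X.shape, y.tolist())
```

Every sample needs the same number of features for X to stack into a 2-D array, so audio clips should be cut to the same window length before the DSP step.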