Great question from one of our users on LinkedIn:

Q: Do you plan to have firmware for other AI+IoT boards aside from ST B-L475E-IOT01A?

A: All the models we output are standard C++11, so they can be deployed on any device (with hardware optimised paths for devices with vector extensions or an FPU). For data gathering we have a spec that you can implement, so there is no need for us to implement a new firmware. We have some instructions here: https://docs.edgeimpulse.com/docs/porting-guide

In general, we are focused on optimising for MCU class targets with a sweet spot around Cortex-M4 to Cortex-M7. The awesome thing about producing standard C++11 is that the inference library can also run on e.g. Cortex-A devices such as the Raspberry Pi, or on edge servers.


Regarding development on boards other than the ST B-L475E-IOT01A, I’m a bit concerned by the response. We are seeing a push to move ML to the edge. That means increasing interest in the STM32WB and STM32WL development boards, as well as in the STM32L0 and STM32G0 families. Examples and drivers written in C rather than C++ would be desirable. Please let us know if you plan to head in this direction as well. Thanks…

Hi @jblauser, the implementation for the B-L475E-IOT01A is just a reference: as long as you implement the ingestion SDK you can get data into Edge Impulse from any device. The ingestion and data signing implementation is already in C99, but a full solution naturally depends on the network protocol used on the target device. An example is under data acquisition format.

On the inferencing part, we’re using upstream libraries that depend on C++, but you can just mark the ei_classifier_run function as extern "C", compile the library and link it against a C application.
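To illustrate the extern "C" approach, here is a minimal sketch of such a wrapper. It does not use the real library: `demo::classify` is a hypothetical stand-in for the C++ classifier, and `demo_classify`, its parameters and its error codes are made up for this example. The real function name and signature may differ.

```cpp
#include <cstddef>
#include <vector>

namespace demo {
// Hypothetical stand-in for the C++ classifier (NOT the real library call).
// Returns two scores: {1, 0} if the features sum to more than zero, else {0, 1}.
std::vector<float> classify(const std::vector<float> &features) {
    float sum = 0.0f;
    for (float f : features) sum += f;
    const float positive = sum > 0.0f ? 1.0f : 0.0f;
    return {positive, 1.0f - positive};
}
}  // namespace demo

// C-callable wrapper: only plain C types appear in the signature, and
// extern "C" disables C++ name mangling so a C application can link against it.
// Returns the number of scores written, or a negative value on error.
extern "C" int demo_classify(const float *features, std::size_t feature_count,
                             float *scores, std::size_t score_capacity) {
    if (features == nullptr || scores == nullptr) return -1;
    try {
        const std::vector<float> input(features, features + feature_count);
        const std::vector<float> result = demo::classify(input);
        if (result.size() > score_capacity) return -2;
        for (std::size_t i = 0; i < result.size(); ++i) scores[i] = result[i];
        return static_cast<int>(result.size());
    } catch (...) {
        return -3;  // never let a C++ exception cross the C boundary
    }
}
```

On the C side, the application would declare `int demo_classify(const float *, size_t, float *, size_t);` in a header, compile the wrapper with a C++ compiler, and link the final binary together with the C++ runtime.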


@janjongboom

Is it possible to export the deployment model in *.h5, *.hdf5 or *.tflite format?

This would facilitate importing the model into STM32CubeMX AI.

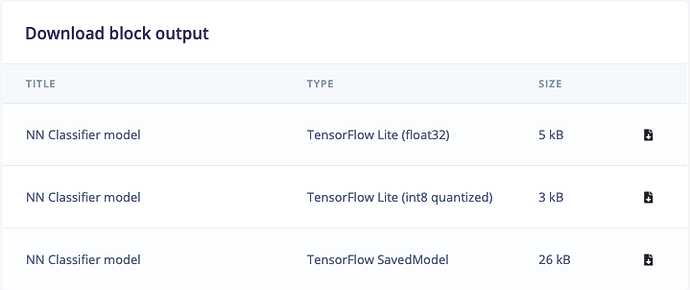

@MoKhalil if you go to the dashboard, under Download block output you can download the TensorFlow model. This is a .pb file that you can convert with e.g. the TFLite tools.

Naturally you’d still need the signal processing blocks, but you can get them from the Deployment tab. Would be very interested to see if performance goes up using Cube.AI!

@janjongboom

The .pb graph export alone is not enough to convert to TFLite; a second graph file (.pbtxt) is required.

@MoKhalil You don’t actually. You can convert the graph like this:

toco --graph_def_file trained.pb --output_file=trained.tflite --output_arrays="y_pred/Softmax" --input_arrays="x_input"

In Edge Impulse the input tensor is typically called x_input and the output layer y_pred/Softmax (as long as you use the visual editor). You can also use the API to get the exact names of the layers via getkerasmetadata.

@jblauser (and everyone else who might read this): we have updated the tutorial with an example on how to use the compiled impulse from C: Running your impulse locally.

@MoKhalil and others: you can now download the TensorFlow Lite files (both float32 and quantized int8 versions), plus the full TensorFlow SavedModel files from your dashboard.

You’ll need to re-train your neural networks for these files to show up.