Today we released an upgrade to TensorFlow 2.1 for all neural network blocks in Edge Impulse. This has little immediate impact, but it lays the groundwork for faster inference and smaller neural networks. If you train or deploy a model in the Studio, you’ll automatically get the latest version, and we have also updated all SDKs and examples to work with this release.

Plans for the near future

Google, Arm and others have done a lot of work to improve the on-device performance of neural networks, and we’ll take advantage of that in the near future. Our signal processing blocks already make heavy use of hardware-optimized code paths, and this upgrade means that we can also start using optimized neural network kernels through CMSIS-NN.

In addition, we will start supporting quantized neural networks. At the moment, all neural networks that we output use 32-bit floating-point math. Switching to 8-bit fixed-point (integer) quantization will significantly reduce model size (each weight takes 1 byte instead of 4), memory requirements and inferencing time. We’ll add full support for this in the Studio, so you can compare quantized and unquantized networks side by side and choose the right trade-off between accuracy and the processing power available on your device. Stay tuned for more information.
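
To illustrate what 8-bit quantization does, here is a minimal per-tensor affine quantization sketch in plain Python: each float is mapped to an int8 value through a scale and a zero point, and mapped back by reversing the transform. The function names are hypothetical, and TensorFlow Lite’s production scheme differs in details (for example, weights are typically quantized symmetrically per channel), so treat this as an illustration of the idea rather than the converter’s exact behavior.

```python
# Minimal sketch of per-tensor affine (asymmetric) int8 quantization.
# Hypothetical helper names; not the TensorFlow Lite converter's implementation.

def quant_params(x_min: float, x_max: float, q_min: int = -128, q_max: int = 127):
    """Compute a scale and zero point that map [x_min, x_max] onto [q_min, q_max]."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    x_min = min(x_min, 0.0)
    x_max = max(x_max, 0.0)
    scale = (x_max - x_min) / (q_max - q_min)
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any non-zero scale works
    zero_point = round(q_min - x_min / scale)
    zero_point = max(q_min, min(q_max, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, q_min=-128, q_max=127):
    """Map floats to clamped int8 values. Python's round() rounds half to even."""
    return [max(q_min, min(q_max, round(v / scale) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    """Map int8 values back to (approximate) floats."""
    return [(q - zero_point) * scale for q in qvalues]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
    scale, zp = quant_params(min(weights), max(weights))
    q = quantize(weights, scale, zp)
    print("int8 values:", q)
    print("dequantized:", dequantize(q, scale, zp))
    # Storage: 1 byte per int8 value vs 4 bytes per float32 value.
    print(f"{len(weights) * 4} bytes as float32 vs {len(weights)} bytes as int8")
```

The round trip loses at most about half a quantization step (`scale / 2`) per value inside the range, which is why accuracy usually drops only slightly while storage shrinks by 4x.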

Downloading quantized models

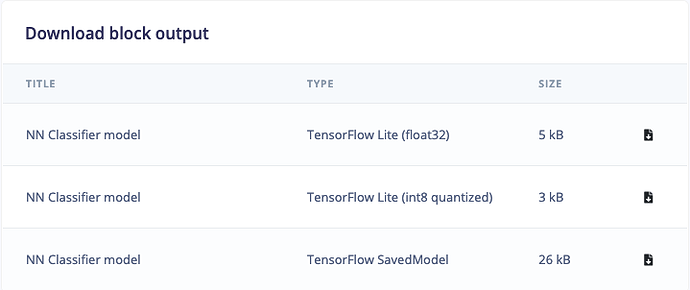

An immediate effect of the upgrade is that we now allow you to download the full TensorFlow SavedModel, and both unquantized and quantized TensorFlow Lite models from your dashboard. Just re-train your neural network and the download links will show up:

Increased binary size

The binary size of models has temporarily increased by around 30 KB. This is because the on-device interpreter loads every supported op, and the number of ops has grown since our previous release. Over the next few weeks we’ll work on automatically including only the ops that your model actually uses, and on automatically calculating the required arena size to reduce RAM usage. Because our inferencing SDK is fully open source, you can also replace the AllOpsResolver with a resolver that registers only the ops your model requires.
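
Calculating the arena size comes down to knowing how much tensor memory is live at the same time. The plain-Python sketch below computes the peak number of bytes held by tensors whose lifetimes overlap. The `TensorUse` record, the `peak_live_bytes` helper and the tensor sizes are hypothetical illustrations, not part of the Edge Impulse SDK or TensorFlow Lite for Microcontrollers. A real arena also has to hold alignment padding, interpreter bookkeeping and scratch buffers, so this number is only a lower bound.

```python
from dataclasses import dataclass


@dataclass
class TensorUse:
    """Hypothetical record of one tensor's size and lifetime in op order."""
    name: str
    size_bytes: int
    first_op: int  # index of the first op that needs the tensor (producer or first reader)
    last_op: int   # index of the last op that reads the tensor


def peak_live_bytes(tensors):
    """Lower bound on arena size: the largest total size of tensors live at any one op."""
    if not tensors:
        return 0
    last = max(t.last_op for t in tensors)
    peak = 0
    for op in range(last + 1):
        live = sum(t.size_bytes for t in tensors if t.first_op <= op <= t.last_op)
        peak = max(peak, live)
    return peak


if __name__ == "__main__":
    # Hypothetical 3-op int8 network: conv -> pool -> fully connected.
    tensors = [
        TensorUse("input", 32 * 32 * 3, 0, 0),
        TensorUse("conv_out", 8192, 0, 1),
        TensorUse("pool_out", 2048, 1, 2),
        TensorUse("logits", 10, 2, 2),
    ]
    print("peak live bytes:", peak_live_bytes(tensors))  # 11264
    print("sum of all tensors:", sum(t.size_bytes for t in tensors))  # 13322
```

Reusing memory once a tensor is no longer needed is what lets the arena be smaller than the sum of all tensor sizes.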

Removing uTensor deployments

As part of this change, we have also removed the option to deploy with uTensor, due to incompatibilities with TensorFlow 2.1. The inferencing SDK still supports uTensor, so if you want to use uTensor as your inferencing engine, you can take the TensorFlow SavedModel from the dashboard and convert the graph yourself.

If you have any questions, or see any bugs, just reply to this forum post!