**Question/Issue:** Is there any way to train a model in Edge Impulse and get a TensorFlow Lite model that works with standard (non-Edge Impulse) TensorFlow Lite, for example via the TensorFlow Lite converter? Or do I have no choice but to retrain using the TensorFlow Lite Google Colab notebook?
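On the converter question, here is a rough sketch of post-training full-integer quantization with `tf.lite.TFLiteConverter`. It assumes you can get the trained model as a TensorFlow SavedModel directory (the path is a placeholder), that you have some sample images for calibration, and that the model was trained on pixels scaled to [0, 1]; all of these are assumptions to check against your own project. Setting `inference_input_type = tf.uint8` makes the converted model accept uint8 frames directly, which is what the Raspberry Pi guide's scripts feed in.

```python
import numpy as np


def representative_dataset(images, num_samples=100):
    """Build the calibration generator TFLiteConverter expects.

    Each yield is a list holding one float32 array of shape (1, H, W, C).
    Assumption: `images` are uint8 arrays and the model was trained on [0, 1] inputs.
    """
    def gen():
        for img in images[:num_samples]:
            x = img.astype(np.float32) / 255.0
            yield [np.expand_dims(x, axis=0)]
    return gen


def convert_saved_model(saved_model_dir, images, out_path="model_uint8.tflite"):
    """Convert a SavedModel to a fully int8-quantized .tflite with a uint8 input."""
    # Imported lazily: TensorFlow is only needed on the machine doing the
    # conversion, not on the Pi that runs the result with tflite_runtime.
    import tensorflow as tf

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.representative_dataset = representative_dataset(images)
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.uint8  # accept uint8 camera frames
    tflite_model = converter.convert()
    with open(out_path, "wb") as f:
        f.write(tflite_model)
    return out_path
```

The output type is left at its default (float32), so the post-processing code in the guide does not have to dequantize results by hand.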

**Context/Use case:**

Hi, I am very new to TensorFlow Lite. I'm currently working on an object detection project on my Raspberry Pi 4, following the guide here (https://github.com/EdjeElectronics/TensorFlow-Lite-Object-Detection-on-Android-and-Raspberry-Pi/blob/master/deploy_guides/Raspberry_Pi_Guide.md), and everything is working fine.

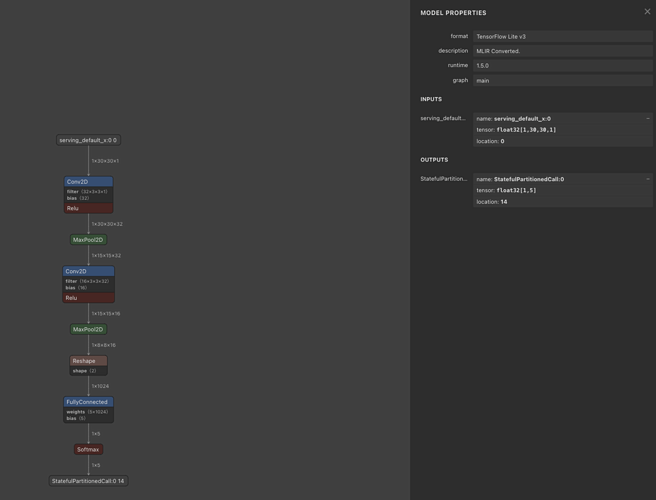

Now, I have trained a few models that I previously used on my ESP32-Cam, and I would like to use the same models on my Raspberry Pi. I downloaded the TensorFlow Lite files from the Dashboard and renamed them with a `.tflite` extension, but I ran into issues getting them to work. I also tried the files from the "OpenMV Library" option in the Deployment tab, but those don't work either. For the Dashboard files:

With the quantized int8 model, the error is: `ValueError: Cannot set tensor: Got value of type UINT8 but expected INT8 for input 0, name: serving_default_x:0`
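For the int8 error above, the message says the model's input tensor is int8 while the script passes uint8 pixels. One possible fix is to quantize the frame with the scale and zero point the interpreter reports for that input, instead of feeding raw uint8 values. This is a minimal sketch, assuming the model was trained on pixels normalized to [0, 1]; `quantize_input` is a hypothetical helper name, not part of TensorFlow Lite.

```python
import numpy as np


def quantize_input(image_uint8: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Map uint8 pixels (0-255) to the int8 values an int8-quantized input expects.

    Assumption: the model was trained on inputs in [0, 1]. If it was trained on
    raw 0-255 values, drop the division by 255.
    """
    real = image_uint8.astype(np.float32) / 255.0   # back to the training range
    q = np.round(real / scale + zero_point)        # real -> quantized domain
    return np.clip(q, -128, 127).astype(np.int8)   # saturate to the int8 range


# Typical use with the interpreter (commented out so this runs without a model):
# details = interpreter.get_input_details()[0]
# if details["dtype"] == np.int8:
#     scale, zero_point = details["quantization"]
#     input_data = np.expand_dims(quantize_input(frame_resized, scale, zero_point), 0)
#     interpreter.set_tensor(details["index"], input_data)
```

Reading `scale` and `zero_point` from `get_input_details()` rather than hard-coding them keeps the same code working if a different model uses different quantization parameters.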

With the float32 model, the error is raised at the line that reads the bounding box coordinates of detected objects: `IndexError: list index out of range`.
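A plausible cause of the float32 `IndexError` is a mismatch in the number of output tensors: SSD-style detectors like the ones in the guide expose four outputs (boxes, classes, scores, count), and the script indexes several entries of `output_details`. If the Edge Impulse model is a FOMO model, as is common for ESP32 targets, it likely has a single heatmap-style output instead, so those indices would not exist. That is an assumption worth verifying by printing what the model actually exposes; the helper names below are hypothetical.

```python
import numpy as np


def summarize_tensors(details):
    """Return (index, name, shape, dtype) for each entry of
    interpreter.get_input_details() or interpreter.get_output_details()."""
    return [
        (d["index"], d["name"], tuple(int(x) for x in d["shape"]), np.dtype(d["dtype"]).name)
        for d in details
    ]


def looks_like_ssd(output_details):
    """SSD-style post-processing needs at least 4 outputs; fewer outputs mean
    indexing output_details[1..3] will raise IndexError."""
    return len(output_details) >= 4


# Typical use on the Pi (commented out so this runs without a model file):
# from tflite_runtime.interpreter import Interpreter
# interpreter = Interpreter(model_path="model.tflite")
# interpreter.allocate_tensors()
# for row in summarize_tensors(interpreter.get_output_details()):
#     print(row)
```

If only one output shows up, the guide's box/class/score parsing cannot be used as-is and the output would need model-specific decoding.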