Question/Issue:

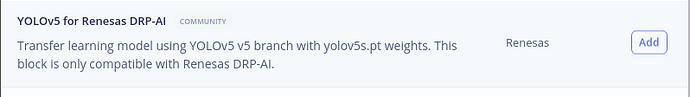

I am trying to get the deployment files for Renesas RZ/V2L.

I have a pre-trained model in ONNX format. When I upload the file and enter the required parameters in the Edge Impulse tool, I get the following error.

Could you please help me with this?

Project ID: 247019

Context/Use case:

    Scheduling job in cluster…
    Container image pulled!
    Job started
    ONNX model found! Will use instead
    Processing ONNX model…
    Cutting graph in two…
    Traceback (most recent call last):
      File "/app/drp-ai/onnx_cut_graph.py", line 21, in <module>
        onnx.utils.extract_model(path, output_path_1, input_names, middle_layers)
      File "/app/.split-venv/lib/python3.10/site-packages/onnx/utils.py", line 192, in extract_model
        extracted = e.extract_model(input_names, output_names)
      File "/app/.split-venv/lib/python3.10/site-packages/onnx/utils.py", line 150, in extract_model
        outputs = self._collect_new_outputs(output_names)
      File "/app/.split-venv/lib/python3.10/site-packages/onnx/utils.py", line 46, in _collect_new_outputs
        return self._collect_new_io_core(self.graph.output, names) # type: ignore
      File "/app/.split-venv/lib/python3.10/site-packages/onnx/utils.py", line 36, in _collect_new_io_core
        new_io_tensors.append(self.vimap[name])
    KeyError: '653'
    Application exited with code 1
    WARN: Deployment for runner-linux-aarch64-rzv2l failed, falling back to runner-linux-aarch64…

    Scheduling job in cluster…
    Container image pulled!
    Job started
    Exporting TensorFlow Lite model…
    Found operators ['Concatenation', 'Conv2D', 'DepthwiseConv2D', 'Logistic', 'MaxPool2D', 'Mul', 'Reshape', 'Pad', 'Transpose', 'StridedSlice', 'Padv2', 'ResizeNearestNeighbor']
    Exporting TensorFlow Lite model OK
    Removing clutter…
    Removing clutter OK
    Copying output…
    Copying output OK

    Scheduling job in cluster…
    Container image pulled!
    Job started
    Building binary…
    aarch64-linux-gnu-g++ -Wall -g -Wno-strict-aliasing -I. -Isource -Imodel-parameters -Itflite-model -Ithird_party/ -Iutils/ -Os -DNDEBUG -DEI_CLASSIFIER_ENABLE_DETECTION_POSTPROCESS_OP=1 -g -Wno-asm-operand-widths -DEI_CLASSIFIER_USE_FULL_TFLITE=1 -Iedge-impulse-sdk/tensorflow-lite -DDISABLEFLOAT16 -std=c++14 -c source/main.cpp -o source/main.o
    aarch64-linux-gnu-g++ -Wall -g -Wno-strict-aliasing -I. -Isource -Imodel-parameters -Itflite-model -Ithird_party/ -Iutils/ -Os -DNDEBUG -DEI_CLASSIFIER_ENABLE_DETECTION_POSTPROCESS_OP=1 -g -Wno-asm-operand-widths -DEI_CLASSIFIER_USE_FULL_TFLITE=1 -Iedge-impulse-sdk/tensorflow-lite -DDISABLEFLOAT16 -std=c++14 -c tflite-model/tflite-trained.cpp -o tflite-model/tflite-trained.o
    In file included from ./edge-impulse-sdk/classifier/ei_run_classifier.h:21,
                     from source/main.cpp:9:
    ./model-parameters/model_metadata.h:77:2: warning: #warning 'EI_CLASSFIER_OBJECT_DETECTION_COUNT' is used for the guaranteed minimum number of objects detected. To get all objects during inference use 'bounding_boxes_count' from the 'ei_impulse_result_t' struct instead. [-Wcpp]
     #warning 'EI_CLASSFIER_OBJECT_DETECTION_COUNT' is used for the guaranteed minimum number of objects detected. To get all objects during inference use 'bounding_boxes_count' from the 'ei_impulse_result_t' struct instead.
     ^~~~~~~
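One way to narrow this down locally, offered as a sketch under assumptions: judging by the traceback, `onnx.utils.extract_model` was asked to make tensor `653` (presumably one of `middle_layers`) an output of the first half of the graph. It then looked that name up in `self.vimap`, which in the onnx release shown here appears to be built from the `value_info` entries of the shape-inferred model. The `KeyError` therefore suggests that either no node in the uploaded model produces a tensor named `653`, or ONNX shape inference could not attach type/shape information to it. The helper below (`diagnose_tensor` is a hypothetical name, not part of onnx or Edge Impulse) reports which case applies by reading only standard `ModelProto` fields.

```python
from typing import Any, Dict


def diagnose_tensor(model: Any, name: str) -> Dict[str, bool]:
    """Hypothetical helper: report where tensor `name` appears in an ONNX model.

    `model` is expected to be an onnx.ModelProto, ideally already passed through
    onnx.shape_inference.infer_shapes(). Only the protobuf fields graph.node,
    graph.input, graph.output, graph.initializer and graph.value_info are read,
    so onnx itself does not need to be imported here.
    """
    graph = model.graph
    return {
        # Some node lists `name` among its outputs, i.e. the tensor exists.
        "produced_by_node": any(name in node.output for node in graph.node),
        "is_graph_input": any(vi.name == name for vi in graph.input),
        "is_graph_output": any(vi.name == name for vi in graph.output),
        "is_initializer": any(t.name == name for t in graph.initializer),
        # Assumption based on the traceback: an intermediate tensor can only be
        # turned into a new output if it has a value_info entry; a missing entry
        # is what surfaces as KeyError in _collect_new_io_core.
        "has_value_info": any(vi.name == name for vi in graph.value_info),
    }
```

Usage, assuming the uploaded file is saved locally as `model.onnx` (a hypothetical path): `diagnose_tensor(onnx.shape_inference.infer_shapes(onnx.load("model.onnx")), "653")`. If `produced_by_node` is `True` but `has_value_info` and `is_graph_output` are both `False`, shape inference did not type that tensor, which matches the `KeyError` above; if `produced_by_node` is `False`, the requested name does not exist in the uploaded graph at all.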