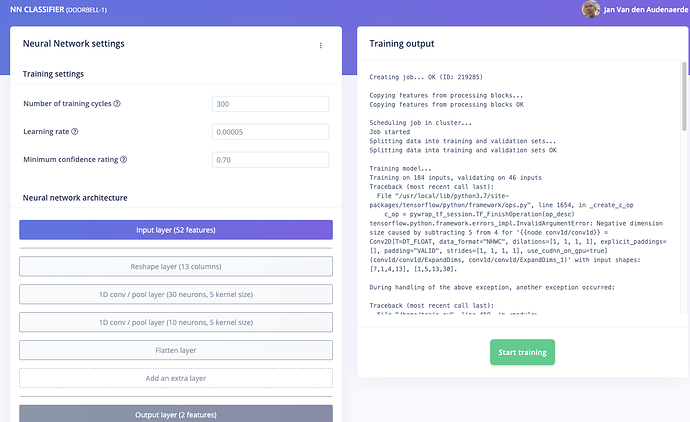

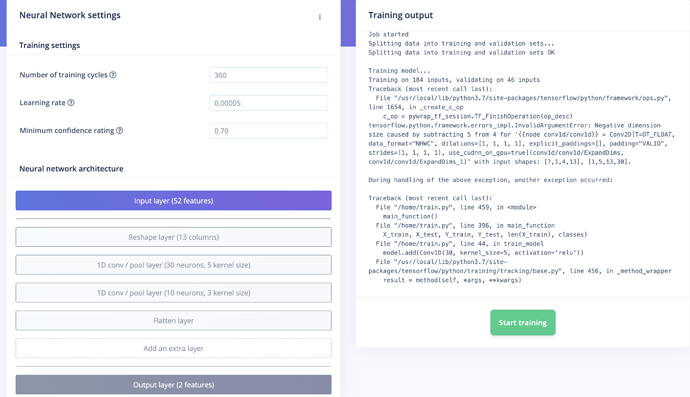

When I start training my neural network, the job fails with the following error:

Creating job... OK (ID: 219285)

Copying features from processing blocks...

Copying features from processing blocks OK

Scheduling job in cluster...

Job started

Splitting data into training and validation sets...

Splitting data into training and validation sets OK

Training model...

Training on 184 inputs, validating on 46 inputs

Traceback (most recent call last):

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/framework/ops.py", line 1654, in _create_c_op

c_op = pywrap_tf_session.TF_FinishOperation(op_desc)

tensorflow.python.framework.errors_impl.InvalidArgumentError: Negative dimension size caused by subtracting 5 from 4 for '{{node conv1d/conv1d}} = Conv2D[T=DT_FLOAT, data_format="NHWC", dilations=[1, 1, 1, 1], explicit_paddings=[], padding="VALID", strides=[1, 1, 1, 1], use_cudnn_on_gpu=true](conv1d/conv1d/ExpandDims, conv1d/conv1d/ExpandDims_1)' with input shapes: [?,1,4,13], [1,5,13,30].

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/train.py", line 459, in <module>

main_function()

File "/home/train.py", line 396, in main_function

X_train, X_test, Y_train, Y_test, len(X_train), classes)

File "/home/train.py", line 44, in train_model

model.add(Conv1D(30, kernel_size=5, activation='relu'))

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/training/tracking/base.py", line 456, in _method_wrapper

result = method(self, *args, **kwargs)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/keras/engine/sequential.py", line 213, in add

output_tensor = layer(self.outputs[0])

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/keras/engine/base_layer.py", line 922, in __call__

outputs = call_fn(cast_inputs, *args, **kwargs)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/keras/layers/convolutional.py", line 207, in call

outputs = self._convolution_op(inputs, self.kernel)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/ops/nn_ops.py", line 1106, in __call__

return self.conv_op(inp, filter)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/ops/nn_ops.py", line 638, in __call__

return self.call(inp, filter)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/ops/nn_ops.py", line 237, in __call__

name=self.name)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/ops/nn_ops.py", line 226, in _conv1d

name=name)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/util/deprecation.py", line 574, in new_func

return func(*args, **kwargs)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/util/deprecation.py", line 574, in new_func

return func(*args, **kwargs)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/ops/nn_ops.py", line 1663, in conv1d

name=name)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/ops/gen_nn_ops.py", line 969, in conv2d

data_format=data_format, dilations=dilations, name=name)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/framework/op_def_library.py", line 744, in _apply_op_helper

attrs=attr_protos, op_def=op_def)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/framework/func_graph.py", line 595, in _create_op_internal

compute_device)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/framework/ops.py", line 3327, in _create_op_internal

op_def=op_def)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/framework/ops.py", line 1817, in __init__

control_input_ops, op_def)

File "/usr/local/lib/python3.7/site-packages/tensorflow/python/framework/ops.py", line 1657, in _create_c_op

raise ValueError(str(e))

ValueError: Negative dimension size caused by subtracting 5 from 4 for '{{node conv1d/conv1d}} = Conv2D[T=DT_FLOAT, data_format="NHWC", dilations=[1, 1, 1, 1], explicit_paddings=[], padding="VALID", strides=[1, 1, 1, 1], use_cudnn_on_gpu=true](conv1d/conv1d/ExpandDims, conv1d/conv1d/ExpandDims_1)' with input shapes: [?,1,4,13], [1,5,13,30].

Application exited with code 1 (Error)

Job failed (see above)
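If I read the message correctly, the first `Conv1D` layer (`kernel_size=5`, `padding="VALID"`) receives an input with only 4 time steps (input shape `[?,1,4,13]`), so the kernel is longer than the input. Below is a minimal sketch of that shape arithmetic in plain Python. The helper names are hypothetical and not part of TensorFlow or of `train.py`; they only mirror the standard output-length formulas for "valid" and "same" padding.

```python
def conv1d_valid_output_length(input_length, kernel_size, stride=1):
    """Output length of a 1D convolution with padding='valid'.

    Hypothetical helper for illustration only. It raises ValueError when
    the kernel is longer than the input, which is the situation the
    TensorFlow error describes ("subtracting 5 from 4").
    """
    if kernel_size > input_length:
        raise ValueError(
            f"kernel_size={kernel_size} is larger than "
            f"input_length={input_length}"
        )
    return (input_length - kernel_size) // stride + 1


def conv1d_same_output_length(input_length, stride=1):
    """Output length of a 1D convolution with padding='same'.

    Equal to ceil(input_length / stride); it does not depend on the
    kernel size because the input is padded.
    """
    return -(-input_length // stride)


# Values taken from the error message: 4 time steps, kernel size 5.
try:
    conv1d_valid_output_length(4, 5)
except ValueError as err:
    print("valid padding fails:", err)

# A kernel no longer than the input works with 'valid' padding.
print(conv1d_valid_output_length(4, 3))  # 2

# With 'same' padding the length is preserved at stride 1.
print(conv1d_same_output_length(4))  # 4
```

So my guess is that either the kernel has to be at most 4 wide, the layer needs `padding='same'`, or the MFCC block has to produce more than 4 time steps per window. I have not confirmed which of these is the intended fix.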

Here is a screenshot of the same error:

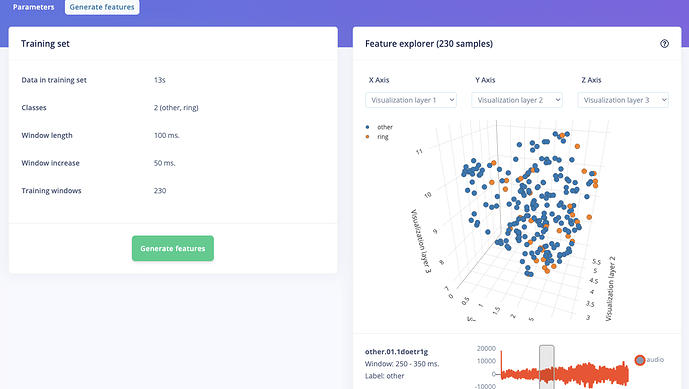

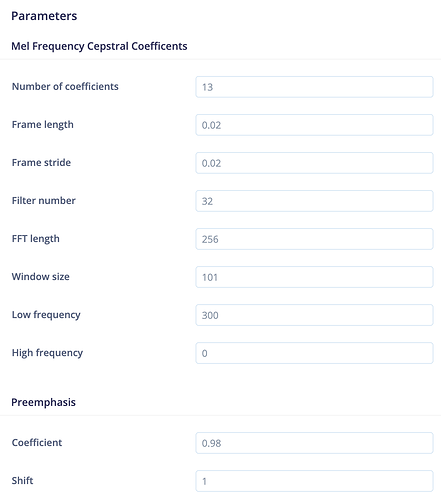

Here is a screenshot of the generated features (MFCC) that are used as input for the neural network: