Hi,

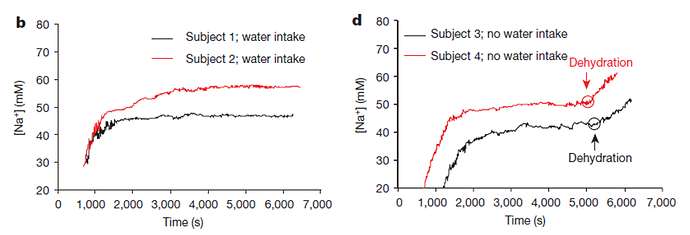

We are trying to classify time-series sensor data. The hardware is based on a Nordic BLE chip and collects data from multiple sensors for hours at a time. Let’s say the hardware collects data for over 10 minutes, and we want to pick up the differences in that data. One example is shown in the attached plots. In the top plot, we’re collecting Na+ concentration data while the runner drinks water and so doesn’t get dehydrated. In the second plot, without water, the runner gets dehydrated. After training a model, we want to classify dehydration based on the sensor data.

Do you have any pointers on how we can do this using Edge Impulse?

Thanks.

Hey @yasser.khan, would you even need ML for this? If you know that there’s no chance of dehydration in the first 2,000 seconds (not sure if that’s valid), then you can take the derivative of the Na+ level and apply a simple threshold. Maybe smooth it over a 5-minute window first to make it more robust.
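To make the threshold idea concrete, here is a minimal sketch in Python with NumPy. It assumes a 1 Hz sample rate, that a rising Na+ trend is the signal of interest, and an arbitrary slope threshold; none of those values come from the data in this thread, and the function name `dehydration_flags` is made up for illustration.

```python
import numpy as np

def dehydration_flags(na, fs_hz=1.0, window_s=300, skip_s=2000, slope_threshold=0.01):
    """Flag samples where the smoothed Na+ level rises faster than a threshold.

    fs_hz, slope_threshold and the "rising means dehydrated" direction are
    assumptions for illustration; tune them against real recordings.
    """
    na = np.asarray(na, dtype=float)
    win = max(1, int(window_s * fs_hz))
    # Moving average over a 5-minute window (only fully covered positions).
    smoothed = np.convolve(na, np.ones(win) / win, mode="valid")
    # Derivative of the smoothed signal, in units per second.
    slope = np.gradient(smoothed) * fs_hz
    # Timestamp each smoothed sample at the end of its window.
    t = (np.arange(len(smoothed)) + win - 1) / fs_hz
    # Ignore the initial period where dehydration is assumed impossible.
    flags = (slope > slope_threshold) & (t >= skip_s)
    return t, flags
```

Calling `t, flags = dehydration_flags(na_samples)` gives one boolean per smoothed sample, so `flags.any()` tells you whether the recording ever crossed the threshold after the first 2,000 seconds.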

Thanks for the reply @janjongboom. The data won’t always look this simple, especially once we introduce the variability of different subjects and sensors. Also, the plan is to use additional sensors such as heart rate at the same time. In that case, we’ll have data from 2 to 5 sensors, all of which will be time series. Simple thresholds will be problematic in that case.

@yasser.khan in that case I’d engineer some features over, e.g., a 1-minute, a 5-minute and a 10-minute window. From each window you can grab features like:

- Current timestamp

- Average

- Average offset by the mean (of the whole file)

- Derivative of the raw data

That should give you about 60 features (3 windows * 4 features * 5 sensors) that can feed into a neural network.
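As a rough sketch of that feature layout (not a definitive implementation), the function below takes one 10-minute chunk and returns the 60 values. It assumes a 1 Hz sample rate, 5 sensors stored as columns, and that "derivative" is summarized as the mean sample-to-sample slope over each window; the name `window_features` and its parameters are hypothetical.

```python
import numpy as np

WINDOWS_S = (60, 300, 600)  # 1, 5 and 10 minute windows

def window_features(chunk, t_end_s, file_mean, fs_hz=1.0):
    """Build a flat feature vector from the trailing windows of one chunk.

    chunk:     array of shape (n_samples, n_sensors), ending at time t_end_s
    file_mean: per-sensor mean over the whole recording, shape (n_sensors,)
    fs_hz is an assumed sample rate.
    """
    chunk = np.asarray(chunk, dtype=float)
    file_mean = np.asarray(file_mean, dtype=float)
    n_sensors = chunk.shape[1]
    feats = []
    for w in WINDOWS_S:
        seg = chunk[-int(w * fs_hz):]            # last w seconds of the chunk
        avg = seg.mean(axis=0)                   # average per sensor
        slope = np.diff(seg, axis=0).mean(axis=0) * fs_hz  # mean derivative
        ts = np.full(n_sensors, float(t_end_s))  # current timestamp
        # Per sensor: [timestamp, average, average minus file mean, derivative]
        feats.append(np.stack([ts, avg, avg - file_mean, slope], axis=1).ravel())
    return np.concatenate(feats)
```

With a `(600, 5)` chunk this returns a vector of length 60. The timestamp is repeated for every window and sensor to match the count above; you could keep a single copy instead.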

For this to work properly in Edge Impulse you’d probably want to do a little bit of preprocessing on the data: split it up into chunks that are as long as the longest window (say 10 minutes), with some overlap between consecutive chunks (so you have 0…10 minutes, 2…12 minutes, 4…14 minutes, etc.). Label each chunk ‘dehydrated’ or ‘not dehydrated’. Then create a custom processing block to generate these features.
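The chunking step could look something like this. It is only a sketch, assuming a 1 Hz sample rate and the 10-minute chunk / 2-minute step from the example above; `split_into_chunks` is a made-up helper name, and labeling each chunk is still up to you.

```python
import numpy as np

def split_into_chunks(data, fs_hz=1.0, chunk_s=600, stride_s=120):
    """Split a long recording into overlapping fixed-length chunks.

    data: array with samples along the first axis.
    Returns a list of (start_time_s, chunk) tuples; a trailing partial
    chunk is dropped. fs_hz is an assumed sample rate.
    """
    data = np.asarray(data)
    n = int(chunk_s * fs_hz)      # samples per chunk
    step = int(stride_s * fs_hz)  # samples between chunk starts
    return [
        (start / fs_hz, data[start:start + n])
        for start in range(0, len(data) - n + 1, step)
    ]
```

A 20-minute recording at 1 Hz gives six chunks this way, starting at 0, 2, 4, 6, 8 and 10 minutes.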

As you can see from the description, we’re not perfectly set up for problems where historic information is important (e.g. removing the running mean from a very long sample), so it requires a bit of manual work.