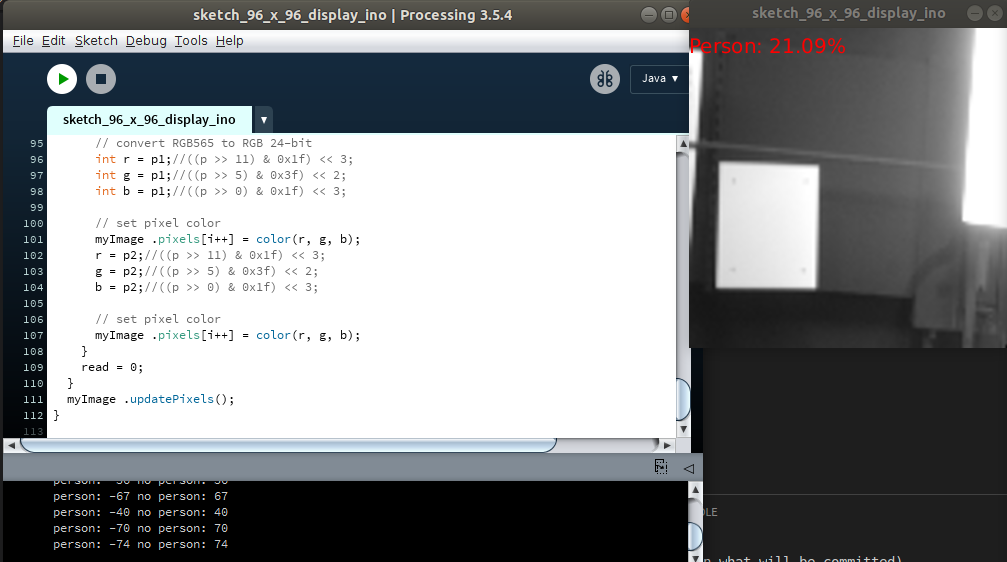

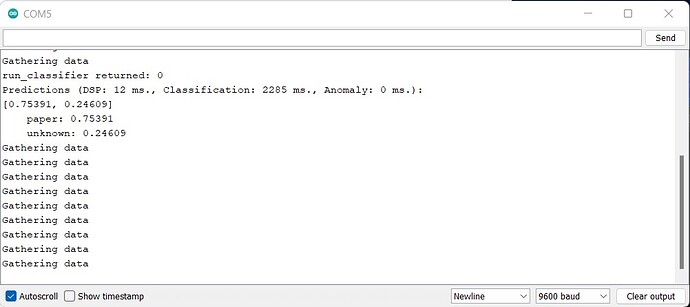

Hello, can anyone help me on this. I am still trying to connect the ov2640 arducam to the Pico to get live data. I can’t seem to connect the port, like the port won’t show on the arduino serial monitor. It works just fine without the arducam library but when i added the library it does this. Here is the screenshot:

I based the ArduCAM library and its setup for the Pico on this GitHub repository: RPI-Pico-Cam/tflmicro at master · ArduCAM/RPI-Pico-Cam · GitHub

Here is my main.cpp in source/main.cpp:

```cpp
#include "ei_run_classifier.h"
// #include "hardware/gpio.h"
// #include "hardware/uart.h"
// #include "pico/stdio_usb.h"
#include "pico/stdlib.h"

#ifdef __cplusplus
extern "C"
{
#include "arducam.h"
}
#endif

#include <stdio.h>

const uint LED_PIN = 25;

static const float features[] = {
    // copy raw features here (for example from the 'Live classification' page)
};

#ifndef DO_NOT_OUTPUT_TO_UART
// RX interrupt handler
void on_uart_rx() {
    uint8_t cameraCommand = 0;
    while (uart_is_readable(UART_ID)) {
        cameraCommand = uart_getc(UART_ID);
        // Can we send it back?
        if (uart_is_writable(UART_ID)) {
            uart_putc(UART_ID, cameraCommand);
        }
    }
}

void setup_uart() {
    // Set up our UART with the required speed.
    uint baud = uart_init(UART_ID, BAUD_RATE);
    // Set the TX and RX pins by using the function select on the GPIO
    // Set datasheet for more information on function select
    gpio_set_function(UART_TX_PIN, GPIO_FUNC_UART);
    gpio_set_function(UART_RX_PIN, GPIO_FUNC_UART);
    // Set our data format
    uart_set_format(UART_ID, DATA_BITS, STOP_BITS, PARITY);
    // Turn off FIFO's - we want to do this character by character
    uart_set_fifo_enabled(UART_ID, false);
    // Set up a RX interrupt
    // We need to set up the handler first
    // Select correct interrupt for the UART we are using
    int UART_IRQ = UART_ID == uart0 ? UART0_IRQ : UART1_IRQ;
    // And set up and enable the interrupt handlers
    irq_set_exclusive_handler(UART_IRQ, on_uart_rx);
    irq_set_enabled(UART_IRQ, true);
    // Now enable the UART to send interrupts - RX only
    uart_set_irq_enables(UART_ID, true, false);
}
#else
void setup_uart() {}
#endif

int raw_feature_get_data(size_t offset, size_t length, float *out_ptr)
{
    ei_printf("Gathering data \n");
    printf("All Good \n");
    memcpy(out_ptr, features + offset, length * sizeof(float));
    return 0;
}

int main()
{
    // stdio_usb_init();
    setup_uart();
    // stdio_init_all();
    gpio_init(LED_PIN);
    gpio_set_dir(LED_PIN, GPIO_OUT);
    // arducam.systemInit();
    printf("pass");
    // if (arducam.busDetect())
    // {
    //     return 1;
    // }
    // if (arducam.cameraProbe())
    // {
    //     return 1;
    // }
    // arducam.cameraInit(YUV);

    ei_impulse_result_t result = {nullptr};

    while (true)
    {
        ei_printf("Edge Impulse standalone inferencing (Raspberry Pi Pico)\n");

        if (sizeof(features) / sizeof(float) != EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE)
        {
            ei_printf("The size of your 'features' array is not correct. Expected %d items, but had %u\n",
                      EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE, sizeof(features) / sizeof(float));
            return 1;
        }

        while (1)
        {
            // blink LED
            gpio_put(LED_PIN, !gpio_get(LED_PIN));

            // the features are stored into flash, and we don't want to load everything into RAM
            signal_t features_signal;
            features_signal.total_length = sizeof(features) / sizeof(features[0]);
            features_signal.get_data = &raw_feature_get_data;

            // invoke the impulse
            EI_IMPULSE_ERROR res = run_classifier(&features_signal, &result, false);
            ei_printf("run_classifier returned: %d\n", res);

            if (res != 0)
                return 1;

            ei_printf("Predictions (DSP: %d ms., Classification: %d ms., Anomaly: %d ms.): \n",
                      result.timing.dsp, result.timing.classification, result.timing.anomaly);

            // print the predictions
            ei_printf("[");
            for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++)
            {
                ei_printf("%.5f", result.classification[ix].value);
#if EI_CLASSIFIER_HAS_ANOMALY == 1
                ei_printf(", ");
#else
                if (ix != EI_CLASSIFIER_LABEL_COUNT - 1)
                {
                    ei_printf(", ");
                }
#endif
            }
#if EI_CLASSIFIER_HAS_ANOMALY == 1
            printf("%.3f", result.anomaly);
#endif
            printf("]\n");

            // human-readable predictions
            for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++) {
                ei_printf(" %s: %.5f\n", result.classification[ix].label, result.classification[ix].value);
            }

            ei_sleep(2000);
        }
    }
}
```
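For anyone unfamiliar with the `get_data` callback that `main.cpp` hands to `run_classifier`, the pattern can be sketched on a desktop compiler roughly as follows. This is only an illustration under assumptions: `FeatureSignal`, `demo_features`, `demo_get_data`, and `read_window` are hypothetical names made up for this sketch, not the Edge Impulse types or functions, and the bounds checks are an addition that `raw_feature_get_data` above does not have.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <functional>

// Hypothetical stand-in for the SDK's signal type: only the two fields
// that main.cpp sets (total_length and get_data) are modeled here.
struct FeatureSignal {
    std::size_t total_length;
    std::function<int(std::size_t, std::size_t, float *)> get_data;
};

// Example data; in the real project this would be the raw features array.
static const float demo_features[] = {0.1f, 0.2f, 0.3f, 0.4f};

// Same shape as raw_feature_get_data: copy `length` floats starting at `offset`.
// Returns 0 on success, -1 if the requested window falls outside the array.
int demo_get_data(std::size_t offset, std::size_t length, float *out_ptr) {
    assert(out_ptr != nullptr);
    const std::size_t total = sizeof(demo_features) / sizeof(demo_features[0]);
    if (offset > total || length > total - offset) {
        return -1;
    }
    std::memcpy(out_ptr, demo_features + offset, length * sizeof(float));
    return 0;
}

// Hypothetical consumer: pulls one window of samples through the callback,
// so the whole array never has to be copied in one go.
int read_window(const FeatureSignal &signal, std::size_t offset,
                std::size_t length, float *out) {
    if (offset > signal.total_length || length > signal.total_length - offset) {
        return -1;
    }
    return signal.get_data(offset, length, out);
}
```

A caller would build `FeatureSignal s{4, &demo_get_data};` and then request slices with `read_window(s, offset, length, buffer)`. The point of the callback is that the consumer asks for small windows on demand instead of receiving a full copy of the data.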

Here is my CMakeLists.txt in root:

```cmake
cmake_minimum_required(VERSION 3.13.1)

set(MODEL_FOLDER .)
set(EI_SDK_FOLDER edge-impulse-sdk)

include(pico_sdk_import.cmake)

project(pico_paper_detection C CXX ASM)

set(CMAKE_C_STANDARD 11)
set(CMAKE_CXX_STANDARD 11)

pico_sdk_init()

add_subdirectory("Arducam")

add_executable(pico_paper_detection
    source/main.cpp
)

include(${MODEL_FOLDER}/edge-impulse-sdk/cmake/utils.cmake)

target_link_libraries(pico_paper_detection
    PUBLIC Arducam
    pico_stdlib
)

target_include_directories(pico_paper_detection PUBLIC
    "${PROJECT_BINARY_DIR}"
    "${PROJECT_SOURCE_DIR}/Arducam"
)

# enable usb output, disable uart output
pico_enable_stdio_usb(pico_paper_detection 1)
pico_enable_stdio_uart(pico_paper_detection 0)

target_include_directories(pico_paper_detection PRIVATE
    ${MODEL_FOLDER}
    ${MODEL_FOLDER}/classifer
    ${MODEL_FOLDER}/tflite-model
    ${MODEL_FOLDER}/model-parameters
)

target_include_directories(pico_paper_detection PRIVATE
    ${EI_SDK_FOLDER}
    ${EI_SDK_FOLDER}/third_party/ruy
    ${EI_SDK_FOLDER}/third_party/gemmlowp
    ${EI_SDK_FOLDER}/third_party/flatbuffers/include
    ${EI_SDK_FOLDER}/third_party
    ${EI_SDK_FOLDER}/tensorflow
    ${EI_SDK_FOLDER}/dsp
    ${EI_SDK_FOLDER}/classifier
    ${EI_SDK_FOLDER}/anomaly
    ${EI_SDK_FOLDER}/CMSIS/NN/Include
    ${EI_SDK_FOLDER}/CMSIS/DSP/PrivateInclude
    ${EI_SDK_FOLDER}/CMSIS/DSP/Include
    ${EI_SDK_FOLDER}/CMSIS/Core/Include
)

include_directories(${INCLUDES})

# find model source files
RECURSIVE_FIND_FILE(MODEL_FILES "${MODEL_FOLDER}/tflite-model" "*.cpp")
RECURSIVE_FIND_FILE(SOURCE_FILES "${EI_SDK_FOLDER}" "*.cpp")
RECURSIVE_FIND_FILE(CC_FILES "${EI_SDK_FOLDER}" "*.cc")
RECURSIVE_FIND_FILE(S_FILES "${EI_SDK_FOLDER}" "*.s")
RECURSIVE_FIND_FILE(C_FILES "${EI_SDK_FOLDER}" "*.c")

list(APPEND SOURCE_FILES ${S_FILES})
list(APPEND SOURCE_FILES ${C_FILES})
list(APPEND SOURCE_FILES ${CC_FILES})
list(APPEND SOURCE_FILES ${MODEL_FILES})

# add all sources to the project
target_sources(pico_paper_detection PRIVATE ${SOURCE_FILES})

pico_add_extra_outputs(pico_paper_detection)
```

Here is the CMakeLists.txt in the Arducam directory that I modified:

```cmake
# aux_source_directory(. DIR_LIB_SRCS)
# add_library(arducam ${DIR_LIB_SRCS})
# target_include_directories(arducam
#     PUBLIC
#     ${CMAKE_CURRENT_LIST_DIR}/.
# )
# target_link_libraries(arducam pico_stdlib hardware_i2c hardware_spi)

# enable usb output, disable uart output

# my modifications
add_library(Arducam arducam.c arducam.h ov2640.h)
target_link_libraries(Arducam pico_stdlib hardware_i2c hardware_spi)
target_include_directories(Arducam PUBLIC "${CMAKE_CURRENT_SOURCE_DIR}")
```