Hello all,

I’m new to EI and have a question about a model that I’ve converted to TFLite to run on an ESP32. The model is not mine, so unfortunately I can’t share it. What I can share is that it was converted to TFLite using:

    import tensorflow as tf

    # concrete_func_basic is the concrete function exported from the model (not shown here).
    converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_func_basic])
    converter.target_spec.supported_ops = [
        tf.lite.OpsSet.TFLITE_BUILTINS,  # enable TensorFlow Lite ops.
        tf.lite.OpsSet.SELECT_TF_OPS,  # enable TensorFlow ops.
    ]

Note: I had to enable TensorFlow ops for it to convert, as it's quite a complex model. This TFLite model works OK on a desktop machine, but when running on the ESP32 it generates the error:

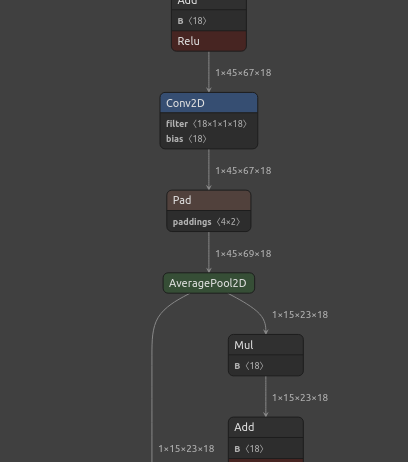

    tensorflow/lite/micro/kernels/pad.cpp:93 output_dim != expected_dim (69 != 67)
    Node PAD (number 11f) failed to prepare with status 1
    AllocateTensors() failed
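To make the message easier to read: as far as I understand it, the check in `pad.cpp` compares each output dimension stored in the model with the input dimension plus the padding added before and after on that axis. Below is a minimal Python sketch of that arithmetic. The function names and the example shapes are hypothetical (I can't share the real ones), chosen only so that one axis reproduces the `69 != 67` mismatch from the log.

```python
def expected_pad_output_shape(input_shape, paddings):
    """Shape a PAD op should produce: input dim + pad_before + pad_after per axis."""
    if len(input_shape) != len(paddings):
        raise ValueError("paddings must have one (before, after) pair per input axis")
    return [dim + before + after for dim, (before, after) in zip(input_shape, paddings)]


def find_pad_mismatches(input_shape, paddings, output_shape):
    """Return (axis, output_dim, expected_dim) for every axis that disagrees."""
    expected = expected_pad_output_shape(input_shape, paddings)
    return [
        (axis, out, exp)
        for axis, (out, exp) in enumerate(zip(output_shape, expected))
        if out != exp
    ]


# Hypothetical shapes, NOT taken from the real model.
input_shape = [1, 65, 65, 3]
paddings = [(0, 0), (1, 1), (1, 1), (0, 0)]   # pad height and width by 1 on each side
recorded_output_shape = [1, 69, 69, 3]        # what the model file claims the output is

mismatches = find_pad_mismatches(input_shape, paddings, recorded_output_shape)
for axis, out, exp in mismatches:
    print(f"axis {axis}: output_dim != expected_dim ({out} != {exp})")
```

If this reading is right, the output shape recorded for the Pad tensor in the converted model does not agree with the input shape and paddings that the micro runtime sees, so comparing those three values in the model viewer may be a useful first step.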

This error is generated at this line of code and occurs at the Pad operation seen in the image below. It's a little surprising, as it's a simple Pad operation. The only possible explanation I can see is the Pad operation version:

DenseNet/tf.compat.v1.pad/Pad/paddings;StatefulPartitionedCall/DenseNet/tf.compat.v1.pad/Pad/paddings

From here it seems to be deprecated. I’m not sure whether that is just the website I’m using to view the model or something else.

If anyone has any pointers, they would be greatly appreciated!

Regards,

Owen