Hello everyone,

I’m trying to deploy my custom MNIST model to the Jetson Nano using Edge Impulse. So far, I’ve successfully followed the Linux C++ SDK tutorial: I downloaded the C++ library and built the application with `APP_CUSTOM=1 make -j`, which works well.

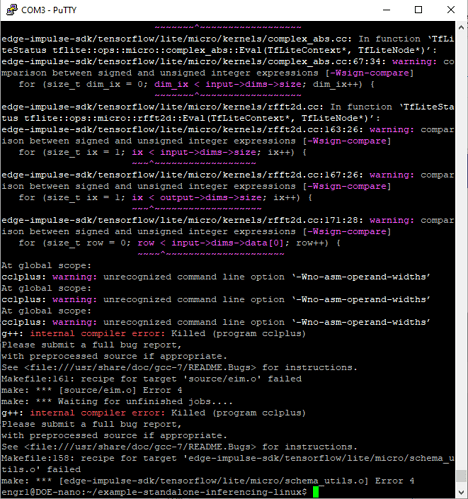

However, I now want my model to run on the Jetson Nano’s GPU. So I downloaded the TensorRT library, replaced the C++ library in the /example-standalone-inferencing-linux directory with it, and downloaded the shared libraries with `sh ./tflite/linux-jetson-nano/download.sh`. But when I run `APP_CUSTOM=1 TARGET_JETSON_NANO=1 make -j` to build the application, I get the following errors:

My Jetson Nano is running Linux version 4.6.1-b110 and gcc version 7.5.0. I’ve tried updating my C++ compiler, but this is the latest version I can download. Is it because I’m using an old C++ compiler?

Hello!

Looks like an OOMKilled (out-of-memory) issue. Try running with `-j2` instead? That limits make to two parallel jobs.

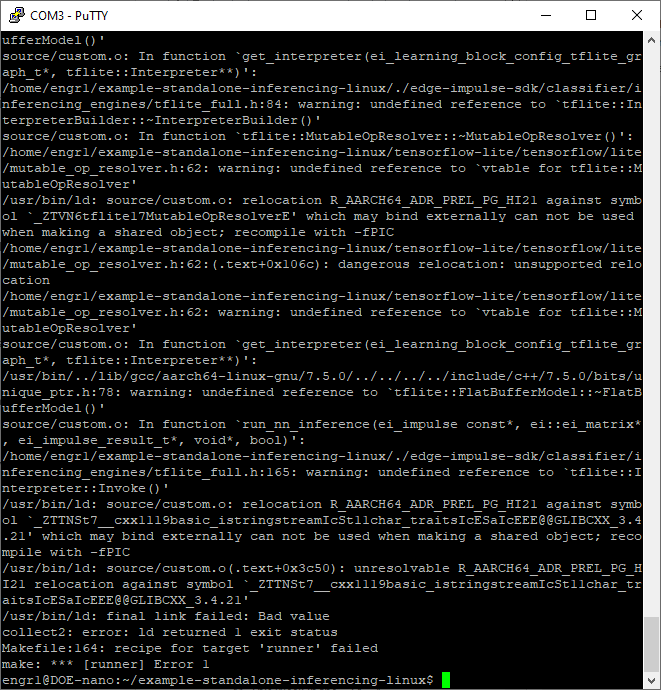

Thank you for responding to my question! I have followed what you said and now I get a different error.

Maybe it’s progress?

This is a familiar error. Are you trying to build our default example, or are you modifying it? I remember someone building an application with DeepStream who got a similar error.

I’m actually not using any default example from Edge Impulse. I’m using an MNIST model that I built by following one of the TensorFlow tutorial pages, and I optimized it with TensorRT on the Jetson Nano itself. Maybe I’m optimizing it twice, and that’s what’s causing the issues?

Mmm, but I see you are working in the `example-standalone-inferencing-linux` folder?

By “example” I mean deployment code example.

I’m following the TensorRT deployment code example from the example-standalone-inferencing-linux GitHub repository. It’s under the “AARCH64 with AI Acceleration” section of the README. I don’t know if that answers your question.

Okay, so you are using example-standalone-inferencing-linux.

Can you try `APP_CUSTOM=1 TARGET_JETSON_NANO=1 make clean` before running `APP_CUSTOM=1 TARGET_JETSON_NANO=1 make -j2` again?

In your second screenshot, it looks like the linker cannot find full TFLite functions, but these should not be compiled into the TensorRT build, so perhaps a clean build will help.
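For reference, the clean rebuild suggested above can be wrapped in a small POSIX shell function. This is a minimal sketch: the function name `clean_rebuild_jetson` and its job-count argument are hypothetical, while the `APP_CUSTOM=1 TARGET_JETSON_NANO=1` variables and the `make clean` / `make -j2` invocations are the ones used in this thread. It assumes you run it from the root of `example-standalone-inferencing-linux`.

```shell
#!/bin/sh
# Clean rebuild of example-standalone-inferencing-linux for the Jetson Nano
# TensorRT target. Run from the repository root.
# clean_rebuild_jetson is a hypothetical helper, not part of the repository.
clean_rebuild_jetson() {
    njobs="${1:-2}"   # parallel make jobs; a small number keeps peak RAM low

    # Remove outputs left over from earlier builds (e.g. a full-TFLite build)
    # so the linker only sees what the TensorRT configuration compiles.
    APP_CUSTOM=1 TARGET_JETSON_NANO=1 make clean || return 1

    # Rebuild with a limited number of parallel jobs to avoid OOM kills.
    APP_CUSTOM=1 TARGET_JETSON_NANO=1 make -j"$njobs"
}
```

Usage: `clean_rebuild_jetson 2` (or just `clean_rebuild_jetson`, which defaults to two jobs). A low job count trades build speed for lower peak memory, which matters on the Nano’s limited RAM.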

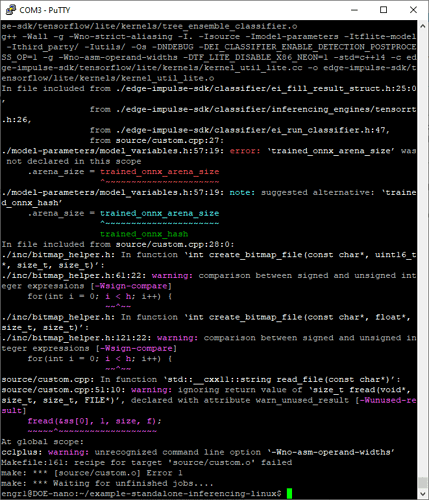

For sure. I ran the commands you mentioned and now these are the errors I’m getting:

OK. Re-download the deployment from Edge Impulse and run a clean build again. It should work this time: your last error was caused by a bug that has been fixed, and the fix is already in production (I just checked).

Got it to work now, thank you! It does give a warning, though: “make: warning: Clock skew detected. Your build may be incomplete”.
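The clock-skew warning generally means make found files whose modification times are later than the current system clock, which can happen when the Nano’s clock is not synced or when files were copied or extracted from a machine with a different clock. Correcting the system time comes first. After that, a sketch like the one below can reset any future timestamps so make stops warning. The helper names are hypothetical; only POSIX `find -newer`, `find -exec ... {} +`, `touch`, and `mktemp` are used.

```shell
#!/bin/sh
# Hypothetical helpers for make's "Clock skew detected" warning.

# Print regular files under DIR (default: .) whose mtime is later than "now".
find_future_files() {
    dir="${1:-.}"
    stamp=$(mktemp) || return 1   # a fresh file stamped with the current time
    find "$dir" -type f -newer "$stamp"
    status=$?
    rm -f "$stamp"
    return "$status"
}

# Reset the mtime of those files to the current time.
touch_future_files() {
    dir="${1:-.}"
    stamp=$(mktemp) || return 1
    find "$dir" -type f -newer "$stamp" -exec touch {} +
    status=$?
    rm -f "$stamp"
    return "$status"
}
```

Usage: run `find_future_files .` in the repository to see which files are affected, then `touch_future_files .`, and finish with a clean rebuild so nothing that make skipped is left stale.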