@SunBeenMoon This is what we use for performance tests:

custom.cpp

/* Edge Impulse Linux SDK

* Copyright (c) 2021 EdgeImpulse Inc.

*

* Permission is hereby granted, free of charge, to any person obtaining a copy

* of this software and associated documentation files (the "Software"), to deal

* in the Software without restriction, including without limitation the rights

* to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the Software is

* furnished to do so, subject to the following conditions:

*

* The above copyright notice and this permission notice shall be included in

* all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

* IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

* FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

* AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

* LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

* OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

* SOFTWARE.

*/

#include <stdio.h>

#include <cstring>

#include <iostream>

#include <sstream>

#include "edge-impulse-sdk/classifier/ei_run_classifier.h"

std::string trim(const std::string& str) {

size_t first = str.find_first_not_of(' ');

if (std::string::npos == first)

{

return str;

}

size_t last = str.find_last_not_of(' ');

return str.substr(first, (last - first + 1));

}

std::string read_file(const char *filename) {

FILE *f = (FILE*)fopen(filename, "r");

if (!f) {

printf("Cannot open file %s\n", filename);

return "";

}

fseek(f, 0, SEEK_END);

size_t size = ftell(f);

std::string ss;

ss.resize(size);

rewind(f);

fread(&ss[0], 1, size, f);

fclose(f);

return ss;

}

int main(int argc, char **argv) {

if (argc != 2) {

printf("Requires one parameter (a comma-separated list of raw features, or a file pointing at raw features)\n");

return 1;

}

std::string input = argv[1];

if (!strchr(argv[1], ' ') && strchr(argv[1], '.')) { // looks like a filename

input = read_file(argv[1]);

}

std::istringstream ss(input);

std::string token;

std::vector<float> raw_features;

while (std::getline(ss, token, ',')) {

raw_features.push_back(std::stof(trim(token)));

}

if (raw_features.size() != EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE) {

printf("The size of your 'features' array is not correct. Expected %d items, but had %lu\n",

EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE, raw_features.size());

return 1;

}

while (1) {

ei_impulse_result_t result;

signal_t signal;

numpy::signal_from_buffer(&raw_features[0], raw_features.size(), &signal);

EI_IMPULSE_ERROR res = run_classifier(&signal, &result, false);

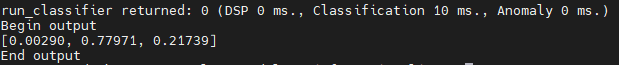

printf("run_classifier returned: %d (DSP %d ms., Classification %d ms., Anomaly %d ms.)\n", res,

result.timing.dsp, result.timing.classification, result.timing.anomaly);

printf("Begin output\n");

#if EI_CLASSIFIER_OBJECT_DETECTION == 1

for (size_t ix = 0; ix < EI_CLASSIFIER_OBJECT_DETECTION_COUNT; ix++) {

auto bb = result.bounding_boxes[ix];

if (bb.value == 0) {

continue;

}

printf("%s (%f) [ x: %u, y: %u, width: %u, height: %u ]\n", bb.label, bb.value, bb.x, bb.y, bb.width, bb.height);

}

#else

// print the predictions

printf("[");

for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++) {

printf("%.5f", result.classification[ix].value);

#if EI_CLASSIFIER_HAS_ANOMALY == 1

printf(", ");

#else

if (ix != EI_CLASSIFIER_LABEL_COUNT - 1) {

printf(", ");

}

#endif

}

#if EI_CLASSIFIER_HAS_ANOMALY == 1

printf("%.3f", result.anomaly);

#endif

printf("]\n");

#endif

printf("End output\n");

}

}