I think I have narrowed down the source of the instability. It depends on whether the image comes from the Video Preview or from a snapshot (takePicture()) before it is run through the classifier.

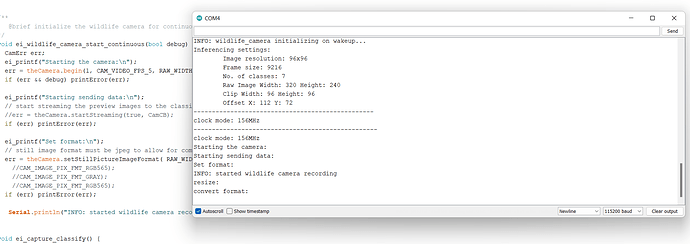

I am configuring both the Video Preview and the snapshot (still picture) with the same resolution and pixel format:

err = theCamera.begin(1, CAM_VIDEO_FPS_5, RAW_WIDTH, RAW_HEIGHT, CAM_IMAGE_PIX_FMT_YUV422);

err = theCamera.setStillPictureImageFormat(RAW_WIDTH, RAW_HEIGHT, CAM_IMAGE_PIX_FMT_YUV422);

With a static scene, here are the classification results when the Video Preview source is being used:

INFO: wildlife_camera initializing on wakeup...

Inferencing settings:

Image resolution: 160x160

Frame size: 25600

No. of classes: 2

Raw Image Width: 320 Height: 240

Clip Width: 160 Height: 160

Offset X: 80 Y: 40

Starting the camera:

Starting sending data:

Set format:

INFO: started wildlife camera recording

INFO: new frame processing...

convert format:

classify picture:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.035156

lego: 0.964844

INFO: new frame processing...

convert format:

classify picture:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.367188

lego: 0.632812

INFO: new frame processing...

convert format:

classify picture:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.593750

lego: 0.406250

INFO: new frame processing...

convert format:

classify picture:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.476562

lego: 0.523437

INFO: new frame processing...

convert format:

classify picture:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.351563

lego: 0.648437

INFO: new frame processing...

convert format:

classify picture:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.324219

lego: 0.675781
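As a sanity check on the settings printed in the log, the clip offsets (X: 80, Y: 40) and the frame size (25600) are what a centered 160x160 crop of a 320x240 frame would give. A minimal sketch of that arithmetic, assuming the crop is centered; `centerCropOffset` and `framePixels` are hypothetical helpers for illustration, not functions from the Spresense SDK or the Edge Impulse library:

```cpp
// Top-left corner of a crop window inside a larger frame.
struct CropOffset {
    int x;
    int y;
};

// Hypothetical helper: offset that centers a clipW x clipH window
// inside a rawW x rawH frame. Assumes the clip fits inside the frame.
constexpr CropOffset centerCropOffset(int rawW, int rawH, int clipW, int clipH) {
    return CropOffset{(rawW - clipW) / 2, (rawH - clipH) / 2};
}

// Hypothetical helper: number of pixels in the clipped frame.
constexpr int framePixels(int clipW, int clipH) {
    return clipW * clipH;
}
```

Calling `centerCropOffset(320, 240, 160, 160)` yields the offsets from the log, and `framePixels(160, 160)` yields the logged frame size, so the crop settings themselves look identical between the two runs.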

And here are the results when it is using the image from takePicture(). There is still some variance, which is odd since it should be the exact same image each time, but the lego score only varies by about 10 percentage points (0.87 to 0.96).

INFO: wildlife_camera initializing on wakeup...

Inferencing settings:

Image resolution: 160x160

Frame size: 25600

No. of classes: 2

Raw Image Width: 320 Height: 240

Clip Width: 160 Height: 160

Offset X: 80 Y: 40

Starting the camera:

Starting sending data:

Set format:

INFO: started wildlife camera recording

resize:

convert format:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.078125

lego: 0.921875

resize:

convert format:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.132812

lego: 0.867187

resize:

convert format:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.105469

lego: 0.894531

resize:

convert format:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.035156

lego: 0.964844

resize:

convert format:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.078125

lego: 0.921875

resize:

convert format:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.050781

lego: 0.949219

resize:

convert format:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.066406

lego: 0.933594

resize:

convert format:

Predictions (DSP: 21 ms., Classification: 4319 ms., Anomaly: 0 ms.):

background: 0.105469

lego: 0.894531

Here is an update of my program: spresense-picture.ino · GitHub