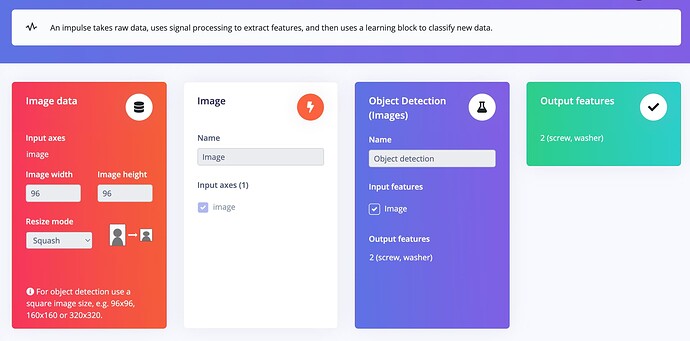

Preface: This is my first time doing a computer vision project with a service like this, and it will be used for my graduation project. The goal is a FOMO model that can detect multiple object types.

I keep running into either OOM or time-limit problems when trying to train my model, and I have difficulty understanding the underlying cause. I understand the basics: I’m using too much memory, or training the model takes too long. What I don’t understand is why.

I have a few different datasets available to me, with varying sizes, resolutions, objects, etc. The largest images in the original datasets are 1920x1200. I am assuming that creating an impulse at 640x640 will require much more RAM than one at 320x320, but will also increase accuracy. So it seems to be a matter of balancing the image resolution against the size of the dataset (2000 images, for example).
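As a rough sketch of the arithmetic behind that assumption: the snippet below estimates how much memory the decoded images alone would take at each impulse resolution. The `float32` storage (4 bytes per value), the idea that the whole dataset sits in RAM at once, and the helper names `image_bytes` and `dataset_mib` are all my own assumptions for illustration. I don't know how the training pipeline actually stores or streams images, and this ignores the model's activations and weights entirely.

```python
def image_bytes(width, height, channels, bytes_per_value=4):
    """Bytes for one decoded image held as a dense array (float32 assumed)."""
    return width * height * channels * bytes_per_value


def dataset_mib(num_images, width, height, channels, bytes_per_value=4):
    """MiB needed if every image were held in memory at once (an assumption)."""
    total = num_images * image_bytes(width, height, channels, bytes_per_value)
    return total / (1024 ** 2)


rgb_640 = image_bytes(640, 640, 3)    # 640x640 RGB
rgb_320 = image_bytes(320, 320, 3)    # 320x320 RGB
gray_320 = image_bytes(320, 320, 1)   # 320x320 grayscale

# Halving each side quarters the pixel count, so memory drops by 4x.
ratio = rgb_640 / rgb_320

print(f"640x640 RGB:  {rgb_640} bytes per image")
print(f"320x320 RGB:  {rgb_320} bytes per image")
print(f"320x320 gray: {gray_320} bytes per image")
print(f"640 vs 320 ratio: {ratio}")
print(f"2000 images at 640x640 RGB: {dataset_mib(2000, 640, 640, 3)} MiB")
print(f"2000 images at 320x320 RGB: {dataset_mib(2000, 320, 320, 3)} MiB")
```

Under these assumptions the footprint scales linearly with the number of images and with the pixel count (width times height), and the original 1920x1200 size would only matter if the images are not resized to the impulse resolution before training.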

However, I’ve also had issues with a different, smaller dataset (set to 320x320, grayscale). There I would run into OOM problems after training around 50-60 batches, or the job would be evicted from the cluster just as training was about to begin, even though the RAM consumption should be much lower.

So my questions are these:

- What is the exact relationship between dataset sizes, their original resolutions, and the resolution set in the impulse? There must be something obvious I’m not grasping here.

- Regarding the time issues, I would assume that because the impulse images are still somewhat large, it takes much longer to process them. Correct?

- What could be the problem regarding the OOM eviction? It happened even when setting very loose training parameters for testing purposes (1 cycle, 0.002 learning rate).

Side note: Is there a way to get more training time for a job?