Hi Team,

I’m trying to run standalone inference of my model in Code Composer Studio (CCS) on a TI CC1352P.

By following the guide, I have made some progress: I can get results for a single window locally in Code Composer Studio.

It would be great if I could run run_classifier continuously on successive slices (windows) of one full time-series dataset.

Could you shed some light on this?

Thanks & Regards,

Keerthivasan

Hi @Keerthivasan,

I’m not sure if you are working with audio data, but this guide might be helpful for running continuous inference on successive slices of windows (i.e. a “rolling window”): Continuous audio sampling - Edge Impulse Documentation. You will need to use the run_classifier_init() and run_classifier_continuous() functions instead of run_classifier().
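To illustrate the rolling-window idea in plain C, here is a minimal sketch that holds one window of raw samples and, once the window is full, shifts out the oldest slice each time a new slice arrives and reclassifies. This is not how the SDK implements run_classifier_continuous(), which manages its own internal buffers. `WINDOW`, `SLICE`, `push_slice()` and `classify_window()` are hypothetical names, and `classify_window()` is only a stub (it returns the window mean) standing in for a real model call.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define WINDOW 8   /* hypothetical window length in samples */
#define SLICE  2   /* hypothetical slice length (WINDOW / 4) */

static float window_buf[WINDOW];
static size_t filled = 0;   /* number of valid samples in window_buf */

/* Stub classifier: returns the mean of the window instead of running a model. */
static float classify_window(const float *w, size_t n) {
    float sum = 0.0f;
    for (size_t i = 0; i < n; i++) sum += w[i];
    return sum / (float)n;
}

/* Append one slice of SLICE samples. Returns 1 and writes *out once the
   window is full (and on every slice after that), otherwise returns 0. */
static int push_slice(const float *slice, float *out) {
    assert(WINDOW % SLICE == 0);
    if (filled < WINDOW) {
        /* still filling the first window */
        memcpy(&window_buf[filled], slice, SLICE * sizeof(float));
        filled += SLICE;
        if (filled < WINDOW) return 0;
    } else {
        /* drop the oldest slice and append the new one at the end */
        memmove(window_buf, &window_buf[SLICE], (WINDOW - SLICE) * sizeof(float));
        memcpy(&window_buf[WINDOW - SLICE], slice, SLICE * sizeof(float));
    }
    *out = classify_window(window_buf, WINDOW);
    return 1;
}
```

Feeding it slices as they arrive yields one classification per slice once the first full window has been collected, which is the behaviour the continuous API is designed to give you.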

Hi @shawn_edgeimpulse

I’m not using audio data; we are using time-series data from a PPG sensor, i.e. not high-frequency data like an audio signal. So I tried to use run_classifier itself to get results for successive windows in Code Composer Studio. It works with the smaller model, whose input feature (frame) size is about 585, with a raw sample count of 50 per window.

But when I tried another model, which has an input feature (frame) size of about 4355 and a raw sample count of 344 per window, run_classifier fails.

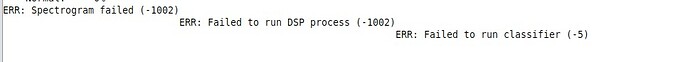

It shows the following errors:

```
ERR: Spectrogram failed (-1002 )
ERR: Failed to run DSP process (-1002)
ERR: Failed to run classifier (-5)
```

Why does this error occur? Kindly help me sort this out.

Regards,

Keerthivasan.

Hi @Keerthivasan,

Can you share your project ID and/or inference code? That would help us understand what you are trying to do.

Did you set the debug parameter to true in run_classifier_continuous()?

Hello @shawn_edgeimpulse

I’m not using the run_classifier_continuous() API call. I can produce output with run_classifier() itself for all windows, but the problem is that it only works for the model with the minimal RAM size (6 kB).

When we try another model that needs 13 kB of RAM, it shows the errors mentioned above.

Here are the inference code and the main-thread code for your reference.

Inference.cpp:

```cpp
extern "C" ei_impulse_result_t ei_infer(float *data, size_t len, bool debug)
{
    signal_t signal;
    numpy::signal_from_buffer(data, len, &signal);

    ei_impulse_result_t result = { 0 };

    EI_IMPULSE_ERROR r = run_classifier_continuous(&signal, &result, debug);
    if (r != EI_IMPULSE_OK) {
        ei_printf("ERR: Failed to run classifier (%d)\r\n", r);
        while (1);  // halt on error
    }

    // print the predictions, but only if valid labels are present
    if (!result.label_detected) {
        ei_printf("\r\nPredictions (DSP: %d ms., Classification: %d ms., Anomaly: %d ms.): \r\n",
                  result.timing.dsp, result.timing.classification, result.timing.anomaly);
        for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++) {
            ei_printf(" %s: \t", result.classification[ix].label);
            // print the score as an integer percentage
            ei_printf("%d%%", (int32_t) (result.classification[ix].value * 100.0));
            ei_printf("\r\n");
        }
    }

    return result;
}
```

Mainthread.c:

```c
void *mainThread(void *arg0)
{
    spi_init();
    clearMeasurements();
    sensorDataCollects();

    // ei_init: initialize run_classifier_init(), UART2 and the timer
    ei_init();

    /* TotalLen holds the total number of raw samples in fullsample[].
       Each iteration copies one window (EI_CLASSIFIER_RAW_SAMPLE_COUNT samples)
       into data[] and sends it to the model to classify. The loop stops before
       a window would read past the end of fullsample[]. */
    while (start_index + EI_CLASSIFIER_RAW_SAMPLE_COUNT <= TotalLen) {
        for (int i = 0, j = start_index; j < start_index + EI_CLASSIFIER_RAW_SAMPLE_COUNT; i++, j++) {
            data[i] = fullsample[j];
        }

        ei_impulse_result_t result = ei_infer(data, EI_CLASSIFIER_RAW_SAMPLE_COUNT, false);

        if (!result.label_detected) {
            // pick the label with the highest score
            float max_val = 0.0;
            uint16_t result_idx = 0;
            for (uint16_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++) {
                if (max_val < result.classification[ix].value) {
                    max_val = result.classification[ix].value;
                    result_idx = ix;
                }
            }
            Result_count = result_idx;
        }

        start_index = start_index + EI_CLASSIFIER_RAW_SAMPLE_COUNT;
        count++;
    }

    return 0;
}
```
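When classifying a fully buffered series window by window, it can help to compute the number of complete windows up front, so that every copied window satisfies `start + window <= total` and never reads past the end of the buffer. The sketch below does that and also allows a `hop` (stride) smaller than the window for overlapping windows. `count_windows()`, `run_all_windows()` and `threshold_classify()` are hypothetical helpers; `threshold_classify()` is only a stub standing in for a call such as ei_infer().

```c
#include <assert.h>
#include <stddef.h>

/* Callback that classifies one window and returns a label index. */
typedef size_t (*classify_fn)(const float *window, size_t len);

/* Number of complete windows of `window` samples that fit in `total`
   samples when the start index advances by `hop` samples each step. */
static size_t count_windows(size_t total, size_t window, size_t hop) {
    assert(window > 0 && hop > 0);
    if (total < window) return 0;
    return (total - window) / hop + 1;
}

/* Stub classifier: label 1 if the window mean exceeds 0.5, else label 0. */
static size_t threshold_classify(const float *w, size_t len) {
    float sum = 0.0f;
    for (size_t i = 0; i < len; i++) sum += w[i];
    return (sum / (float)len > 0.5f) ? 1u : 0u;
}

/* Classify every complete window in `series`, storing one label per window
   in `labels` (which must hold count_windows(...) entries). Returns the
   number of windows classified. */
static size_t run_all_windows(const float *series, size_t total,
                              size_t window, size_t hop,
                              classify_fn classify, size_t *labels) {
    size_t n = count_windows(total, window, hop);
    for (size_t w = 0; w < n; w++) {
        /* w * hop + window <= total holds for every w < n */
        labels[w] = classify(&series[w * hop], window);
    }
    return n;
}
```

With `hop` equal to `window` this gives non-overlapping successive windows, as in mainThread(); a smaller `hop` gives overlapping windows at the cost of more inference calls. Any trailing samples that do not fill a whole window are skipped.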

It looks like you are using the run_classifier_continuous() API call in your ei_infer() function:

```cpp
EI_IMPULSE_ERROR r = run_classifier_continuous(&signal, &result, debug);
```

Could you try using run_classifier() instead of run_classifier_continuous() to see if that works?

What is in your ei_init() function?

Also, how are you replacing your model (from the 6 kB to the 13 kB model)? Which files/folders are you replacing? Note that you must replace the entire C++ SDK library (you can’t just replace the tflite-model/ directory).

I had tried with only the run_classifier() API call, and it worked for the minimal model.

While debugging I changed it to run_classifier_continuous() and forgot to change it back.

> Also, how are you replacing your model (from the 6kB to the 13 kB model)? Which files/folders are you replacing?

I replaced all of the model deployment files, not only the tflite-model/ directory. In ei_init() I initialize UART2 and the timer, and call run_classifier_init().

The problem is now solved. For the 13 kB model, CCS needs about twice the heap size. After increasing HEAPSIZE in the linker command file (the `<target_name>.cmd` file), it started running inference on successive windows.

```
HEAPSIZE = 0x7530; /* Size of heap buffer used by HeapMem */
```
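A back-of-the-envelope check helps explain why the heap size mattered. Assuming 4-byte floats, the feature buffer alone is 585 × 4 = 2,340 bytes for the smaller model and 4355 × 4 = 17,420 bytes for the larger one, against a heap of 0x7530 = 30,000 bytes after the change. As far as I can tell, -1002 in the log is the SDK's DSP out-of-memory code (`EIDSP_OUT_OF_MEM`), which fits this explanation. The sketch below encodes that arithmetic; `HEAPSIZE_BYTES`, `feature_buffer_bytes()` and `check_feature_budget()` are hypothetical helpers, and the estimate ignores the DSP block's intermediate buffers and other heap users, so treat it only as a lower bound.

```c
#include <assert.h>
#include <stddef.h>

/* HEAPSIZE from the linker command file above: 0x7530 = 30000 bytes. */
#define HEAPSIZE_BYTES 0x7530u

/* Bytes for one buffer of `frame_size` float features. Lower bound only:
   the DSP block (e.g. the spectrogram) also allocates intermediate buffers. */
static size_t feature_buffer_bytes(size_t frame_size) {
    return frame_size * sizeof(float);
}

/* Debug-time guard: asserts if the feature buffer alone exceeds the heap. */
static void check_feature_budget(size_t frame_size) {
    assert(feature_buffer_bytes(frame_size) <= HEAPSIZE_BYTES);
}
```

Passing this check does not guarantee that inference will fit; it only rules out configurations that cannot possibly fit.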

Thanks & Regards,

Keerthivasan M


Hi @Keerthivasan,

Ah! Good catch on increasing the heap size. Glad to hear you got it working!
