Hi @Sina,

Thanks for using Edge Impulse! I just checked your project and your validation accuracy is 91.6%, so it looks like you resolved the issue with the mismatch? That said, here are some notes that should help improve performance overall:

Steps per epoch

First up, I noticed that you are setting steps_per_epoch=1 in your call to model.fit. This means that you are only using one batch of data per epoch. You have 32047 samples and a batch size of 100, so you are currently throwing away almost 99.7% of your dataset.

I would recommend removing this parameter altogether. It’s almost guaranteed to cause overfitting, since you are training your model on the same 100 samples every epoch and ignoring most of your data.

Dataset repeating

In the code your dataset is being repeated 10 times:

train_dataset= train_dataset.repeat(10)

Since each epoch goes through the dataset once, there’s no need to do this—you can just multiply your number of epochs by 10. Plus, since you set steps_per_epoch=1, you were only using the first 100 samples of the dataset during each epoch anyway, so this repeat was having no effect. I’d recommend leaving this one out.

Validation frequency

With validation_freq=50 you’re running validation only every 50 epochs; to get some insight into potential overfitting it’s useful to leave this on for every epoch.

Number of epochs

Since your dataset was being reduced to only 100 samples, you had a very high number of epochs set. With more samples a lower number will work.

New model version

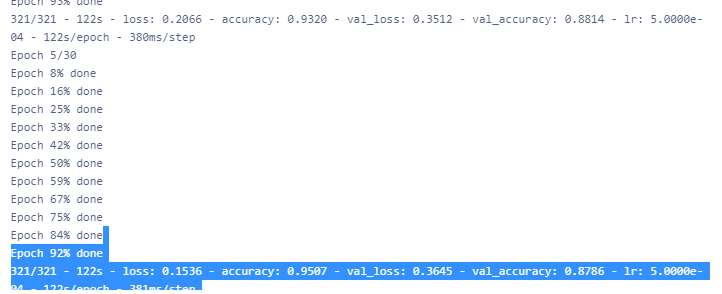

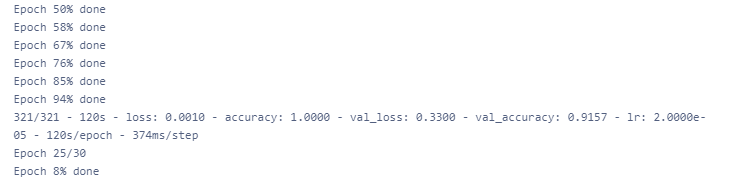

After making these changes in a new model version I’m seeing 88% validation accuracy after 5 epochs, which is promising—you can try training for more epochs and see how high you can get it.

Reasons for drop in accuracy between the logs and the final model

There are a couple of things that can cause a drop in accuracy between the last epoch and the final printed value. They are:

- By default the UI displays the accuracy for the quantized model, which has been reduced in precision so that it runs more quickly and takes up less space when deployed to an embedded device. Sometimes the quantized model has lower accuracy than the original model.

- At the end of training, we use the model at whichever epoch has the lowest validation loss. This may not be the model from the final epoch. It may also not be the model that has the lowest accuracy, since loss is a better measure of the model’s overall representation of the dataset.

I hope this helps! Let me know if you have any more questions.

Warmly,

Dan