@rjames

TL;DR:

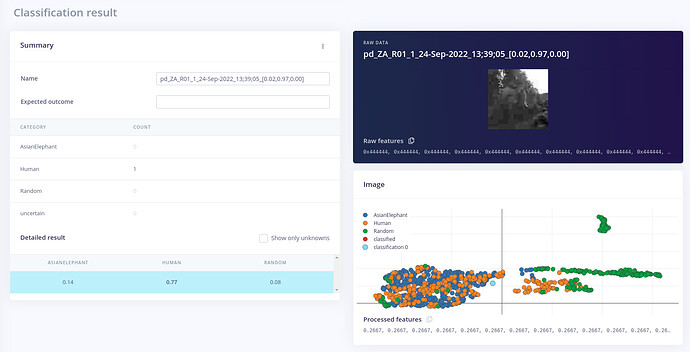

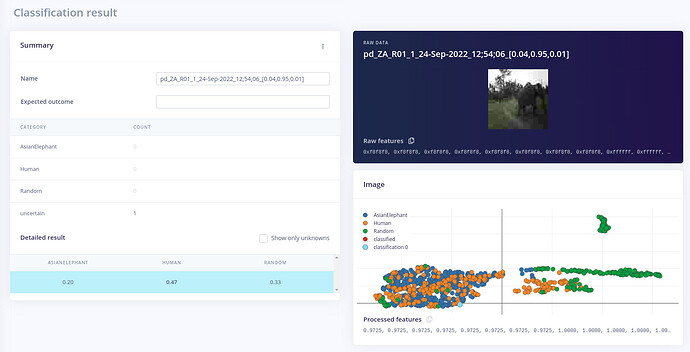

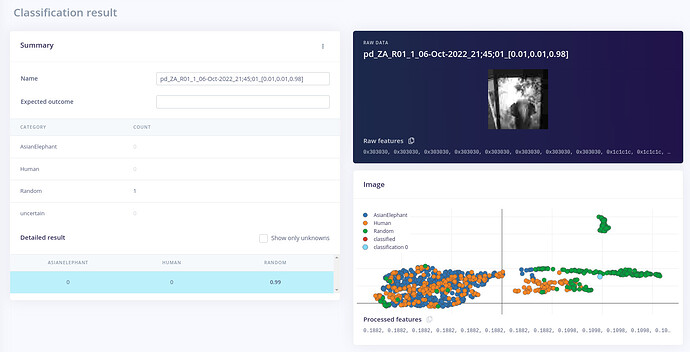

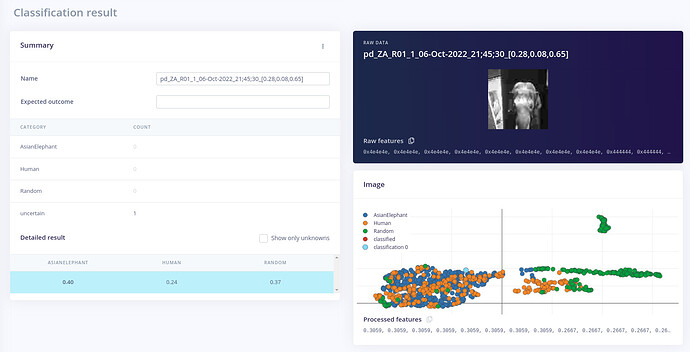

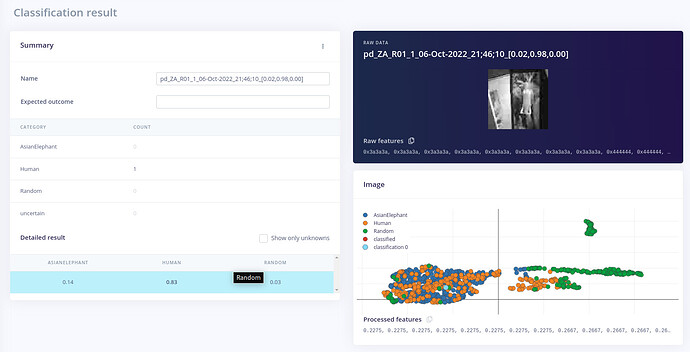

Even with the image converted to grayscale, the results of the on-device (edge) implementation differ drastically from live classification.

I did some preprocessing as suggested. Here are the steps, in order:

- Capturing the image in grayscale at 320x240.
- Converting the captured raw buffer to RGB888.
- Resizing it to 96x96.
- Passing the data to the classifier.
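The buffer sizes implied by these steps can be checked with a little arithmetic. This is a minimal sketch assuming 1 byte per pixel for grayscale and 3 bytes per pixel for RGB888; `frame_bytes` is a hypothetical helper, not part of esp32-camera or the Edge Impulse SDK.

```c
#include <stddef.h>

/* Hypothetical helper: bytes needed for a w x h frame with `channels`
 * interleaved bytes per pixel. */
static size_t frame_bytes(size_t w, size_t h, size_t channels) {
    return w * h * channels;
}
```

With these assumptions, the grayscale QVGA frame is `frame_bytes(320, 240, 1)` = 76800 bytes, `rgb888_matrix` needs at least `frame_bytes(320, 240, 3)` = 230400 bytes, and `resized_matrix` needs at least `frame_bytes(96, 96, 3)` = 27648 bytes.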

Here is the camera configuration I use to capture grayscale frames on the ESP32-CAM (OV2640):

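```c
static camera_config_t camera_config = {
    // ... other fields (pins etc.) omitted in this snippet ...
    .xclk_freq_hz = 20000000,
    .pixel_format = PIXFORMAT_GRAYSCALE,
    .frame_size = FRAMESIZE_QVGA,   // 320x240
    .jpeg_quality = 10,             // only relevant for PIXFORMAT_JPEG
    .fb_count = 2,
};
```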

Once I have the raw buffer, I convert it to RGB888 and store the result in `rgb888_matrix`:

```c
fmt2rgb888(pic->buf, pic->len, pic->format, rgb888_matrix);
```
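For a `PIXFORMAT_GRAYSCALE` frame, converting to RGB888 amounts to copying each 8-bit luminance value into all three channels. The sketch below shows that idea in isolation; `gray_to_rgb888` is a hypothetical stand-in written for illustration, not the `fmt2rgb888()` implementation from esp32-camera. The channel order it writes is an assumption, but for grayscale input R, G and B are equal, so the order does not change the bytes.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical illustration: expand a 1-byte-per-pixel grayscale buffer into
 * a 3-byte-per-pixel buffer by repeating each value for all three channels.
 * `dst` must hold at least 3 * n_pixels bytes. */
static void gray_to_rgb888(const uint8_t *src, size_t n_pixels, uint8_t *dst) {
    for (size_t i = 0; i < n_pixels; i++) {
        uint8_t v = src[i];
        dst[3 * i + 0] = v;
        dst[3 * i + 1] = v;
        dst[3 * i + 2] = v;
    }
}
```

Inspecting a few bytes of `rgb888_matrix` after the real conversion and checking that each triple holds three equal values is a quick way to confirm the conversion step behaves this way.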

After this, I resize the result to 96x96 using:

```c
image_resizer(resized_matrix, rgb888_matrix, EI_CLASSIFIER_INPUT_WIDTH, EI_CLASSIFIER_INPUT_HEIGHT, 3, pic->width, pic->height);
```

The `image_resizer()` function:

```c
#include <math.h>   // fabs(), round()
#include <stdint.h>

// DL_IMAGE_MAX, DL_IMAGE_MIN and image_zoomIn_twice() are defined elsewhere (not shown).

// Bilinear resize of an interleaved dst_c-channel image from src_w x src_h to dst_w x dst_h.
// The source is assumed to have the same number of channels as the destination.
void image_resizer(uint8_t *dst_image, uint8_t *src_image, int dst_w, int dst_h,
                   int dst_c, int src_w, int src_h) {
    float scale_x = (float)src_w / dst_w;
    float scale_y = (float)src_h / dst_h;

    int dst_stride = dst_c * dst_w;
    int src_stride = dst_c * src_w;

    if (fabs(scale_x - 2) <= 1e-6 && fabs(scale_y - 2) <= 1e-6) {
        // Source is exactly twice the destination size on both axes: use the dedicated helper.
        image_zoomIn_twice(dst_image, dst_w, dst_h, dst_c, src_image, src_w, dst_c);
    } else {
        for (int y = 0; y < dst_h; y++) {
            float fy[2];
            fy[0] = (float)((y + 0.5) * scale_y - 0.5); // y
            int src_y = (int)fy[0];                     // y1
            fy[0] -= src_y;                             // y - y1
            fy[1] = 1 - fy[0];                          // y2 - y
            src_y = DL_IMAGE_MAX(0, src_y);
            src_y = DL_IMAGE_MIN(src_y, src_h - 2);

            for (int x = 0; x < dst_w; x++) {
                float fx[2];
                fx[0] = (float)((x + 0.5) * scale_x - 0.5); // x
                int src_x = (int)fx[0];                     // x1
                fx[0] -= src_x;                             // x - x1
                if (src_x < 0) {
                    fx[0] = 0;
                    src_x = 0;
                }
                if (src_x > src_w - 2) {
                    fx[0] = 0;
                    src_x = src_w - 2;
                }
                fx[1] = 1 - fx[0]; // x2 - x

                // Weighted sum of the four neighbouring source pixels.
                for (int c = 0; c < dst_c; c++) {
                    dst_image[y * dst_stride + x * dst_c + c] = round(
                        src_image[src_y * src_stride + src_x * dst_c + c] * fx[1] * fy[1] +
                        src_image[src_y * src_stride + (src_x + 1) * dst_c + c] * fx[0] * fy[1] +
                        src_image[(src_y + 1) * src_stride + src_x * dst_c + c] * fx[1] * fy[0] +
                        src_image[(src_y + 1) * src_stride + (src_x + 1) * dst_c + c] * fx[0] * fy[0]);
                }
            }
        }
    }
}
```
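To see which branch `image_resizer()` takes for this input, the scale factors and the source coordinate each output pixel samples from can be computed with the same formulas it uses. This is a minimal sketch; `src_coord` and `uses_2x_path` are hypothetical helpers that mirror the `(i + 0.5) * scale - 0.5` mapping and the 2x check in the function above.

```c
#include <math.h>

/* Hypothetical helper mirroring image_resizer(): maps output index `i` to the
 * fractional source coordinate it samples, using pixel centres. */
static float src_coord(int i, int src_len, int dst_len) {
    float scale = (float)src_len / dst_len;
    return (float)((i + 0.5) * scale - 0.5);
}

/* Returns 1 if image_resizer() would take its 2x branch for these sizes. */
static int uses_2x_path(int src_w, int src_h, int dst_w, int dst_h) {
    float scale_x = (float)src_w / dst_w;
    float scale_y = (float)src_h / dst_h;
    return fabs(scale_x - 2) <= 1e-6 && fabs(scale_y - 2) <= 1e-6;
}
```

For 320x240 to 96x96, `scale_x` is about 3.33 and `scale_y` is 2.5, so the 2x branch is not taken and the two axes are scaled by different factors, meaning the 4:3 frame is squashed to 1:1. For example, output row 0 samples source row 0.75.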

The data is then passed to the classifier with `run_classifier(&features_signal, &result, false);`, but the result does not match the live classification.
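One place worth checking is how `resized_matrix` is exposed through `features_signal`. The sketch below assumes that Edge Impulse image models take one float per pixel holding the packed value 0xRRGGBB (which is how raw image features are shown in the Studio) and that the buffer is in R, G, B byte order. `pack_rgb888_pixels` is a hypothetical helper; it only takes the same `(offset, length, out_ptr)` shape as the SDK's `signal_t::get_data` callback, and the wiring into that callback is not shown.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: packs `length` RGB888 pixels, starting at pixel index
 * `offset`, into one float per pixel holding 0xRRGGBB. Assumes R, G, B byte
 * order; a BGR buffer would need bytes 0 and 2 swapped. Values up to 0xFFFFFF
 * fit exactly in a float's 24-bit mantissa. */
static void pack_rgb888_pixels(const uint8_t *rgb, size_t offset, size_t length, float *out) {
    for (size_t i = 0; i < length; i++) {
        const uint8_t *p = rgb + 3 * (offset + i);
        uint32_t packed = ((uint32_t)p[0] << 16) | ((uint32_t)p[1] << 8) | p[2];
        out[i] = (float)packed;
    }
}
```

For a grayscale pixel with value `v`, this yields `v * 0x010101`, so printing the first few floats on the device and comparing them with the raw features of the same image in the Studio would show whether the inputs already differ before inference.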

Is there something I’ve missed in the preprocessing?

Attached are sample classification results on the edge, along with the live classification result for the same image.

More images follow.

Awaiting your response @rjames

Thanks,

Abu.