Hi,

I’m having problems deploying my model to my Arduino Nano 33 BLE Sense. I am using the static buffer example. I read 6 analog pins of the Arduino, concatenate the readings, and repeat this 10 times to get a feature vector of size 60. Then I start the prediction. In non-prediction mode, I instead print the data to the serial port so that the data forwarder CLI can send training data to Edge Impulse.
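To make the sampling scheme concrete, here is a minimal host-compilable sketch of how such a 60-value buffer could be assembled and sanity-checked before classification. It is not the actual script. The names `fill_features`, `all_finite`, `ReadFn`, and the constants are hypothetical, and the interleaved ordering (all 6 pins for reading 0, then all 6 for reading 1, and so on) is an assumption based on the data forwarder sending one line per reading. On the board, the reader callback would wrap `analogRead`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Hypothetical constants matching the description: 6 analog pins, 10 readings each.
constexpr std::size_t kNumPins = 6;
constexpr std::size_t kNumSamples = 10;
constexpr std::size_t kFeatureCount = kNumPins * kNumSamples;  // 60 values

// Hypothetical reader signature; on the Arduino this would wrap analogRead(pin).
using ReadFn = int (*)(std::size_t pin);

// Fills `features` in interleaved order: all pins for reading 0, then reading 1, ...
// Stops early if `capacity` is reached. Returns the number of values written.
std::size_t fill_features(float* features, std::size_t capacity, ReadFn read_pin) {
    assert(features != nullptr && read_pin != nullptr);
    std::size_t ix = 0;
    for (std::size_t s = 0; s < kNumSamples; ++s) {
        for (std::size_t p = 0; p < kNumPins; ++p) {
            if (ix >= capacity) {
                return ix;
            }
            features[ix++] = static_cast<float>(read_pin(p));
        }
    }
    return ix;
}

// Returns true when every value is finite (no NaN and no infinity).
bool all_finite(const float* values, std::size_t count) {
    for (std::size_t i = 0; i < count; ++i) {
        if (!std::isfinite(values[i])) {
            return false;
        }
    }
    return true;
}
```

Checking that `fill_features` returned exactly 60 and that `all_finite` holds before calling the classifier would at least rule out a partially filled or corrupted input buffer as the source of the nan outputs.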

In data forwarder mode, classification works perfectly fine and I can see the predictions in the “Live classification” tab. However, when I download the library and enable prediction (by setting `bool flag_prediction_mode = true;`), the output of the algorithm is always nan:

run_classifier returned: 0

Predictions (DSP: 0 ms., Classification: 1 ms., Anomaly: 0 ms.):

[nan, nan, nan]

position_bonne: nan

position_mauvaise: nan

siege_vide: nan

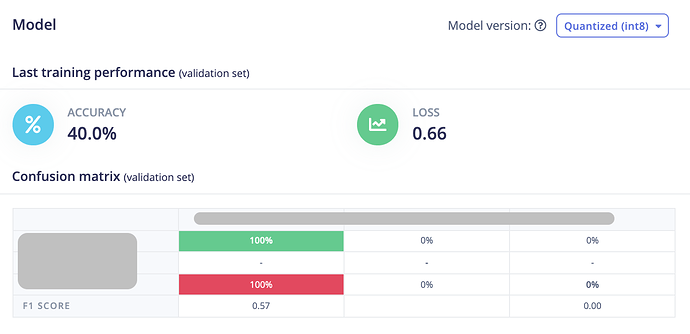

I used the default parameters when training the model, with a Flatten block, a Raw data block, and a neural network classifier. The script is posted in the reply. Project number: 51781
