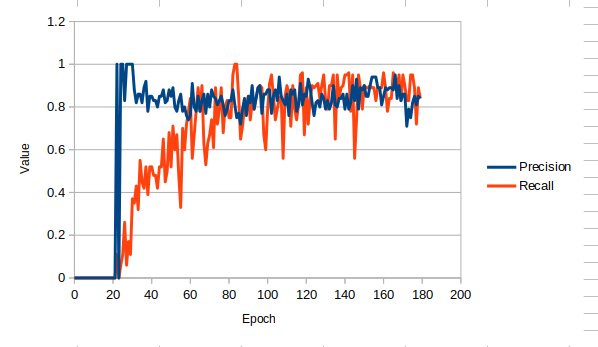

Why would Precision abruptly change from 0 to unity?

Training Cycles = 60

Learning Rate = 0.0001

Hello @MMarcial,

Could you share a project ID that I can share internally so our ML expert can have a deeper look please?

Best,

Louis

I saved the project under Version 1 because by the time the devs get to it, the project may have changed quite a bit.

The previous post used:

- FOMO MobileNetV2 0.35
- Training Cycles = 60
- Learning Rate = 0.0001? ← didn’t log this info
- 2 output classes

I ran again with:

- FOMO MobileNetV2 0.1
- Training Cycles = 180
- Learning Rate = 0.0005
- 3 output classes

and got this chart:

It exhibits a step function but then oscillates, as one might expect, whereas in the first case it got stuck at unity.

Project ID: 136034

Hi @MMarcial,

Thanks so much for using our platform! I took a look at your project and it appears your dataset is highly imbalanced; you only have a few labelled examples for one of your classes. This tiny number of examples is what’s leading to your precision changing as a step function.

It’s also worth noting that you probably won’t get good real-world performance until you have added more examples of the minority class.
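To illustrate why a tiny number of examples produces steps, here is a minimal sketch in plain Python (not Edge Impulse code). It assumes the usual definition precision = TP / (TP + FP) and the common convention that precision is reported as 0 when nothing is predicted positive. The helper name `reachable_precisions` is hypothetical.

```python
def precision(tp, fp):
    # Assumed convention: report 0.0 when there are no positive predictions.
    return tp / (tp + fp) if (tp + fp) else 0.0


def reachable_precisions(max_predicted):
    """List every precision value possible with at most `max_predicted`
    positive predictions for a class (hypothetical helper)."""
    values = set()
    for predicted in range(max_predicted + 1):
        for tp in range(predicted + 1):
            fp = predicted - tp
            values.add(round(precision(tp, fp), 4))
    return sorted(values)


# With a single positive prediction, precision can only be 0 or 1.
print(reachable_precisions(1))
# With up to three, only a handful of values exist between 0 and 1.
print(reachable_precisions(3))
```

With so few possible values, one prediction flipping from wrong to right is enough to move the curve from 0 to 1 in a single step.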

Warmly,

Dan

F1, precision and recall aren’t smooth with respect to the FOMO loss function, so it’s expected that they’ll have large discrete jumps and the stochastic variance you see (which is the model jumping between solutions that are equivalent with respect to the loss).
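A toy sketch of that effect, under loud assumptions: binary cross-entropy stands in for the FOMO loss (the actual loss is not shown in this thread), the scores are made up, and the decision threshold is fixed at 0.5. The loss falls a little at every step, while precision jumps from 0 to 1 at the moment the single positive example crosses the threshold.

```python
import math


def bce(p, y):
    # Binary cross-entropy for one prediction; a stand-in loss, not FOMO's.
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


THRESHOLD = 0.5                    # assumed decision threshold
negative_scores = [0.1, 0.2, 0.1]  # made-up scores for three negative cells

history = []
for step in range(11):
    # The score of the single positive example rises slowly: 0.32 ... 0.72
    positive_score = 0.32 + 0.04 * step
    loss = (bce(positive_score, 1)
            + sum(bce(s, 0) for s in negative_scores)) / 4
    tp = int(positive_score >= THRESHOLD)
    fp = sum(s >= THRESHOLD for s in negative_scores)
    prec = tp / (tp + fp) if (tp + fp) else 0.0
    history.append((loss, prec))

for step, (loss, prec) in enumerate(history):
    print(f"step {step:2d}  loss {loss:.3f}  precision {prec:.1f}")
```

The same mechanism would explain the oscillation in the second run: small changes in the weights barely move the loss but can push a few borderline predictions back and forth across the threshold.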

Mat