My Arduino Portenta Vision Shield just arrived. I will spend a bit of time with OpenMV, but I am wondering how well Edge Impulse is set up to work with it.

I assume the Arduino library is not ready yet, but I am hoping the MicroPython files are fully functional. Any suggestions?

The Edge Impulse Arduino libraries work as-is on the Portenta, but you’d need to hook up the camera to the signal_t get_data function to classify live images. I haven’t looked at the camera driver yet.

If the MicroPython environment works the same as OpenMV (I think it will at some point), you can reuse the OpenMV export, but I’m not sure whether that’s done already.
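To make the "hook up the camera to get_data" idea concrete, here is a minimal sketch in plain Python. The real callback lives in the C++ library; this only mirrors its general shape as I understand it (an offset, a length, and an output buffer to fill, returning 0 on success). The names `make_get_data` and `frame`, and the four-byte frame contents, are made up for illustration, and nothing here talks to the actual camera driver.

```python
from typing import Callable, List


def make_get_data(frame: bytes) -> Callable[[int, int, List[float]], int]:
    """Build a get_data-style callback over an 8-bit grayscale frame.

    `frame` is a hypothetical camera buffer with one byte per pixel.
    """

    def get_data(offset: int, length: int, out: List[float]) -> int:
        # Fill `out` with `length` pixels starting at pixel index `offset`.
        for i in range(length):
            gray = frame[offset + i]
            # Copy the gray value into the R, G and B positions (0xRRGGBB).
            out[i] = float((gray << 16) | (gray << 8) | gray)
        return 0  # 0 signals success in this sketch

    return get_data


# Hypothetical four-pixel frame; a real frame would come from the camera.
frame = bytes([0x00, 0x2A, 0xFF, 0x80])
get_data = make_get_data(frame)

chunk = [0.0] * 2
status = get_data(1, 2, chunk)  # request pixels 1 and 2 only
```

The point of the offset and length arguments is that the classifier can pull the image in slices, so the whole frame never has to be copied into a second float buffer.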

Very impressive!

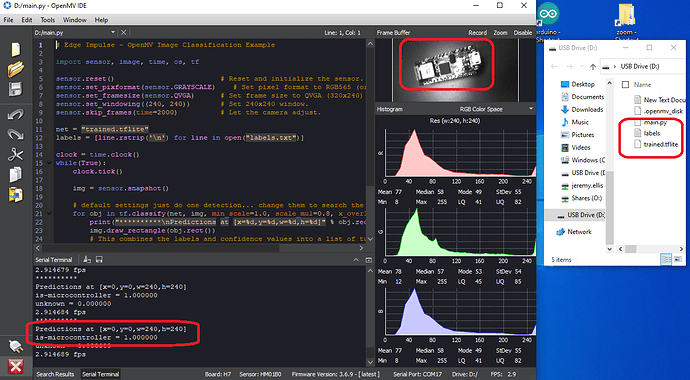

I built a camera impulse in Edge Impulse (with images taken on my cell phone), exported the OpenMV build with all default settings, and dragged the files onto the pop-up drive of the Portenta H7 with Vision Shield. I changed RGB565 to GRAYSCALE and it worked great.

I now have an Arduino Portenta correctly identifying a Nano 33 IoT as a microcontroller.

Oh wow! I didn’t realize the OpenMV compatibility layer was already released, awesome!

By the way, if you have one of these Portenta Vision Shields, especially one from the first shipments, there seems to be a potential electrical short if the camera touches the shield base, which it could very easily do. Make sure you put a bit of insulation between the camera and the base of the shield.

I thought I had a dead Vision Shield, but it was only a fried camera. I swapped the camera and everything is fine.

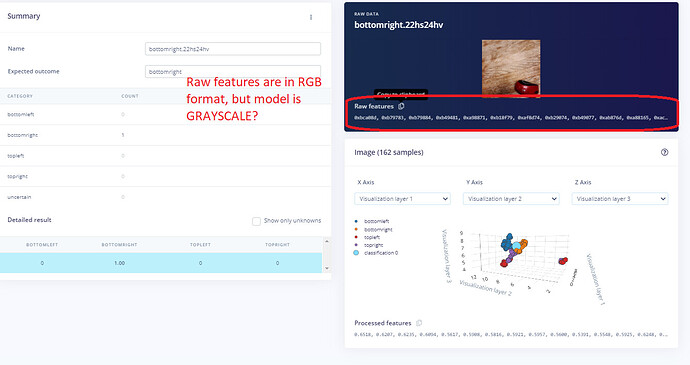

I am still getting used to GRAYSCALE processing on my Portenta Vision Shield. When I test my work, I use the Arduino_Static sketch and paste the raw data from the Live Classification area, but this live data is in color RGB format, whereas my Portenta Vision Shield will be sensing GRAYSCALE. See image.

I know the grayscale is in a strange RGB-style format, such as 0x2a2a2a, where each color channel has the same value. For testing, though, it looks like it is grabbing full color from the original data. Shouldn’t it be GRAYSCALE from the original? I have checked several times that my impulse is actually set to GRAYSCALE.
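One quick way to check whether a pasted list of raw features is really grayscale is to split each value into its three channels and see whether they match. The following is a rough sketch that assumes each feature is a packed 0xRRGGBB integer, as in the 0x2a2a2a example; the helper names and the sample values are made up for illustration.

```python
from typing import Iterable, Tuple


def unpack_rgb888(feature: int) -> Tuple[int, int, int]:
    """Split a packed 0xRRGGBB value into (r, g, b)."""
    return (feature >> 16) & 0xFF, (feature >> 8) & 0xFF, feature & 0xFF


def is_gray(feature: int) -> bool:
    """A pixel counts as gray when all three channels are equal."""
    r, g, b = unpack_rgb888(feature)
    return r == g == b


def color_fraction(features: Iterable[int]) -> float:
    """Fraction of pixels that carry real color (channels differ)."""
    values = list(features)
    if not values:
        return 0.0
    colored = sum(1 for value in values if not is_gray(value))
    return colored / len(values)


# Made-up sample: three gray pixels and one colored pixel.
sample = [0x2A2A2A, 0x1A44CC, 0x808080, 0x000000]
fraction = color_fraction(sample)
```

A fraction well above zero means the pasted features still hold the original color data, even if the impulse itself is set to grayscale.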

Thanks @janjongboom I was just getting to that way of thinking about it.

@janjongboom I have a question here. So sensing grayscale is specific to the Portenta camera, right? If we deploy an RGB model in Arduino format to the Portenta and use the raw features in the static_buffer script, will it perform inference even though the features are RGB? In that case, can the Arduino Nano 33 BLE Sense process those features as well, without using an external camera or module?

@janjongboom can probably answer better, but my 2 cents are:

An RGB impulse will run fine on both the Nano 33 BLE Sense with a camera and the Portenta, since the Portenta converts GRAYSCALE to a quasi-RGB format.

Instead of an RGB888 value such as 0x1a44cc, the Portenta might see 0x1a1a1a.

Theoretically, a GRAYSCALE impulse could be much smaller than an RGB impulse, so the GRAYSCALE Portenta might be able to load higher resolutions and run them faster. I have been testing bigger models, but my build typically times out.

The problem with RGB is that I could not get bigger than 48x48 pixels with the Nano 33 BLE Sense and the OV7670 camera. The image is also cropped rather than squished, so it is very hard to line up the camera with what you want to see. Are you getting better than that? What camera do you have with your Nano 33 BLE Sense?
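To put rough numbers on the size difference, this sketch compares raw frame sizes for the pixel formats mentioned in this thread. It only counts the bytes of one input image (1 byte per pixel for grayscale, 2 for RGB565, 3 for RGB888) and says nothing about the size of the network itself or its working memory, so treat it as a lower bound rather than a RAM estimate. The function name is made up for illustration.

```python
# Bytes needed to store one pixel in each format.
BYTES_PER_PIXEL = {"GRAYSCALE": 1, "RGB565": 2, "RGB888": 3}


def frame_bytes(width: int, height: int, pixel_format: str) -> int:
    """Raw size in bytes of a single frame in the given format."""
    return width * height * BYTES_PER_PIXEL[pixel_format]


# Build a small table of sizes for two common square resolutions.
sizes = {
    (side, fmt): frame_bytes(side, side, fmt)
    for side in (48, 96)
    for fmt in BYTES_PER_PIXEL
}

for (side, fmt), size in sizes.items():
    print(f"{side}x{side} {fmt}: {size} bytes")
```

Doubling the side length quadruples the pixel count, so a 96x96 grayscale frame takes more bytes than a 48x48 RGB888 frame.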

@dhruvsheth In the end, everything is automatically converted to RGB or grayscale in the DSP layer, depending on your impulse, so you can mix and match here. But as @Rocksetta said, the size of things increases.

@Rocksetta We now have new Transfer Learning models based on MobileNetV1. These are much smaller, and you can probably run 96x96 on the Nano 33 BLE Sense.
