With the TensorFlow Lite library download for the Arduino Nano 33 BLE Sense, there is an example sketch of a person detection model that does image classification with an Arducam OV2640. Is it possible to replace the person detection trained_tflite model and label information with those from a C++ library deployment from Edge Impulse, so that a custom image model runs on the Arduino Nano 33 BLE Sense?

@timlester Sure, if you follow the Adding sight to your sensors tutorial you can export as an OpenMV library. That gives you a tflite file and a labels.txt file.

Hi Jan, from what I can tell there is no direct deployment of an image classification model to an Arduino Nano 33 BLE Sense from Edge Impulse. So would editing the TFLite ‘person detection’ model with the files from an OpenMV library deployment be the best approach to getting the Nano 33 BLE Sense to do image classification, or am I overcomplicating this?

Ah, scrap that then. No, the easiest way is to deploy as an Arduino library and then follow the steps in https://docs.edgeimpulse.com/docs/running-your-impulse-arduino. Then just hook the camera output up to the run_impulse function and it works.

You say that like it’s easy, lol… two days later and I’m still trying. When I go the TensorFlow Lite route by changing the model and labels, I can tell that it’s detecting the images, but it’s not outputting the probabilities of each label. I’m assuming the C source file output of the Edge Impulse model is directly compatible with the TensorFlow Lite example model?

I’d prefer the route you suggested and stay within Edge Impulse deployments, but when I try the Arduino library deployment from Edge Impulse and try routing camera output to run_impulse, I get hung up trying to pass the camera output through the

std::function<void(float*, size_t)> data_fn

of the run_impulse function. I can find no documentation on the run_impulse function. Every search just pulls up run_classifier examples, but I know that the camera data must go through the run_impulse function to be processed for the run_classifier function. Is there any documentation on this that I’m missing? I mostly develop .NET apps, so forgive me if I’m missing something simple.

Hi @timlester,

I would suggest starting with the static_buffer Arduino example once you have imported the library.

The features[] array contains RGB values of your image (you can grab an example of raw features values in your Live Classification tab).
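
As far as I know, each entry in features[] is one pixel packed as 0xRRGGBB and stored as a float, which is why the raw features in Live Classification show up as hex values. A minimal sketch of that packing follows; the helper names are hypothetical and the layout is an assumption worth checking against your own raw features:

```cpp
#include <cstdint>

// Hypothetical helper: pack 8-bit R, G, B into one feature value (0xRRGGBB).
// Assumption: the image block expects one float per pixel in this layout.
float pack_rgb888(uint8_t r, uint8_t g, uint8_t b) {
    uint32_t packed = (static_cast<uint32_t>(r) << 16) |
                      (static_cast<uint32_t>(g) << 8) |
                      static_cast<uint32_t>(b);
    // Values up to 0xFFFFFF fit in a float's 24-bit mantissa without rounding.
    return static_cast<float>(packed);
}

// Hypothetical inverse, handy for checking a buffer by hand.
void unpack_rgb888(float feature, uint8_t &r, uint8_t &g, uint8_t &b) {
    uint32_t packed = static_cast<uint32_t>(feature);
    r = static_cast<uint8_t>((packed >> 16) & 0xFF);
    g = static_cast<uint8_t>((packed >> 8) & 0xFF);
    b = static_cast<uint8_t>(packed & 0xFF);
}
```

Unpacking a few values from your own buffer and comparing them against the Live Classification output is a quick way to confirm the layout before wiring in a camera.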

Once it works with the current static definition, you can dynamically fill the features array in the loop function based on your Arducam output.
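
To make the "fill the array dynamically" step concrete, here is a rough sketch that compiles without the SDK. `demo_signal_t` is a stand-in I made up so the example is self-contained; it assumes the real signal type exposes `total_length` and a `get_data` callback of this shape, as the static_buffer example does. The 48x48 size and the `-1` error code are also my assumptions:

```cpp
#include <cstddef>
#include <cstring>

// Stand-in for the SDK's signal type so this sketch compiles on its own.
// Assumption: the real type has total_length and a get_data callback like this.
struct demo_signal_t {
    size_t total_length;
    int (*get_data)(size_t offset, size_t length, float *out_ptr);
};

constexpr size_t kFrameSize = 48 * 48;  // one feature per pixel (assumed input size)
static float frame[kFrameSize];         // refreshed from the camera on every loop

// The classifier pulls the data in slices; copy only the requested window.
int frame_get_data(size_t offset, size_t length, float *out_ptr) {
    if (offset + length > kFrameSize) {
        return -1;  // out-of-range request (hypothetical error code)
    }
    std::memcpy(out_ptr, frame + offset, length * sizeof(float));
    return 0;
}

// Hypothetical per-loop step: refresh the buffer, then describe it as a signal.
demo_signal_t make_signal_from_pixels(const float *pixels) {
    std::memcpy(frame, pixels, sizeof(frame));
    demo_signal_t signal;
    signal.total_length = kFrameSize;
    signal.get_data = &frame_get_data;
    return signal;
}
```

In a real sketch the camera code would write into the buffer directly, and the resulting signal would be what gets passed to run_classifier.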

Side note: if you wish to train an image recognition model within our pipeline, you will need to decrease the image size to 48x48, as the Arduino BLE board is limited in RAM.

Aurelien
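
For a feel of the numbers behind the RAM note, a small calculation helps. This is only a sketch: it assumes one float feature per pixel and 256 KB of SRAM on the board (the nRF52840 figure), and it says nothing about the size of the TFLite arena, which is usually the larger cost and depends on the model:

```cpp
#include <cstddef>

// Bytes needed to hold one frame as floats, one float per pixel
// (assumption: one packed feature per pixel, as in the static_buffer example).
constexpr size_t frame_buffer_bytes(size_t width, size_t height) {
    return width * height * sizeof(float);
}

// Assumption: 256 KB of SRAM, shared with the stack, the JPEG buffer,
// and the TFLite tensor arena.
constexpr size_t kTotalSramBytes = 256 * 1024;

static_assert(frame_buffer_bytes(48, 48) == 9216, "48x48 float frame");
static_assert(frame_buffer_bytes(96, 96) == 36864, "96x96 float frame");
```

The input buffer grows with the square of the image side, and so do the model's early intermediate tensors, so a modest increase in resolution can be enough to make arena allocation fail.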

I was able to get up to about 55 x 55 (RGB) running the static buffer example deployed as Quantized (int8) with the EON compiler. Any larger and I would get the Failed to allocate TFLite arena error. I took the JPEG decoder resize code for the Arducam from the TensorFlow Lite person detection example to resize the image and load the ‘features[]’ (image_datax[PPIXELS]) array in the loop. I seem to be having some success with this code, so here it is in case it helps anyone. I’m only training on about 600 images. If you see where I am in error, let me know, because C/C++ is not my native language…

BTW: I left the 16-bit RGB565 to 8-bit RGB conversion and the grayscale conversion from the original sketch in, but commented out, in case anyone needs them.

#include <anit-hog_feeder_inference.h>

#include <SPI.h>

#include <Wire.h>

#include <memorysaver.h>

#include <ArduCAM.h>

#include <JPEGDecoder.h>

#include <stdint.h>

#if !(defined OV2640_MINI_2MP_PLUS)

#error Please select the hardware platform and camera module in the Arduino/libraries/ArduCAM/memorysaver.h

#endif

#define MAX_JPEG_BYTES 5000

#define PPIXELS 3025

#define CS 7

#define img_sz 55

ArduCAM myCAM(OV2640, CS);

float image_datax[PPIXELS];

enum imgcaptured

{

not_captured = 0,

captured = 1

};

int raw_feature_get_data(size_t offset, size_t length, float *out_ptr)

{

memcpy(out_ptr, image_datax + offset, length * sizeof(float));

return 0;

}

void setup()

{

// put your setup code here, to run once:

Serial.begin(115200);

// Configure the CS pin

pinMode(CS, OUTPUT);

digitalWrite(CS, HIGH);

Wire.begin();

// initialize SPI

SPI.begin();

// Reset the CPLD

myCAM.write_reg(0x07, 0x80);

delay(100);

myCAM.write_reg(0x07, 0x00);

delay(100);

// Test whether we can communicate with Arducam via SPI

myCAM.write_reg(ARDUCHIP_TEST1, 0x55);

uint8_t test;

test = myCAM.read_reg(ARDUCHIP_TEST1);

if (test != 0x55)

{

Serial.println("Can't communicate with Arducam");

delay(1000);

}

// Use JPEG capture mode, since it allows us to specify

// a resolution smaller than the full sensor frame

myCAM.set_format(JPEG);

myCAM.InitCAM();

// Specify the smallest possible resolution

myCAM.OV2640_set_JPEG_size(OV2640_160x120);

delay(100);

}

void loop()

{

imgcaptured captureimg = capture_resize_image();

if (captureimg == captured)

{

if (sizeof(image_datax) / sizeof(float) != EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE)

{

Serial.println("array size error");

return;

}

ei_impulse_result_t result = {0};

// image_datax lives in RAM; the signal lets the classifier read it in slices

signal_t features_signal;

features_signal.total_length = sizeof(image_datax) / sizeof(image_datax[0]);

features_signal.get_data = &raw_feature_get_data;

// invoke the impulse

EI_IMPULSE_ERROR res = run_classifier(&features_signal, &result, false /* debug */);

if (res != 0)

{

Serial.println("res != 0");

return;

}

bool identifiedd = false;

for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++)

{

float valuee = result.classification[ix].value;

if (valuee > 0.6)

{

if (strcmp(result.classification[ix].label, "hog") == 0)

{

Serial.print("hog - ");

Serial.println(valuee);

identifiedd = true;

}

else

{

Serial.print("not_hog - ");

Serial.println(valuee);

identifiedd = true;

}

}

}

if (!identifiedd)

{

Serial.println("uncertain");

}

#if EI_CLASSIFIER_HAS_ANOMALY == 1

#endif

}

}

imgcaptured capture_resize_image()

{

uint8_t jpeg_buffer[MAX_JPEG_BYTES] = {0};

uint32_t jpeg_length = 0;

myCAM.flush_fifo();

myCAM.clear_fifo_flag();

// Start capture

myCAM.start_capture();

// Wait for indication that it is done

while (!myCAM.get_bit(ARDUCHIP_TRIG, CAP_DONE_MASK))

{

}

Serial.println("Image captured");

delay(50);

// Clear the capture done flag

myCAM.clear_fifo_flag();

jpeg_length = myCAM.read_fifo_length();

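// Serial.println does not expand %d, so print the value separately

Serial.print("Reading ");

Serial.print(jpeg_length);

Serial.println(" bytes from Arducam");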

// Ensure there's not too much data for our buffer

if (jpeg_length > MAX_JPEG_BYTES)

{

Serial.print("Too many bytes in FIFO buffer (");

Serial.print(jpeg_length);

Serial.println(")");

return not_captured;

}

if (jpeg_length == 0)

{

Serial.println("No data in Arducam FIFO buffer");

return not_captured;

}

myCAM.CS_LOW();

myCAM.set_fifo_burst();

for (uint32_t index = 0; index < jpeg_length; index++)

{

jpeg_buffer[index] = SPI.transfer(0x00);

}

delayMicroseconds(15);

Serial.println("Finished reading");

myCAM.CS_HIGH();

JpegDec.decodeArray(jpeg_buffer, jpeg_length);

// Crop the image by keeping a certain number of MCUs in each dimension

const int keep_x_mcus = img_sz / JpegDec.MCUWidth;

const int keep_y_mcus = img_sz / JpegDec.MCUHeight;

// Calculate how many MCUs we will throw away on the x axis

const int skip_x_mcus = JpegDec.MCUSPerRow - keep_x_mcus;

// Roughly center the crop by skipping half the throwaway MCUs at the

// beginning of each row

const int skip_start_x_mcus = skip_x_mcus / 2;

// Index where we will start throwing away MCUs after the data

const int skip_end_x_mcu_index = skip_start_x_mcus + keep_x_mcus;

// Same approach for the columns

const int skip_y_mcus = JpegDec.MCUSPerCol - keep_y_mcus;

const int skip_start_y_mcus = skip_y_mcus / 2;

const int skip_end_y_mcu_index = skip_start_y_mcus + keep_y_mcus;

uint16_t *pImg;

uint16_t color;

int indexx = 0;

// Loop over the MCUs

while (JpegDec.read())

{

// Skip over the initial set of rows

if (JpegDec.MCUy < skip_start_y_mcus)

{

continue;

}

// Skip if we're on a column that we don't want

if (JpegDec.MCUx < skip_start_x_mcus ||

JpegDec.MCUx >= skip_end_x_mcu_index)

{

continue;

}

// Skip if we've got all the rows we want

if (JpegDec.MCUy >= skip_end_y_mcu_index)

{

continue;

}

// Pointer to the current pixel

pImg = JpegDec.pImage;

// The x and y indexes of the current MCU, ignoring the MCUs we skip

int relative_mcu_x = JpegDec.MCUx - skip_start_x_mcus;

int relative_mcu_y = JpegDec.MCUy - skip_start_y_mcus;

// The coordinates of the top left of this MCU when applied to the output

// image

int x_origin = relative_mcu_x * JpegDec.MCUWidth;

int y_origin = relative_mcu_y * JpegDec.MCUHeight;

// Loop through the MCU's rows and columns

for (int mcu_row = 0; mcu_row < JpegDec.MCUHeight; mcu_row++)

{

// The y coordinate of this pixel in the output index

int current_y = y_origin + mcu_row;

for (int mcu_col = 0; mcu_col < JpegDec.MCUWidth; mcu_col++)

{

// Read the color of the pixel as 16-bit integer

color = *pImg++;

//Extract the color values (5 red bits, 6 green, 5 blue)

// uint8_t r, g, b;

// r = ((color & 0xF800) >> 11) * 8;

// g = ((color & 0x07E0) >> 5) * 4;

// b = ((color & 0x001F) >> 0) * 8;

// Convert to grayscale by calculating luminance

// See https://en.wikipedia.org/wiki/Grayscale for magic numbers

//float gray_value = (0.2126 * r) + (0.7152 * g) + (0.0722 * b);

// The x coordinate of this pixel in the output image

int current_x = x_origin + mcu_col;

// The index of this pixel in our flat output buffer

indexx = (current_y * img_sz) + current_x;

// image_data[indexx] = gray_value;

image_datax[indexx] = (float)color;

}

}

}

return captured;

}

That looks really interesting @timlester, I have just got an OV7670 and it looks like it may have similar connections. Any more information about what worked and what did not work for you? How many items were you testing? Are you willing to share your Arduino generated Library? Very impressive what you have done so far.

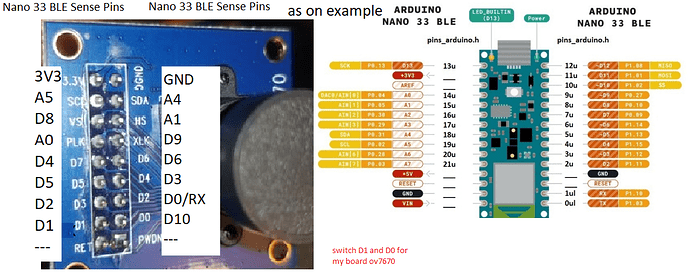

Here is the wiring that is working for me on the OV7670, does it look similar to your setup?

@Rocksetta I ended up training with about 1600 images at 48 x 48 grayscale, representing three different categories. I used the MobileNetV2 0.35 training layer with the Quantized (int8) with EON compiler deployment, and used the pin diagram found in the TensorFlow person detection example. I didn’t use the grayscale conversion commented out in my other post, even though I trained using the grayscale setting, because as best I could tell the Edge Impulse Arduino static_buffer deployment converts to grayscale internally during image processing. I also got better accuracy that way. The core of my code didn’t change much from what I posted as far as image processing goes.

I haven’t had a chance to test in the real world, but I did test by pointing the camera at web images and seemed to get acceptable accuracy. I used the Arducam Host V2 app to help align the camera while testing.
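
For anyone who wants to try the conversion path instead of storing the raw 16-bit value, the commented-out lines in the first sketch could be pulled into helpers roughly like this. It is a sketch only: the struct and function names are hypothetical, and the 0xRRGGBB feature layout is my assumption about what the impulse expects, not something confirmed in this thread:

```cpp
#include <cstdint>

struct Rgb888 {
    uint8_t r, g, b;
};

// Expand a 16-bit RGB565 pixel (5 red bits, 6 green, 5 blue) to 8 bits per
// channel, using the same scaling as the commented-out lines in the sketch.
Rgb888 rgb565_to_rgb888(uint16_t color) {
    Rgb888 px;
    px.r = static_cast<uint8_t>(((color & 0xF800) >> 11) * 8);
    px.g = static_cast<uint8_t>(((color & 0x07E0) >> 5) * 4);
    px.b = static_cast<uint8_t>((color & 0x001F) * 8);
    return px;
}

// Luminance with the same weights as the original grayscale line.
float luminance(const Rgb888 &px) {
    return 0.2126f * px.r + 0.7152f * px.g + 0.0722f * px.b;
}

// Assumption: the impulse takes one float per pixel packed as 0xRRGGBB.
float rgb565_to_feature(uint16_t color) {
    Rgb888 px = rgb565_to_rgb888(color);
    uint32_t packed = (static_cast<uint32_t>(px.r) << 16) |
                      (static_cast<uint32_t>(px.g) << 8) |
                      static_cast<uint32_t>(px.b);
    return static_cast<float>(packed);
}
```

Swapping `rgb565_to_feature(color)` in for the raw cast would be one way to compare accuracy between the two approaches on the same model.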

@Rocksetta I’ve been on other projects but here’s where I got with the code.

#include <anit-hog_feeder_inference.h>

#include <SPI.h>

#include <Wire.h>

#include <memorysaver.h>

#include <ArduCAM.h>

#include <JPEGDecoder.h>

#include <stdint.h>

#define DEBUG //set to DEBUG to turn on the leds and serial prints

#define IMGOUT1 //set to IMGOUT to turn on image output to arducam viewer

#define SERIALINPUT1 //set to SERIALINPUT to turn on sending byte 1 to mimic hog capture to trace open/close events

#if !(defined OV2640_MINI_2MP_PLUS)

#error Please select the hardware platform and camera module in the Arduino/libraries/ArduCAM/memorysaver.h

#endif

#define MAX_JPEG_BYTES 6000

#define PPIXELS 2304

#define img_sz 48

#define R3 3

#define R4 4

#define R5 5

#define R6 6

#define CS 7

#ifdef DEBUG

bool identifiedd = false;

const char *trig[] =

{

"feeder_open",

"first_trigger",

"second_trigger",

"third_trigger",

"feeder_closed",

"trigger_clear"};

#endif

enum imgcaptured

{

not_captured = 0,

captured = 1

};

enum trigger_stage

{

feeder_open = 0,

first_trigger = 1,

second_trigger = 2,

third_trigger = 3,

feeder_closed = 4,

trigger_clear = 5

};

enum error_reporter

{

function_error = 0,

function_succeeded = 1

};

ArduCAM myCAM(OV2640, CS);

float image_datax[PPIXELS];

unsigned long elapsedtime;

trigger_stage current_trigger = feeder_open;

int incomingByte = 0;

int raw_feature_get_data(size_t offset, size_t length, float *out_ptr)

{

memcpy(out_ptr, image_datax + offset, length * sizeof(float));

return 0;

}

void setup()

{

// put your setup code here, to run once:

Serial.begin(115200);

#ifdef DEBUG

pinMode(LEDG, OUTPUT);

pinMode(LEDR, OUTPUT);

pinMode(LEDB, OUTPUT);

digitalWrite(LEDG, HIGH);

digitalWrite(LEDR, HIGH);

digitalWrite(LEDB, HIGH);

#else

digitalWrite(LED_PWR, LOW);

#endif

pinMode(CS, OUTPUT);

pinMode(R3, OUTPUT);

pinMode(R4, OUTPUT);

pinMode(R5, OUTPUT);

pinMode(R6, OUTPUT);

digitalWrite(CS, HIGH);

digitalWrite(R3, HIGH);

digitalWrite(R4, HIGH);

digitalWrite(R5, HIGH);

digitalWrite(R6, HIGH);

Wire.begin();

// initialize SPI

SPI.begin();

// Reset the CPLD

myCAM.write_reg(0x07, 0x80);

delay(100);

myCAM.write_reg(0x07, 0x00);

delay(100);

// Test whether we can communicate with Arducam via SPI

myCAM.write_reg(ARDUCHIP_TEST1, 0x55);

uint8_t test;

test = myCAM.read_reg(ARDUCHIP_TEST1);

if (test != 0x55)

{

//Serial.println("Can't communicate with Arducam");

delay(1000);

}

// Use JPEG capture mode, since it allows us to specify

// a resolution smaller than the full sensor frame

myCAM.set_format(JPEG);

myCAM.InitCAM();

// Specify the smallest possible resolution

myCAM.OV2640_set_JPEG_size(OV2640_160x120);

delay(100);

}

void loop()

{

#ifdef SERIALINPUT

int incomingByte = 0;

if (Serial.available() > 0)

{

incomingByte = Serial.read();

#endif

if (current_trigger == trigger_clear)

{

if (millis() - elapsedtime > 1200000) //open feeder if no hog pic within 20 minutes

{

error_reporter openedfeeder = open_feeder();

if (openedfeeder == function_succeeded)

{

current_trigger = feeder_open;

}

#ifdef DEBUG

Serial.println("hogs gone");

#endif

}

}

else if (current_trigger != feeder_open && current_trigger != feeder_closed)

{

if (millis() - elapsedtime > 300000) //capture three images within five minute threshold

{

current_trigger = feeder_open;

#ifdef DEBUG

Serial.println("five minutes passed");

#endif

}

}

imgcaptured captureimg = capture_resize_image();

if (captureimg == captured)

{

if (sizeof(image_datax) / sizeof(float) != EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE)

{

#ifdef DEBUG

Serial.println("array size error");

#endif

return;

}

ei_impulse_result_t result = {0};

// image_datax lives in RAM; the signal lets the classifier read it in slices

signal_t features_signal;

features_signal.total_length = sizeof(image_datax) / sizeof(image_datax[0]);

features_signal.get_data = &raw_feature_get_data;

// invoke the impulse

EI_IMPULSE_ERROR res = run_classifier(&features_signal, &result, false /* debug */);

if (res != 0)

{

#ifdef DEBUG

Serial.println("res != 0");

#endif

return;

}

for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++)

{

float valuee = result.classification[ix].value;

if (valuee > 0.8)

{

#ifdef SERIALINPUT

if (strcmp(result.classification[ix].label, "hog") == 0 || incomingByte == '1')

#else

if (strcmp(result.classification[ix].label, "hog") == 0)

#endif

{

trigger_stage ctrigger = current_trigger;

switch (ctrigger)

{

case feeder_open:

{

current_trigger = first_trigger;

elapsedtime = millis();

}

break;

case first_trigger:

{

current_trigger = second_trigger;

}

break;

case second_trigger:

{

current_trigger = third_trigger;

}

break;

case third_trigger:

{

error_reporter closedfeeder = close_feeder();

if (closedfeeder == function_succeeded)

{

current_trigger = feeder_closed;

}

}

break;

case feeder_closed:

break;

case trigger_clear:

{

current_trigger = feeder_closed;

}

break;

default:

break;

}

#ifdef DEBUG

identifiedd = true;

Serial.print("hog - ");

Serial.println(valuee);

digitalWrite(LEDR, HIGH);

digitalWrite(LEDB, HIGH);

digitalWrite(LEDG, LOW);

delay(2000);

digitalWrite(LEDG, HIGH);

#endif

}

else if (strcmp(result.classification[ix].label, "deer") == 0)

{

if (current_trigger == feeder_closed)

{

current_trigger = trigger_clear;

elapsedtime = millis();

}

#ifdef DEBUG

identifiedd = true;

Serial.print("deer - ");

Serial.println(valuee);

digitalWrite(LEDG, HIGH);

digitalWrite(LEDR, HIGH);

digitalWrite(LEDB, LOW);

delay(2000);

digitalWrite(LEDB, HIGH);

#endif

}

else

{

if (current_trigger == feeder_closed)

{

current_trigger = trigger_clear;

elapsedtime = millis();

}

#ifdef DEBUG

identifiedd = true;

Serial.print("not_either - ");

Serial.println(valuee);

digitalWrite(LEDG, HIGH);

digitalWrite(LEDB, HIGH);

digitalWrite(LEDR, LOW);

delay(2000);

digitalWrite(LEDR, HIGH);

#endif

}

}

}

#ifdef DEBUG

if (!identifiedd)

{

Serial.println("uncertain");

}

identifiedd = false;

Serial.print("Trigger Stage: ");

Serial.print(trig[current_trigger]);

Serial.print(" - Input: ");

Serial.print(incomingByte, HEX);

Serial.print(" - Elapsed Time: ");

Serial.print(((millis() - elapsedtime) / 1000) / 60.0);

Serial.println(" Minutes");

#endif

delay(10000);

}

#ifdef SERIALINPUT

}

#endif

}

imgcaptured capture_resize_image()

{

uint8_t jpeg_buffer[MAX_JPEG_BYTES] = {0};

uint32_t jpeg_length = 0;

myCAM.flush_fifo();

myCAM.clear_fifo_flag();

// Start capture

myCAM.start_capture();

// Wait for indication that it is done

while (!myCAM.get_bit(ARDUCHIP_TRIG, CAP_DONE_MASK))

{

}

//Serial.println("Image captured");

delay(50);

// Clear the capture done flag

myCAM.clear_fifo_flag();

jpeg_length = myCAM.read_fifo_length();

//Serial.println("Reading %d bytes from Arducam");

// Ensure there's not too much data for our buffer

if (jpeg_length > MAX_JPEG_BYTES)

{

#ifdef DEBUG

Serial.print("Too many bytes in FIFO buffer (");

Serial.print(jpeg_length);

Serial.println(")");

#endif

return not_captured;

}

if (jpeg_length == 0)

{

#ifdef DEBUG

Serial.println("No data in Arducam FIFO buffer");

#endif

return not_captured;

}

myCAM.CS_LOW();

myCAM.set_fifo_burst();

uint8_t temp;

for (uint32_t index = 0; index < jpeg_length; index++)

{

temp = SPI.transfer(0x00);

jpeg_buffer[index] = temp;

#ifdef IMGOUT

Serial.write(temp);

#endif

}

#ifdef IMGOUT

Serial.println("");

#endif

delayMicroseconds(15);

//Serial.println("Finished reading");

myCAM.CS_HIGH();

JpegDec.decodeArray(jpeg_buffer, jpeg_length);

// Crop the image by keeping a certain number of MCUs in each dimension

const int keep_x_mcus = img_sz / JpegDec.MCUWidth;

const int keep_y_mcus = img_sz / JpegDec.MCUHeight;

// Calculate how many MCUs we will throw away on the x axis

const int skip_x_mcus = JpegDec.MCUSPerRow - keep_x_mcus;

// Roughly center the crop by skipping half the throwaway MCUs at the

// beginning of each row

const int skip_start_x_mcus = skip_x_mcus / 2;

// Index where we will start throwing away MCUs after the data

const int skip_end_x_mcu_index = skip_start_x_mcus + keep_x_mcus;

// Same approach for the columns

const int skip_y_mcus = JpegDec.MCUSPerCol - keep_y_mcus;

const int skip_start_y_mcus = skip_y_mcus / 2;

const int skip_end_y_mcu_index = skip_start_y_mcus + keep_y_mcus;

uint16_t *pImg;

uint16_t color;

int indexx = 0;

// Loop over the MCUs

while (JpegDec.read())

{

// Skip over the initial set of rows

if (JpegDec.MCUy < skip_start_y_mcus)

{

continue;

}

// Skip if we're on a column that we don't want

if (JpegDec.MCUx < skip_start_x_mcus ||

JpegDec.MCUx >= skip_end_x_mcu_index)

{

continue;

}

// Skip if we've got all the rows we want

if (JpegDec.MCUy >= skip_end_y_mcu_index)

{

continue;

}

// Pointer to the current pixel

pImg = JpegDec.pImage;

// The x and y indexes of the current MCU, ignoring the MCUs we skip

int relative_mcu_x = JpegDec.MCUx - skip_start_x_mcus;

int relative_mcu_y = JpegDec.MCUy - skip_start_y_mcus;

// The coordinates of the top left of this MCU when applied to the output

// image

int x_origin = relative_mcu_x * JpegDec.MCUWidth;

int y_origin = relative_mcu_y * JpegDec.MCUHeight;

// Loop through the MCU's rows and columns

for (int mcu_row = 0; mcu_row < JpegDec.MCUHeight; mcu_row++)

{

// The y coordinate of this pixel in the output index

int current_y = y_origin + mcu_row;

for (int mcu_col = 0; mcu_col < JpegDec.MCUWidth; mcu_col++)

{

// Read the color of the pixel as 16-bit integer

color = *pImg++;

// The x coordinate of this pixel in the output image

int current_x = x_origin + mcu_col;

// The index of this pixel in our flat output buffer

indexx = (current_y * img_sz) + current_x;

image_datax[indexx] = static_cast<float>(color);

}

}

}

delay(2000);

return captured;

}

error_reporter close_feeder()

{

digitalWrite(R3, HIGH);

digitalWrite(R4, HIGH);

digitalWrite(R5, LOW);

digitalWrite(R6, LOW);

delay(10000);

digitalWrite(R5, HIGH);

digitalWrite(R6, HIGH);

#ifdef DEBUG

Serial.println("feeder closed");

#endif

return function_succeeded;

}

error_reporter open_feeder()

{

digitalWrite(R3, LOW);

digitalWrite(R4, LOW);

digitalWrite(R5, HIGH);

digitalWrite(R6, HIGH);

delay(10000);

digitalWrite(R3, HIGH);

digitalWrite(R4, HIGH);

#ifdef DEBUG

Serial.println("feeder open");

#endif

return function_succeeded;

}

Wow, thanks @timlester, your communication with the OV2640 is much more low level than I have been doing with the OV7670, should be very handy to have this to look at. I know what you mean about having other projects on the go.

@Rocksetta most of the OV2640 code simply resizes the image to match the size you trained your model at. Setting img_sz to the training size (48 in my case) and PPIXELS to the square of img_sz (48 x 48 = 2304) sets the parameters that the capture_resize_image() function uses to produce an image matching the EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE input needed by the impulse. I borrowed the resize code directly from the TensorFlow person detection example I posted a link to earlier, with only minor edits to use the 16-bit color pixel output rather than grayscale. Good luck with your project.
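
One detail worth knowing about that code: it keeps whole MCUs from the center of the 160x120 frame, so the output size is rounded down to a multiple of the MCU size. The arithmetic can be sketched on its own like this. `CropPlan` and `plan_crop` are hypothetical names, and the MCU size of 8 or 16 is an assumption that depends on how the camera encodes the JPEG:

```cpp
// Size of the centered crop produced when only whole MCUs are kept.
// mcu_size is the MCU width or height reported by the decoder
// (assumed to be 8 or 16 here; check what your decoder reports).
struct CropPlan {
    int keep_mcus;       // MCUs kept along this axis
    int skip_start;      // MCUs skipped before the crop starts
    int cropped_pixels;  // pixels actually written along this axis
};

CropPlan plan_crop(int img_sz, int mcu_size, int mcus_per_axis) {
    CropPlan plan;
    plan.keep_mcus = img_sz / mcu_size;  // integer division rounds down
    plan.skip_start = (mcus_per_axis - plan.keep_mcus) / 2;
    plan.cropped_pixels = plan.keep_mcus * mcu_size;
    return plan;
}
```

With 16-pixel MCUs and 10 MCUs per row, an img_sz of 48 fills every column, while an img_sz of 55 still writes only 48 pixels per row and leaves the remaining columns of the buffer untouched. Choosing a training size that is a multiple of the MCU size avoids that gap.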