For my model, the confusion matrix shows much better accuracy with 'Unoptimized (float32)' than with 'Quantized (int8)'. The tooltip says the type can be chosen during deployment. However, this choice is not available if you select OpenMV as the deployment target.

Is there a way of choosing the optimization type when using OpenMV? Thanks.


Hi @Icarus,

The OpenMV framework only supports quantized models at the moment.

To increase the accuracy, you can try adding more images or increasing the learning rate.

Aurelien

@Icarus there is a workaround, but the float32 model takes a lot more RAM, so I doubt it will work.
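To put "a lot more RAM" in numbers: float32 stores each weight in 4 bytes and int8 in 1 byte, so the weights alone take roughly 4x the space. Below is a minimal sketch of that arithmetic. It assumes a hypothetical 250,000-parameter model, so substitute your own count. It also ignores the int32 biases and quantization parameters an int8 model keeps, and the larger activation buffers a float32 model needs at runtime.

```python
# Rough weight-storage estimate for the same network stored as float32 vs int8.
# Assumption: the parameter count in the demo is hypothetical. This counts
# weights only; int32 biases, quantization parameters and activation
# buffers (tensor arena) are not included.

BYTES_PER_PARAM = {"float32": 4, "int8": 1}


def weight_bytes(num_params: int, dtype: str) -> int:
    """Return the bytes needed to store num_params weights of the given dtype."""
    return num_params * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    params = 250_000  # hypothetical parameter count
    f32 = weight_bytes(params, "float32")
    i8 = weight_bytes(params, "int8")
    print(f"float32: {f32 / 1024:.0f} KiB, int8: {i8 / 1024:.0f} KiB, ratio {f32 // i8}x")
```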

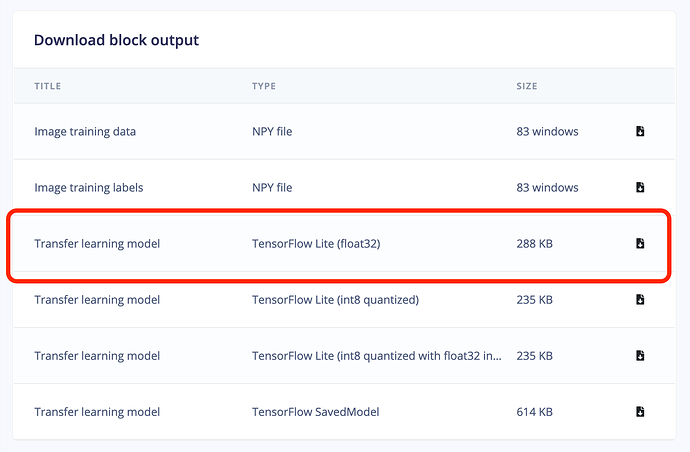

Go to Dashboard, and download the float32 TFLite model:

Then rename it to `trained.tflite` and drag it onto your OpenMV camera's drive.
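If you try this, one way to guard against running out of heap is to compare the model's file size with the free heap before loading it. OpenMV's `tf.load()` accepts a `load_to_fb` flag that places the model in frame-buffer memory instead of the heap. Below is a minimal sketch of that decision. The function name `should_load_to_fb` and the 64 KiB safety margin are my own hypothetical choices, not OpenMV requirements.

```python
# Hypothetical pre-flight check: would loading trained.tflite into the heap
# leave less than `reserve` bytes free? If so, load it into the frame buffer.
# The 64 KiB reserve is an assumed safety margin, not an OpenMV requirement.
# No type annotations or f-strings, so it also runs on MicroPython.

DEFAULT_RESERVE = 64 * 1024


def should_load_to_fb(model_size, free_heap, reserve=DEFAULT_RESERVE):
    """Return True when the model would leave less than `reserve` bytes of heap free."""
    return model_size > free_heap - reserve


if __name__ == "__main__":
    # Hypothetical numbers: a 1,000,000-byte model and 300 KiB of free heap.
    print(should_load_to_fb(1000000, 300 * 1024))
```

On the camera you would pass `os.stat("trained.tflite")[6]` (the file size in MicroPython) and `gc.mem_free()` as the two arguments, then use the result for `load_to_fb`. This assumes your firmware still provides the `tf` module, so check the docs for your version.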

Thank you, I'll try that. I have the H7 Plus, so it should be fine.

Please report back with your findings. I've been wondering the same thing.