Hello, I am working with a Sony Spresense board. I have trained an object detection (bounding box) model and deployed it using the firmware, which worked. However, I want to see the results without my laptop. More precisely, I want to see them on an IoT platform (Blynk, for example), but I do not know how to approach this. I cannot change the firmware zip file. I want to capture images in real time and post the results to the IoT platform.

First of all, though, I am not able to get the raw features from the camera. Can you suggest how to do this?

I am trying to use the static_buffer example from the Arduino IDE. Is it okay to use this?

If it is okay, how do I pass the real-time image into this code?

Hoping for a response

I assume you are using edge-impulse-run-impulse to see the results on your PC. After you use flash_windows.bat the Spresense will boot up and start streaming results over its serial port.

You can hook up any device to the Spresense serial port, then read and parse the results as needed, so you do not need a PC to see the results.
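As a rough sketch of the parsing side, the function below pulls a label and score out of one serial line. It assumes each result line looks like `    person: 0.93750`; check what your firmware actually prints, since that format is an assumption here. `LabelScore` and `parseResultLine` are made-up names for illustration, not part of any Edge Impulse or Sony API.

```cpp
#include <cctype>
#include <cstddef>
#include <cstdlib>
#include <string>

// One parsed result line: a class label and its score.
struct LabelScore {
    std::string label;
    float value;
};

// Parse a line shaped like "    person: 0.93750" (assumed format).
// Returns false, leaving `out` untouched, for lines that do not match.
bool parseResultLine(const std::string& line, LabelScore& out) {
    const std::size_t colon = line.rfind(':');
    if (colon == std::string::npos) return false;

    // The label is the text before the last colon, with whitespace trimmed.
    const std::size_t first = line.find_first_not_of(" \t");
    if (first == std::string::npos || first >= colon) return false;
    const std::size_t last = line.find_last_not_of(" \t", colon - 1);

    // The score is the text after the colon; reject it if no number is found.
    const std::string number = line.substr(colon + 1);
    char* end = nullptr;
    const float value = std::strtof(number.c_str(), &end);
    if (end == number.c_str()) return false;

    // Only whitespace (including a trailing '\r') may follow the number,
    // so timing lines such as "...: 3 ms.)" are skipped.
    while (*end != '\0') {
        if (!std::isspace(static_cast<unsigned char>(*end))) return false;
        ++end;
    }

    out.label = line.substr(first, last - first + 1);
    out.value = value;
    return true;
}
```

The receiving device would call `parseResultLine()` on every line it reads from the serial port and forward only the lines for which it returns true.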

To get a full understanding of how to use the Sony Spresense with Edge Impulse you should start here with the Edge Impulse tutorial.

Then move onto this example that is a modified static_buffer that does FOMO. You can modify this program to output serial data or send a message to an LCD in the Arduino loop() by taking action on result.classification[ix].value.
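To illustrate "taking action" on the values, here is a minimal sketch that picks the best class above a threshold. `ClassScore` is a hypothetical stand-in for one entry of `result.classification[]` (the real type comes from the Edge Impulse SDK), and `bestClassAbove` is a made-up helper name.

```cpp
#include <cstddef>

// Hypothetical stand-in for one entry of result.classification[].
struct ClassScore {
    const char* label;
    float value;
};

// Return the index of the highest-scoring class whose value is at least
// `threshold`, or -1 if no class reaches the threshold.
int bestClassAbove(const ClassScore* scores, std::size_t count, float threshold) {
    int best = -1;
    for (std::size_t ix = 0; ix < count; ++ix) {
        if (scores[ix].value < threshold) continue;
        if (best < 0 || scores[ix].value > scores[best].value) {
            best = static_cast<int>(ix);
        }
    }
    return best;
}
```

In `loop()` you would then print to serial or update the LCD only when the returned index is not -1.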

I have an Arduino Spresense example here that reads the Spresense raw camera data using the Sony camera library and does FOMO. The work of reading the camera is done in the function CamCB(). Before calling CamCB() you will need to configure the camera (see the pertinent code in setup()). This program does more than what you are asking for, so I would rewrite it, taking ONLY the parts you need to accomplish your task.
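For the "raw features" part, a sketch of the pixel conversion may help. It assumes the camera frame is RGB565 stored as one 16-bit value per pixel, and that the model takes one float per pixel holding 0xRRGGBB (the layout you see when copying raw image features from the Studio). Both are assumptions to verify against your own setup, and `rgb565ToRgb888` and `fillFeatures` are made-up names.

```cpp
#include <cstddef>
#include <cstdint>

// Expand one RGB565 pixel to a packed 0xRRGGBB value.
std::uint32_t rgb565ToRgb888(std::uint16_t px) {
    const std::uint32_t r5 = (px >> 11) & 0x1F;
    const std::uint32_t g6 = (px >> 5) & 0x3F;
    const std::uint32_t b5 = px & 0x1F;
    // Replicate the high bits into the low bits so full scale maps to 255.
    const std::uint32_t r = (r5 << 3) | (r5 >> 2);
    const std::uint32_t g = (g6 << 2) | (g6 >> 4);
    const std::uint32_t b = (b5 << 3) | (b5 >> 2);
    return (r << 16) | (g << 8) | b;
}

// Fill `out` with `length` features starting at pixel index `offset`.
// Shaped like a signal get_data callback: returns 0 on success.
int fillFeatures(const std::uint16_t* frame, std::size_t offset,
                 std::size_t length, float* out) {
    for (std::size_t i = 0; i < length; ++i) {
        out[i] = static_cast<float>(rgb565ToRgb888(frame[offset + i]));
    }
    return 0;
}
```

If the bytes in the camera buffer turn out to be swapped, swap them before calling `rgb565ToRgb888()`; wrong colours in the features are a common reason for results that differ from Live Classification.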

Thank you for your help. While trying different things, though, I ran into something weird.

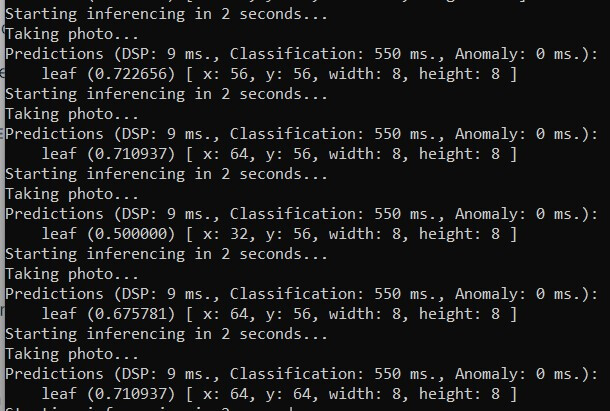

I trained a new model, deployed it as firmware, and tested it with the command “edge-impulse-run-impulse”. That gives the correct result.

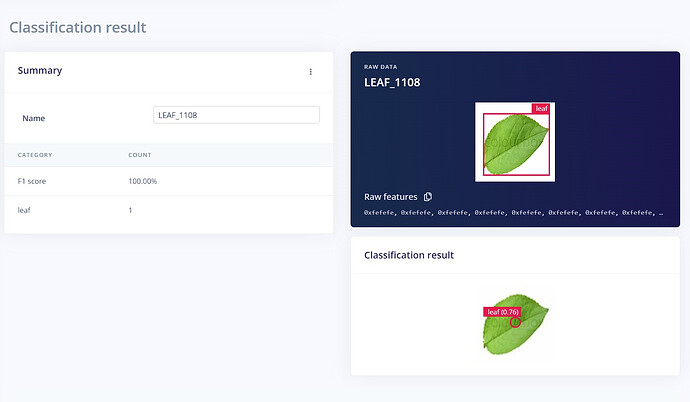

Then I tried running it on a test image, so I chose this image. The Live classification result for this image is correct on the web.

But when I tried the same thing with the static buffer (after copying the raw features of the same image), I got a different result. I suspect something is going wrong; maybe it is not able to call the classifier. Even stranger, no matter which image I try to classify using the static buffer, I get the same result as shown in the picture.

Live Classification uses the float32 model. Are you running float32 or int8 on the Spresense?

Thank you for your help. I was using the int8 version on the Spresense. I then changed it to float32, but it was not able to load the model. Then I tried decreasing the image size (from the camera), and it worked!

Now I want to ask why the int8 version gives very bad results, and how to resolve it. If I train a new model that is too large for the Spresense’s memory, can’t I convert it to int8 and deploy it without getting weird results every time?
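For background on why int8 and float32 can disagree, here is a minimal sketch of the usual affine int8 scheme, where real = scale * (q - zeroPoint). The scale and zero point in the note below are picked only for illustration; a real model stores its own values per tensor. This shows the general rounding effect and is not a diagnosis of this particular model.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Map a real value to int8: divide by scale, round, shift, clamp to [-128, 127].
std::int8_t quantize(float real, float scale, int zeroPoint) {
    assert(scale > 0.0f);
    long q = std::lround(real / scale) + zeroPoint;
    if (q < -128) q = -128;
    if (q > 127) q = 127;
    return static_cast<std::int8_t>(q);
}

// Map an int8 value back to a real value.
float dequantize(std::int8_t q, float scale, int zeroPoint) {
    return scale * static_cast<float>(static_cast<int>(q) - zeroPoint);
}
```

With a scale of 1/256 and a zero point of -128, for example, 0.5 and 0.501 both quantize to the same int8 value, and anything above the representable range is clamped. Small differences that float32 keeps are lost, which is one reason an int8 model can score differently from the float32 model used by Live Classification.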

I see no direct path forward for you. Put on your Data Scientist cap…Adapt, Improvise and Overcome

Choose the correct model for your mission:

Check @louis’s response here as to whether FOMO or image classification is the correct approach for your mission.

In that same thread @GilA1 does a thorough job of evaluating different settings and trials. This is exactly what a Data Engineer does and the path you are about to take.

This documentation is always tough because you start to follow the data results and can easily weave a web of confusion.

Fine Tune Model:

Try EON and increasing epochs. EON is explained here.

This is a good thread on FOMO improvements.

This is a good thread on improving Model performance.

Hi @Deepak876

I’m investigating the issue you are experiencing when running an object detection model deployed as an Arduino library with the Sony Spresense sketch made by @MMarcial.

regards,

fv

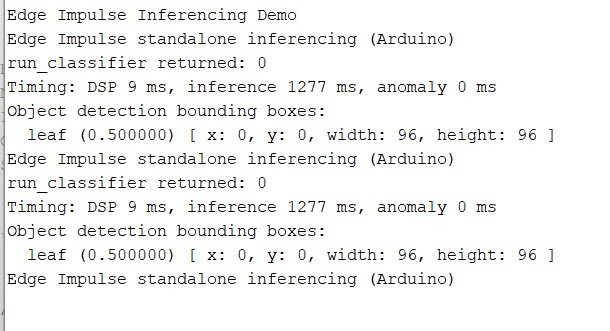

I’m testing the sketch from @MMarcial and I get the same result as @Deepak876: classification always reports 0.5 on the whole image. The same happens with the static_buffer sketch.

As you reported, the same model works fine if deployed as a C++ library or as a Spresense binary. I’m investigating the issue.

A bit late, but we have added sketches for the Spresense to the Arduino library deployment.