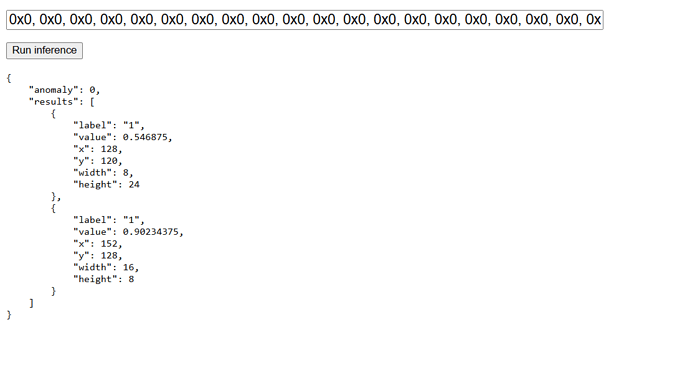

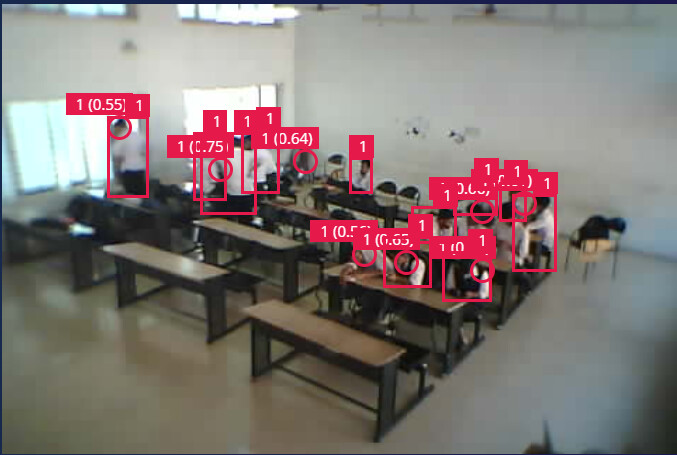

I am using FOMO 0.35 to detect human presence. I don’t need exact bounding boxes; I am happy to get the centroid, as mentioned in the docs. But when I load the model with the tflite Interpreter() and run inference with invoke(), result[0][0][0] is a list of size 6.

It says the fields are x, y, w, h, label, conf, but I couldn’t identify the position of these values in the list.

I would like to know the index of each of these fields in the returned record.

For example, I want to find out whether conf is at list[0] or list[5].

I have logic to implement on top of the detection result.

Hoping for a reply.

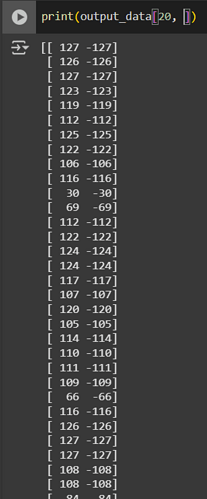

interpreter.invoke()

output_data = interpreter.get_tensor(output_details[0]['index'])

print("Output:", output_data)

Input shape: [ 1 480 320 3]

Output: [[[[[154 145 147 158 28 232]

[149 150 150 157 37 230]

[148 154 151 158 19 229]

…

[149 151 147 147 35 220]

[148 148 145 146 41 221]

[150 146 143 149 46 228]]

[[159 136 146 163 27 233]

[151 137 151 161 39 230]

[149 146 155 164 18 230]

…

[147 152 148 155 38 220]

[146 149 145 154 37 220]

[149 146 141 157 51 230]]

This is the list returned by the model.
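All the values in the dump above fall in the 0..255 range, which would be consistent with a quantized uint8 output tensor rather than final field values. That is only an assumption here. A minimal sketch of how the raw numbers could be converted back to real values, using the `quantization` entry (scale, zero point) that `interpreter.get_output_details()` reports; the `example_details` dict and its scale of 1/256 are hypothetical stand-ins, not values read from this model:

```python
def dequantize(raw_values, scale, zero_point):
    """Map quantized integers to real values: real = scale * (q - zero_point)."""
    if scale == 0:
        # TFLite reports (0.0, 0) when the tensor is not quantized.
        return [float(q) for q in raw_values]
    return [scale * (q - zero_point) for q in raw_values]


# Hypothetical stand-in for output_details[0]; in a real script this would be
#   output_details = interpreter.get_output_details()
#   print(output_details[0]['shape'], output_details[0]['dtype'])
#   scale, zero_point = output_details[0]['quantization']
example_details = {"quantization": (0.00390625, 0)}  # assumed scale of 1/256

scale, zero_point = example_details["quantization"]
row = [154, 145, 147, 158, 28, 232]  # first record from the dump above
real = dequantize(row, scale, zero_point)
print(real)
```

Printing the real `shape` and `dtype` of the output tensor alongside this would also show whether the last dimension of size 6 matches the expected record layout.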

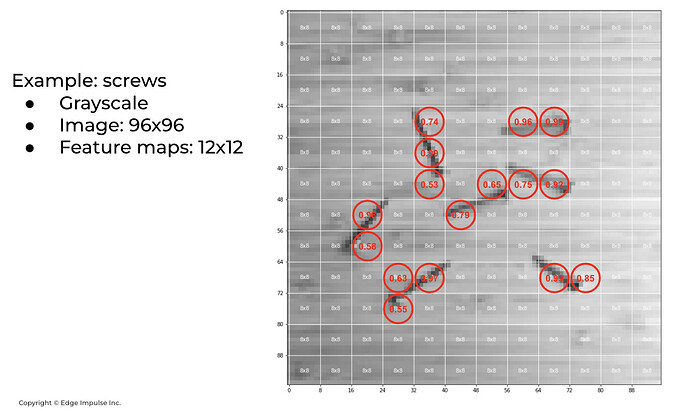

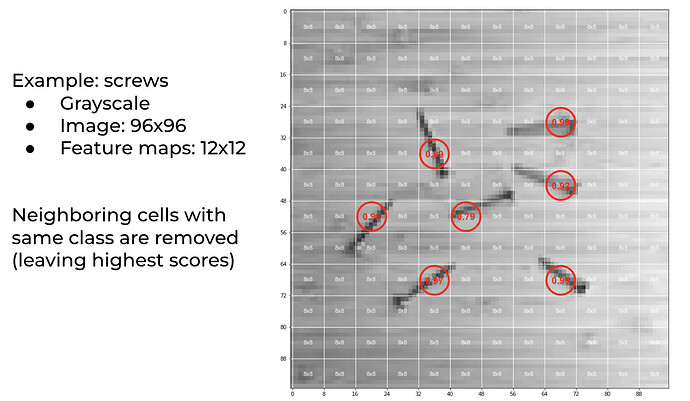

My model takes 320x320x1 images and returns a 40x40 grid with the confidence of an object in each cell.
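With a 320x320 input and a 40x40 grid, each cell covers 8x8 input pixels, so a centroid can be read off as the centre of any cell whose confidence is high enough. A minimal sketch of that mapping, assuming the grid has already been turned into a plain 40x40 list of confidences in the 0..1 range; the function name `grid_to_centroids` and the 0.5 threshold are illustrative choices, not part of any library:

```python
def grid_to_centroids(grid, input_size=320, threshold=0.5):
    """Return (x, y, confidence) in input-pixel coordinates for each cell
    whose confidence is at or above the threshold."""
    rows = len(grid)
    cols = len(grid[0])
    cell_h = input_size / rows  # 320 / 40 = 8 pixels per cell
    cell_w = input_size / cols
    centroids = []
    for r, row in enumerate(grid):
        for c, conf in enumerate(row):
            if conf >= threshold:
                # Centre of cell (r, c) in input-image pixels.
                centroids.append(((c + 0.5) * cell_w, (r + 0.5) * cell_h, conf))
    return centroids


# Synthetic 40x40 grid with a single confident cell at row 10, column 20.
grid = [[0.0] * 40 for _ in range(40)]
grid[10][20] = 0.9
print(grid_to_centroids(grid))  # [(164.0, 84.0, 0.9)]
```

Neighbouring cells that fire for the same person would show up as separate entries here; merging them is left out of this sketch.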

I found that label is an element of this list, but I have only one label, for the human class, which is “1”.

I don’t see any “1” here, only many other values that make no sense to me.

Please provide the layout of the returned data structure, i.e. which index holds which field.