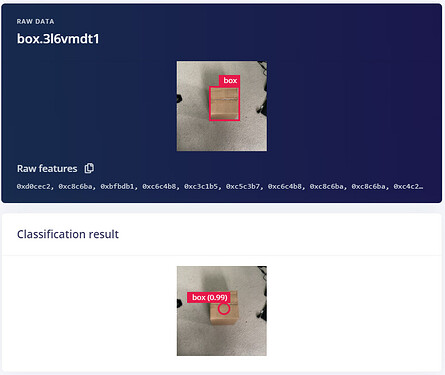

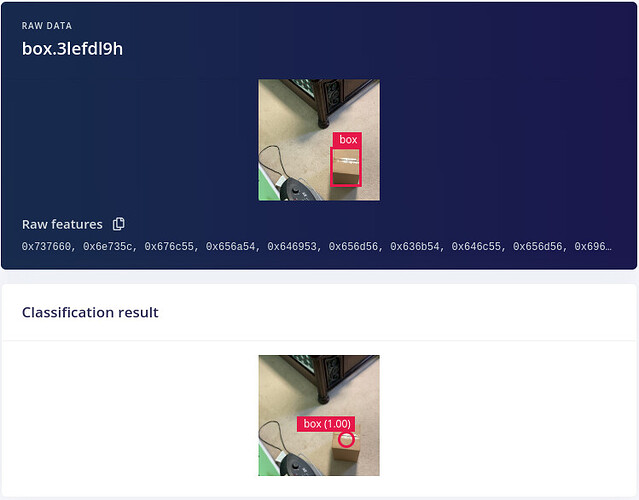

I’m using the FOMO object detection model to predict where cardboard boxes are in a video frame. In Edge Impulse I’m getting an F1 score of ~90%, and example classification results look good, with centroids predicted near the center of most boxes.

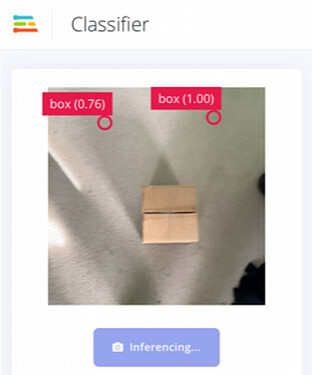

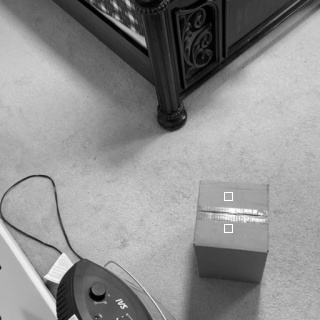

However, when I deploy it on my RPi 4 or my phone, the model detects the object but completely misses its centroid. See image for reference:

Can anyone with FOMO/object detection experience help me figure out why the predicted centroids are so far off? For reference, the project ID is 172365. Thanks in advance for your advice.

Hi @dbouzolin

I just had a look at your project, and your dataset does not look very good to me. Here are a few pieces of advice:

- Collect images of the boxes as they will appear at inference time.

- Add more images to the dataset; aim for at least 500 to 1,000.

- Diversify where the box is located in the images.

- Try to collect images of the background alone.

This should improve your model’s performance and accuracy; your project is currently at about 75%.

Thanks for your help @OmarShrit. I added some more images, including background-only ones, but not up to 500 as I’m limited by the 20-minute training time. I was able to raise the model accuracy to ~87%, but I still see weird behavior when I deploy the model. Here is what an example classification looks like on the Edge Impulse website.

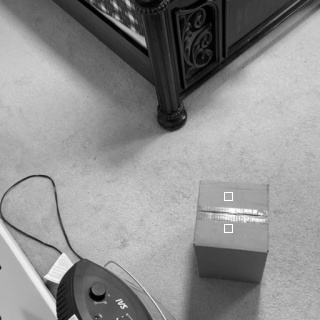

When I deploy the very same model onto my laptop (Linux x86) and run the classify_image example using the .eim file, I get a completely different result:

Somehow, running the deployed model gives me (x, y) coordinates that are off by about a factor of 2 in the x-direction and a factor of 4 in the y-direction. I modified the code to correct for these factors, and now the predictions line up with my box pretty consistently (I even tried it with the live classify example using my webcam).
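The workaround of correcting for these factors can be sketched as a post-processing step on the deployed model’s output. This is a minimal sketch, assuming each detection is a dict with pixel-valued `x`, `y` (top-left corner), `width` and `height` keys; `rescale_boxes` is a hypothetical helper, and the factors 2 and 4 are only the empirical values observed here. It scales each box’s centroid and keeps its size, so it hides the offset rather than fixing its cause.

```python
from typing import Dict, List

Box = Dict[str, float]


def rescale_boxes(boxes: List[Box], fx: float = 2.0, fy: float = 4.0) -> List[Box]:
    """Scale each box's centroid by (fx, fy), keeping its width and height.

    Hypothetical stopgap: assumes boxes are dicts with pixel-valued
    "x", "y" (top-left corner), "width" and "height" keys.
    """
    corrected = []
    for box in boxes:
        # Centroid as reported by the deployed model.
        cx = box["x"] + box["width"] / 2
        cy = box["y"] + box["height"] / 2
        new_box = dict(box)  # copy so the caller's boxes are not mutated
        # Move the top-left corner so the box is centered on the scaled centroid.
        new_box["x"] = cx * fx - box["width"] / 2
        new_box["y"] = cy * fy - box["height"] / 2
        corrected.append(new_box)
    return corrected


if __name__ == "__main__":
    reported = [{"label": "box", "value": 0.9, "x": 10, "y": 5, "width": 8, "height": 8}]
    print(rescale_boxes(reported))
```

Because the factors are hard-coded, this only holds for the specific model and input size where they were measured.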

I know that adding more data will help me detect the objects better, but it seems strange to see the bounding boxes consistently off by such a large factor during deployment yet hardly at all during testing (even with the exact same image). Could it be some kind of bug?

Could you try using edge-impulse-linux-runner? You will see the results in the terminal, and you can also view them in Firefox through its web server.

The results should be correct with the runner. Would you give it a try and let me know?

Sure, here I’ve uploaded the output of the edge-impulse-linux-runner command: Edge Impulse Box Detection — webmshare

Again, it looks like the deployed model detects the presence of boxes but with incorrect centroids, biased toward the top corners of the screen.

Maybe retraining a model from a fresh project would help?

@OmarShrit I figured out the issue:

Under Impulse design > Object detection > Neural Network settings > Switch to Keras (expert) mode, I had edited line 42, which sets the cut point of the MobileNet model:

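```python
#! Cut MobileNet where it hits 1/8th input resolution; i.e. (HW/8, HW/8, C)
# Note: default was 'block_6_expand_relu' (1/8); changed to 'block_13_expand_relu' (1/16)
cut_point = mobile_net_v2.get_layer('block_13_expand_relu')
```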

By default, the cut point was set to block_6_expand_relu, but I modified it to cut later, at block 13 (1/16 input resolution instead of 1/8). Centroids were placed correctly at 1/8 resolution and only started to deviate when I switched to 1/16.

Is there a better way to change the cut point resolution?
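One hypothesis that would be consistent with the ~2x (x) and ~4x (y) offsets is that the deployed post-processing still decodes the heatmap as a 1/8 grid while the model now emits a 1/16 grid. The simulation below is a minimal sketch under assumptions that are not confirmed as Edge Impulse’s actual implementation: a 96x96 input, a row-major flattened heatmap, and each cell’s centroid placed at the cell center. `cell_centroid` and `decode_mismatch` are hypothetical helpers.

```python
INPUT_SIZE = 96  # hypothetical square input resolution


def cell_centroid(index: int, grid_width: int, stride: int) -> tuple:
    """Map a flat (row-major) heatmap index to a pixel centroid (x, y).

    Assumes the centroid sits at the center of its grid cell.
    """
    row, col = divmod(index, grid_width)
    return ((col + 0.5) * stride, (row + 0.5) * stride)


def decode_mismatch(row: int, col: int, true_stride: int = 16,
                    assumed_stride: int = 8, input_size: int = INPUT_SIZE) -> tuple:
    """Return (true centroid, centroid a decoder assuming assumed_stride would report)."""
    true_w = input_size // true_stride        # 6 cells wide for a 1/16 cut
    assumed_w = input_size // assumed_stride  # 12 cells wide for a 1/8 cut
    flat_index = row * true_w + col           # where the model writes this cell
    true_xy = cell_centroid(flat_index, true_w, true_stride)
    reported_xy = cell_centroid(flat_index, assumed_w, assumed_stride)
    return true_xy, reported_xy


if __name__ == "__main__":
    for row, col in [(2, 2), (4, 4)]:
        true_xy, reported_xy = decode_mismatch(row, col)
        # cell (2,2): true (40.0, 40.0), reported (20.0, 12.0)
        # cell (4,4): true (72.0, 72.0), reported (36.0, 20.0)
        print(f"cell ({row},{col}): true {true_xy}, reported {reported_xy}")
```

Under these assumptions, an index written for a 6-wide grid is read back as a 12-wide grid, so reported y shrinks by roughly 4 and x by 2 on even rows, while odd rows are also shifted right by half the frame. That would cluster detections near both top corners. With matching strides, the two centroids coincide.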

@dbouzolin Thank you for the test.

@matkelcey is the one to answer this.

Also, I don’t understand why the change was needed: the original cut point should work without any modification.


This is the correct way to modify the resolution, but as @OmarShrit says, 1/8 resolution should work well for your boxes. Given what you’ve described above, there is definitely a bug to hunt down first. Apart from that, I suspect going to 1/16 will just make things worse, sorry. Mat
