I’m working on a project with my daughter: a light-up LED skirt. When she spins, jumps or wiggles, it should trigger different effects.

We have it kind of working, but not very reliably. I want to improve it, but I’m not sure what to do.

Repo is here: GitHub - mattvenn/laura_skirt

Project here: laura skirt v2 - Dashboard - Edge Impulse

I’ve been following the continuous motion recognition tutorial, but is it still useful for non-continuous gestures like a jump or a spin?

I’ve only collected about 4 minutes of data, which I know isn’t enough, but I want to get on the right track before recording a lot more, as it’s a pain to do.

All my samples are single shots: record one jump, record one spin, etc.

Should I leave windowing on? I would have thought the data would be better if I selected the relevant part of each sample, rather than windowing through the whole recording, which includes idle time at the start and end.

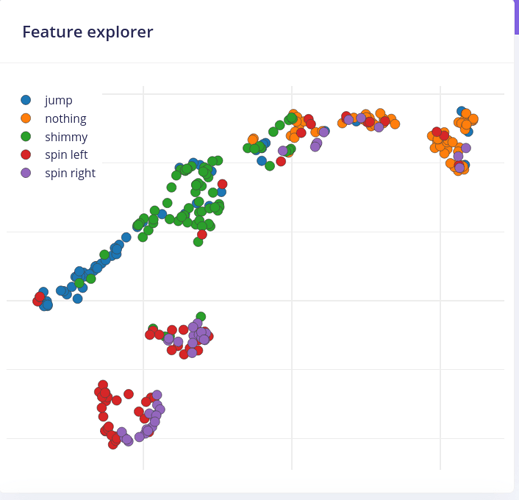

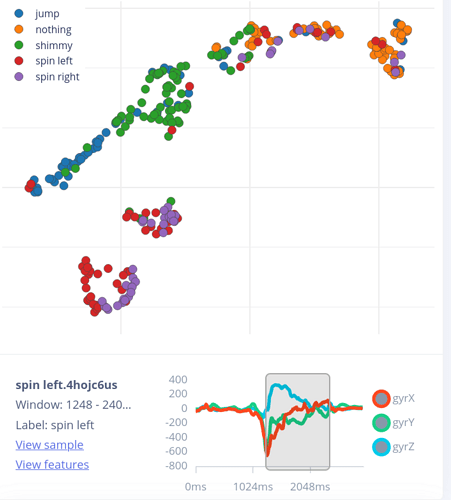
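To make the windowing question concrete, here is a rough Python sketch of the two options as I understand them. It is not Edge Impulse’s actual implementation: `sliding_windows` and `crop_active` are hypothetical helpers, the sample rate, window size and window increase are made-up values, and the recording is fake data with idle time on both sides of a jump.

```python
import numpy as np

# Hypothetical settings, not taken from my project.
SAMPLE_RATE_HZ = 100   # sensor sample rate
WINDOW_MS = 2000       # window size
INCREASE_MS = 80       # window increase (stride between windows)


def sliding_windows(signal, sample_rate_hz, window_ms, increase_ms):
    """Split an (n_samples, n_axes) recording into overlapping windows."""
    win = int(window_ms * sample_rate_hz / 1000)
    step = int(increase_ms * sample_rate_hz / 1000)
    starts = range(0, len(signal) - win + 1, step)
    return [signal[s:s + win] for s in starts]


def crop_active(signal, threshold):
    """Keep only the span where the per-sample magnitude exceeds a threshold."""
    magnitude = np.linalg.norm(signal, axis=1)
    active = np.flatnonzero(magnitude > threshold)
    if active.size == 0:
        return signal[:0]  # nothing above threshold: empty recording
    return signal[active[0]:active[-1] + 1]


# Fake 5 s single-shot recording at 100 Hz: 2 s idle, 1 s "jump", 2 s idle.
idle = np.full((200, 3), 0.01)
jump = np.tile([2.0, -1.5, 3.0], (100, 1))
recording = np.vstack([idle, jump, idle])

windows = sliding_windows(recording, SAMPLE_RATE_HZ, WINDOW_MS, INCREASE_MS)
cropped = crop_active(recording, threshold=0.5)

print(len(windows), "windows from one recording")
print(len(cropped), "samples after cropping to the active part")
```

With these made-up numbers, one 5-second recording becomes 38 overlapping 2-second windows, and since the jump only lasts 1 second, every one of them is at least half idle. Cropping keeps just the 100 samples of the jump. That is the trade-off I’m asking about.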

I can see the features falling roughly into groups:

I can pan and zoom, but I can’t rotate the graph as shown in the video. Do I have to hold a button? I’ve tried both Chrome and Firefox.

Is there a way to remove bad data? I can see some features that are definitely wrong. I can click on them and see the sample, but there doesn’t seem to be a way to remove the feature. I would have thought training would go better if I could remove bad features beforehand.

I’d love to collect data wirelessly; it’s a total pain holding the laptop over my daughter with a wire dangling, and then having to untwist it after every spin. Is that possible? It doesn’t seem to be with the Nano 33 BLE that I have. Does the ESP32 support data capture over Wi-Fi? That would be cool.

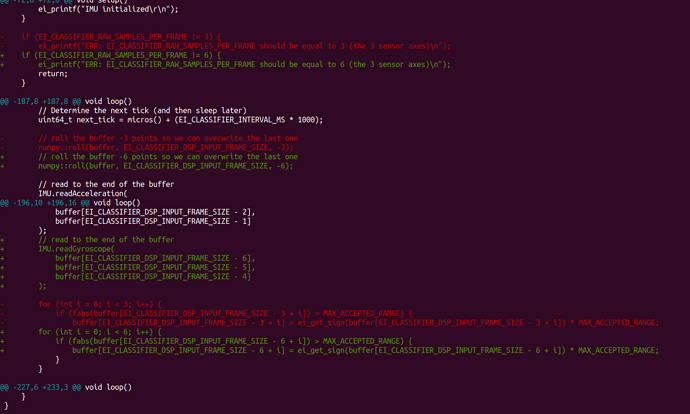

Finally, I thought I got better features from the gyroscope than from the accelerometer, so I used gyrx, gyry and gyrz. That breaks the firmware like this: Problems With Creating/Deploying a model For Arduino - #6 by MMarcial

Is the solution to use the fusion example and delete all the sensors I’m not using?

Anyway, enough questions! Thanks for any help; I’m having fun learning about all this stuff. Thanks for the great tool!

Matt