Hi,

I am a newbie using Edge Impulse to train a Nicla proximity sensor to

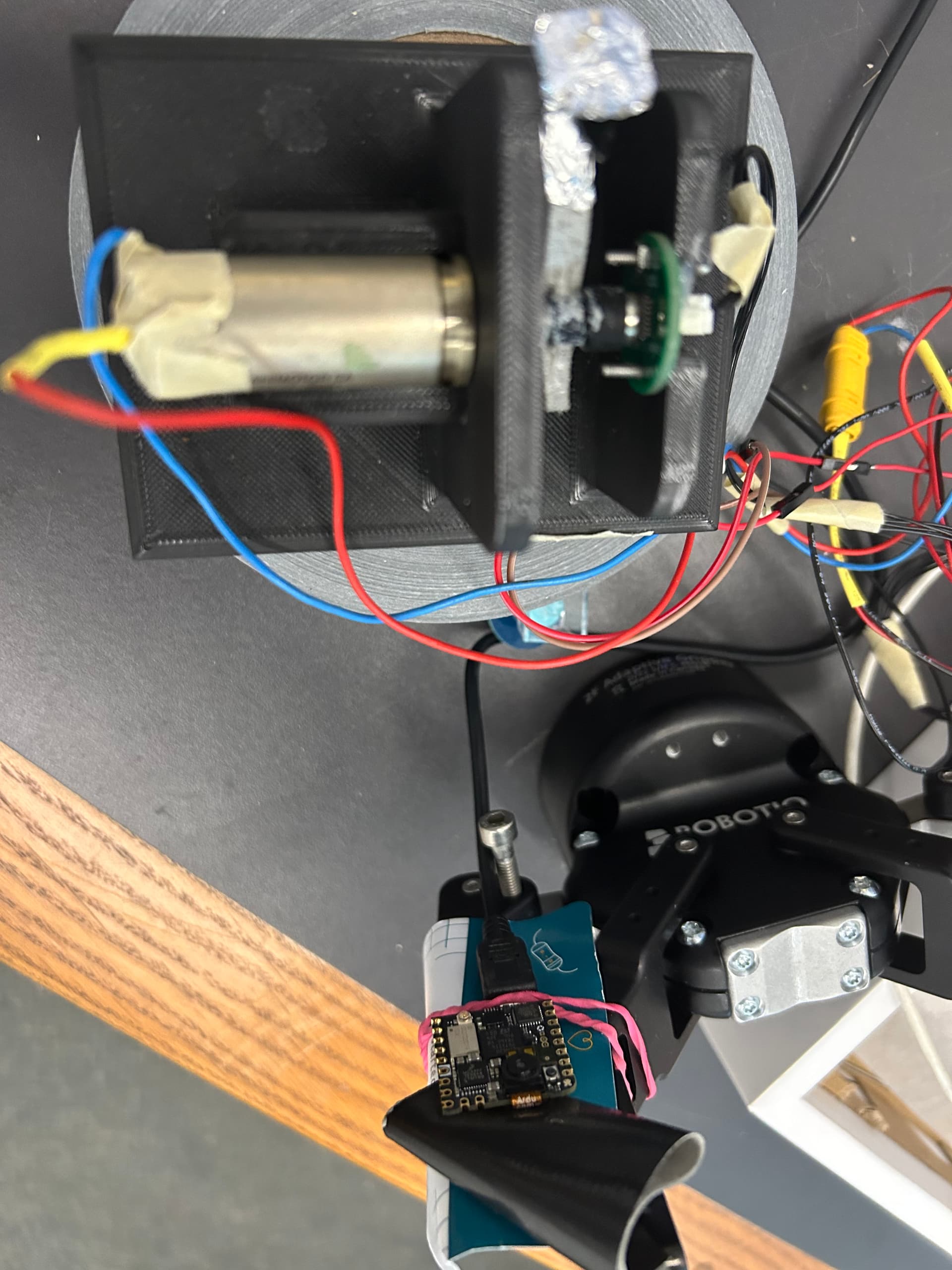

The model works well (I have made it public). I have taped some aluminum foil on the shaft of the motor (+encoder) that goes round and round and in doing so approaches and retreats from the camera. I have been able to use the sample_proximity sensor code in the nicla_vision_ingestion.ino file to successfully train the device to distinguish between a rotating vs stopped motor.

I have deployed the project to my desktop.

My problem is that I cannot find a way to acquire visuals of the motor while also running the classifier (which is based on the proximity sensor).

Should I be modifying the 'nicla_vision_ingestion' program to include the camera feed? Will that work? Any hints/tips?

Thanks

Rav