Hello there,

I have a few questions about the size estimate shown in the Studio versus the actual exported C++ library:

- The Studio shows its estimate:

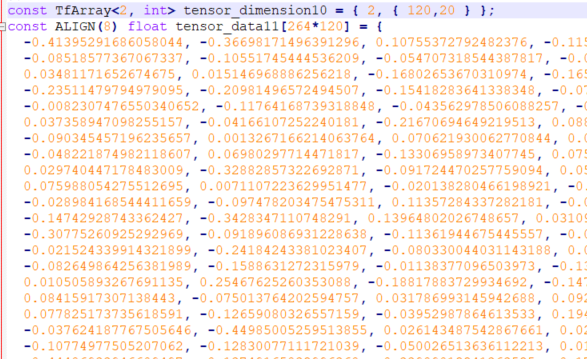

- We exported the library, and I see that one of the compiled model data arrays is ~120 kB in size:

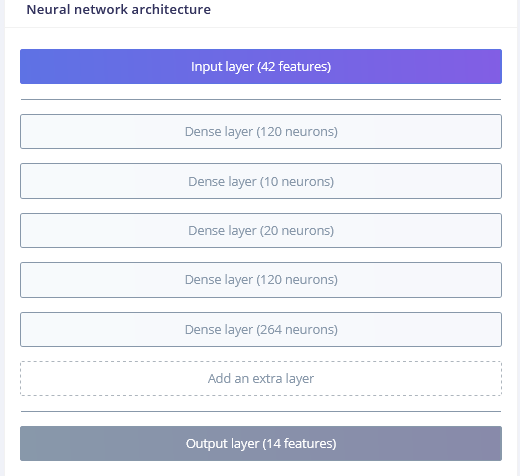

I found that this array is related to the layers’ neurons:

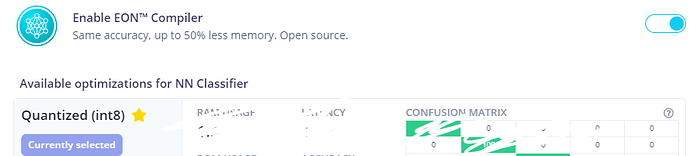

I do not have access to the Studio project; I am implementing the exported library in device firmware. We are using an nRF52832, which should be about the same as an STM32 M4F MCU processor-wise. Also, I am told we are deploying with these settings:

Questions:

- Should I ignore the ROM estimate from the Studio?

- Are we doing something wrong that causes this mismatch?

Thanks in advance