We are trying to develop an orientation detector based on the sound of the hornet in flight.

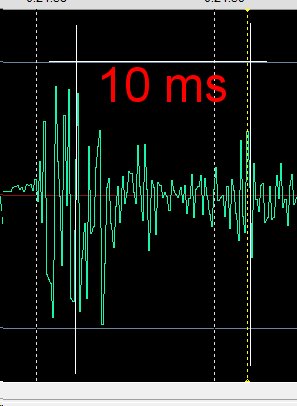

Thanks to a thesis found by Michel, we know that the wing-beat frequency is 100 Hz and that this is characteristic of hornets. Using two microphones spaced 1.2 m apart (which we take to be half a wavelength, and therefore in phase opposition), we hope to capture a maximum offset when the microphone axis is parallel to the flight and a minimum when it is perpendicular to it. Ambitious, isn’t it?
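As a sanity check on the geometry, here is a minimal sketch of the phase offset we would expect between the two microphones. It assumes a speed of sound of 343 m/s (dry air at about 20 °C) and a hornet far enough away that the sound arrives as a plane wave; both are assumptions of mine, not values from the thesis, and the function name is only illustrative. Under these assumptions half a wavelength at 100 Hz comes out nearer 1.7 m than 1.2 m, so with our spacing the maximum offset would be about 126° rather than a full 180°.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value (dry air, about 20 °C)
WINGBEAT_HZ = 100.0     # wing-beat frequency from the thesis
MIC_SPACING_M = 1.2     # spacing of our two microphones


def phase_offset_deg(angle_deg, spacing=MIC_SPACING_M,
                     freq=WINGBEAT_HZ, c=SPEED_OF_SOUND):
    """Phase difference between the microphones for a far-field source.

    angle_deg is the angle between the microphone axis and the direction
    of the source: 0 = source along the axis, 90 = source broadside.
    """
    # Extra path length to the far microphone, converted to a time delay.
    delay_s = spacing * math.cos(math.radians(angle_deg)) / c
    # One full period of the tone corresponds to 360 degrees of phase.
    return 360.0 * freq * delay_s


half_wavelength_m = SPEED_OF_SOUND / WINGBEAT_HZ / 2
print(f"half wavelength at 100 Hz: {half_wavelength_m:.3f} m")
for angle in (0, 30, 60, 90):
    print(f"{angle:3d} deg -> phase offset {phase_offset_deg(angle):6.1f} deg")
```

The offset follows the cosine of the angle, so it changes slowly near the parallel position and fastest near the perpendicular one.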

My question: how should we develop the AI, and starting from what?

We want to observe the difference in sound between two sources. Do we need to:

- make an AI based on listening to the frequency of the hornet’s flight, separate the two sound arrivals (i.e. use stereo recordings), and measure the gap between the two sound tracks?
- make an AI based directly on listening to the sound on a single channel fed by the two microphones, and give it as a basis the different shifts observed by rotating the device?
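To make the first option concrete, measuring the gap between the two tracks could be sketched with a plain cross-correlation, before any AI is involved. This is only a sketch under assumptions: the helper name `estimate_delay_s`, the 8 kHz sample rate, and the 343 m/s speed of sound are mine, and the test signal is synthetic rather than a real recording. Because a 100 Hz tone repeats every 10 ms, the search is limited to delays the 1.2 m spacing can physically produce.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed value
MIC_SPACING_M = 1.2     # spacing of our two microphones


def estimate_delay_s(left, right, sample_rate,
                     max_delay_s=MIC_SPACING_M / SPEED_OF_SOUND):
    """Delay of `right` relative to `left` in seconds (positive if right lags)."""
    left = left - np.mean(left)
    right = right - np.mean(right)
    corr = np.correlate(right, left, mode="full")
    # Lag (in samples) that each entry of `corr` corresponds to.
    lags = np.arange(-(len(left) - 1), len(right))
    # Keep only lags the geometry allows, to avoid picking a later period.
    allowed = np.abs(lags) <= int(round(max_delay_s * sample_rate))
    best_lag = lags[allowed][np.argmax(corr[allowed])]
    return best_lag / sample_rate


# Synthetic stereo pair: a 100 Hz tone, with the right channel 2 ms late.
sample_rate = 8000
t = np.arange(0, 0.2, 1.0 / sample_rate)
true_delay_s = 0.002
left = np.sin(2 * np.pi * 100.0 * t)
right = np.sin(2 * np.pi * 100.0 * (t - true_delay_s))

delay_s = estimate_delay_s(left, right, sample_rate)
# Far-field angle between the microphone axis and the source direction.
cos_angle = np.clip(SPEED_OF_SOUND * delay_s / MIC_SPACING_M, -1.0, 1.0)
angle_deg = float(np.degrees(np.arccos(cos_angle)))
print(f"estimated delay: {delay_s * 1000:.2f} ms, angle: {angle_deg:.1f} deg")
```

The resolution is limited by the sample period, so a higher sample rate or interpolation around the correlation peak would be needed for fine angles.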

I don’t know if I’m expressing the problem correctly.

Thank you for enlightening me.