I’ve set up a keyword matching impulse that works pretty well. After recording hundreds of samples, my keyword matching works great… if I’m sitting at my desk where I did the majority of the recordings. It seems as though when I move my device (ESP32S3) to different locations, such as outside, or even just in a situation where there’s background noise (like the air conditioning), the keyword matching accuracy seems to plummet.

I was looking into expanding my dataset further when I ran across the EI documentation for generating synthetic (AI text-to-speech) datasets. It struck me that I may have been using the wrong approach to my dataset. I had been attempting to make pristine audio samples, with high quality microphones, noise filtered out, pop filters, etc. But the synthetic dataset tutorial indicates that background noise might actually be exactly what’s missing from my dataset.

Quoting that article:

Finally we iterate though all the options generated, call the Google TTS API to generate the desired sample, and apply noise to it, saving locally with metadata

It then goes on to demonstrate that the “noisy” samples are the ones ultimately uploaded to the model, rather than the clean keyword samples.

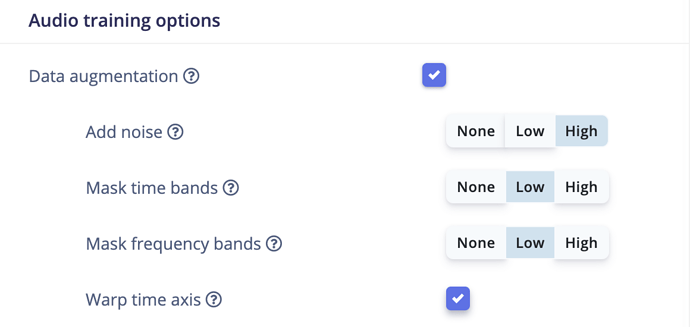

So my big question: Should I refactor my dataset to add background noise to all my samples, rather than trying to remove background noise?

The synthetic dataset tutorial has excellent code samples, I could use my existing dataset to add noise to all samples (perhaps multiple times with different background noises even). But I want to verify if my hypothesis holds any water before doing all that work.

For the record, I think my confusion stemmed from most of the getting started tutorials, like the “Responding to your Voice” documentation and video, which seem to indicate having clean samples is preferable.

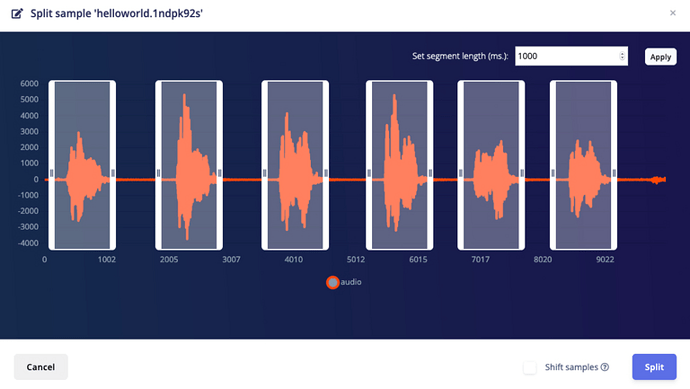

Screenshots such as this example show clearly specified keywords with zero noise between the samples:

I was under the impression that Edge Impulse combined the “noise” dataset with the the desired keyword datasets automatically. Now from the the more advanced documentation, it seems that I was probably incorrect in assuming that was a “behind the scenes” capability of Edge Impulse.