When the Build button on the EI Studio Deployment page is clicked, pop up a form with the defaults populated. At a minimum, the form needs one field, entitled Object Detection Threshold, with a default value of 0.0000000000000000000001.

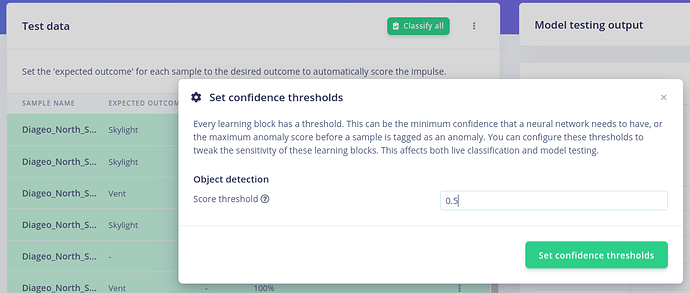

I understand that the Set Confidence Thresholds menu item on the EI Studio Model Testing page is what actually controls the parameter EI_CLASSIFIER_OBJECT_DETECTION_THRESHOLD, which is saved into model_metadata.h. But how is it in any way obvious that this EI Studio setting will control the &results of an inference made via a call to run_classifier()?

—Background—

Perceived FOMO Instability

When I deployed a FOMO model to a Sony Spresense, the model would sometimes identify objects, but usually not. There was no rhyme or reason as to when the model would correctly predict that a FOMO object was present. I've put 19 days into this disconcerting issue, trying everything to make the FOMO model more reliable. Hooking up to Live Classification worked well, usually giving 90% to 100% accuracy. I kept the camera and lighting the same, and I did not move the object to be FOMOed. I would then deploy my Arduino model to the device, and FOMO would report No Objects Found. What? Why?

I studied the EI Spresense Firmware, which uses complex methods for capturing and converting camera data, whereas I simply used the Sony Spresense SDK's built-in functions for those conversions. Could these FrameBuffer manipulation routines be different enough to make FOMO behave unreliably? That is, I suspected that collecting data for EI Studio with the EI Spresense Firmware, but then using the Sony SDK routines in my Arduino code, might be the cause of the FOMO unsteadiness.

I tried this, that, and the other thing. I made new models. I captured new data on the device, saved it to the SD card, then uploaded it to EI Studio. I deleted the bounding boxes file and relabeled all the images. Nothing worked. I was about to cry uncle until I found this gem of a meatball: EI_CLASSIFIER_OBJECT_DETECTION_THRESHOLD.

Changing EI_CLASSIFIER_OBJECT_DETECTION_THRESHOLD from 0.500 to 0.050 let me see FOMO results whenever the object was in the camera's view. When I removed the object from the FOV, FOMO correctly reported No Objects Found.
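For reference, the workaround amounts to changing one line in the model_metadata.h that ships with the exported Arduino library. The file's location and surrounding contents can vary between exports and SDK versions, so treat this as a sketch of the change rather than a verbatim copy of the generated header.

```cpp
// model_metadata.h (generated by EI Studio; edited by hand)
// Detections scoring below this value are dropped from the results.
// Was: #define EI_CLASSIFIER_OBJECT_DETECTION_THRESHOLD 0.500
#define EI_CLASSIFIER_OBJECT_DETECTION_THRESHOLD 0.050
```

A fresh export from the Deployment page will contain a newly generated model_metadata.h, so a hand edit like this would presumably have to be reapplied after every new build, which is one more reason a threshold field on the Build form would help.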

So please, ML Model creators, allow me, the master of my domain, to interpret, translate, decipher, exploit, and act on the model results. The ML Model has no context about the solution it has been deployed into, so do not hide results from the developer. I have yet to come across Edge Impulse documentation that says, "Oh, yeah, you need to edit model_metadata.h."

Perhaps Performance Calibration will make this point moot in the future. But I wonder how many developers came to the platform and found it very easy to build, train, and deploy a model to a device, only to discover that FOMO could not find any objects when running on the device. Naturally, they would quickly move on to other platforms, unwilling to dig into some very obscure settings within EI Studio.